I know I’ve been on a bit of a tear lately about artificial intelligence (AI). I promise e-Literate won’t turn into the “all AI all the time” blog. That said, since I have identified it as a potential factor in a coming tipping point for education, I think it’s important that we sharpen our intuitions about what we can and can’t expect to get from it.

Plus, it’s fun.

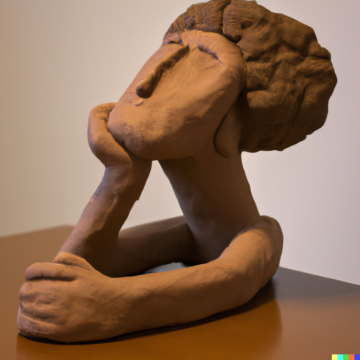

In a recent post, I quoted an interview with experts in the field who were talking about playing with AI tools that can generate images from text descriptions as a way of expressing their creativity. And in my last post, I included an image from one such tool, DALL-E 2, created from the prompt “A copy of the sculpture “The Thinker” made by a third grader using clay.”

In this post, I will use this image and the tool it created as a jumping-off point for exploring the promise and limitations of large cutting-edge AI models.

Interpreting art

To say that I lack well-developed visual skills would be an understatement. When I’m thinking, which is generally whenever I’m awake, I am usually looking at the inside of my skull. I’ve been known to walk into fire hydrants and street signs with some regularity.

My wife, on the other hand, has an eye. She took private sculpture lessons in high school from Stanley Bleifeld. She did a brief stint at art school before turning to English. She teaches our grandchildren art. And she loves Rodin, the sculptor who created the thinker. I picked the image for the post mainly because I liked it. Her reaction to it was, “That looks nothing at all like the original. Where are the hunched shoulders? The crossed elbow? Where’s the tension in the figure? And what’s with the hair? That’s not what a third grader would make.”

So we looked at other options. DALL-E 2 generates four options for each prompt, which you can further play with. Here are the four options that my prompt generated:

The bottom one is the one she thought best captured the original and is most like what a third grader would produce.

The model did well with “clay.” I tested its understanding of materials by asking it to copy the famous sculpture in Jell-O. In all four cases, it captured what Jell-O looks like very well. Here’s the best image I got:

The AI clearly knows what green Jell-O looks like, down to the different shades that can come from light and food coloring. (The Jello-O mold as the seat is a nice touch.) That’s not surprising. The AI likely had many examples of well-labeled images of Jello-O on the internet.

It struggled with two aspects of the problem I gave it to solve. First, what are the salient features of The Thinker in terms of its artistic merit? Which details matter the most? And second, how would artists at different ages and developmental stages see and capture those features?

Let’s look at each in turn.

Artistic detail

My wife has already given us a pretty good list of some salient features of the sculpture. The subject is literally and figuratively pensive. (Puns intended.) Can we get the AI to capture the art in work? My first experiment was to try asking it to interpret the sculpture through the lens of another artist. So, for example, here’s what I got when I asked it to show me a painting of the sculpture by Van Gogh:

Interesting. It gets some of the tension and some of the balance between detail and lack of detail (although that balance is also consistent with Impressionist painting). But all four of the thinker images I got back for this prompt had Van Gogh’s head on them. This is probably because Van Gogh’s famous portraiture is self-portraiture. What if we tried a renowned portrait artist like Rembrandt?

I’m not sure I would describe this figure as pensive, exactly. To my (poor) eye, the tension isn’t there. Also, all four examples came back with the same white hat and ruffle. The AI has fixated on those details as essential to Rembrandt’s portraits.

What if we stretched the model a bit by trying a less conventional artist? Here’s an example using Salvador Dalí as the artist:

Painting of the sculpture The Thinker by Salvador Dalí as interpreted by DALL-E 2.

Hmm. I’ll leave it to more visual folks to comment on this one. It doesn’t help me. I will note that all four images the AI gave me had that strange tail coming out of the back of the head. It has made a generalization about Dalí’s portraiture.

I won’t show you DALL-E 2’s interpretation of Hieronymus Bosch’s version of The Thinker, not because it’s gross but because it just didn’t work at all.

DALL-E 2 is a language model tacked onto an image model. It’s interpreting the words of the prompt based on analyzing a large corpus of text (e.g., the internet) and mashing that up with visual features it’s learned from analyzing a large corpus of images (e.g., the internet). But the connection between the two is loose. For example, even though it’s probably digested many descriptions and analyses of The Thinker, it doesn’t translate that information to the visual model. My guess is that if I built a chatbot using the underlying GPT-3 language model and asked it about the features that are considered important in The Thinker as a work of art, it could tell me. DALL-E 2 doesn’t translate that information about salient features into images.

How could you fix this if you wanted to build an application that can visually re-interpret works of art while preserving the essential features of the original? I’m going to speculate here because this gets beyond my competence. These models can be tuned by training them on special corpi of information. I’m told they’re not easy to tune; they’re so complex that their behavior can be unpredictable. But, for example, you could try to amplify the art history analyses in the information that gets sent from the language model to the visual model. I’m not sure how one would get the salient features picked up by the former to be interpreted by the latter. Maybe it could elaborate on your prompt to include details that you didn’t. I don’t know. Remember my post about the miracle, the grind, and the wall in AI? This would be the grind. It would be a lot of hard work.

Artistic development

Getting the salient details of art is hard enough. But I also asked it to interpret those details not through the eyes of a specific artist but through the eyes of a person at a particular developmental level. A third grader. GPT-3 does have a model of sorts for this. If you ask it to give answers that are appropriate for a tenth grader, you will get a different result than if you ask it to respond to a first-year college student. Much of the content it was trained on undoubtedly was labeled for grade level and/or reading level. It doesn’t “know” how tenth graders think but it’s seen a lot of text that it “knows” were written for tenth graders. It can imitate that. But how does it translate that into artistic development?

Here’s what I got when I asked DALL-E 2 to show me clay copies of The Thinker created by “an artistic eighth grader”:

These are, on the whole, worse. We want to see sculptures that more accurately capture the artistically salient features of the original. Instead, we get more hair and more paint.

How could you get the model to capture artistic development? Again, I’ll speculate as a layperson. The root of the problem may well be in the training data. The internet has many, many images. But it doesn’t have a large and well-labeled set of images showing the same source image (like the Rodin sculpture) being copied by students at different age levels using different media (e.g., clay, watercolors, etc.). If so, then we’ve hit the wall. Generating that set of training data may very well be out of reach for the software developers.

Language is weird

I’ll throw one more example in just for fun. By this point in my experiment, I had gotten bored with The Thinker and was just messing around. I asked the AI to show me “a watercolor painting of Harry Potter in a public restroom.” Now, there are two ways of parsing this sentence. I could have been asking for “(a watercolor painting of Harry Potter) in a public restroom” or “a watercolor painting of (Harry Potter in a public restroom)”. DALL-E 2 couldn’t decide which one I was asking for, so it gave me both in one image:

These models are tricky to work with because it’s very easy for us to wander into territory where we’re recruiting multiple complex aspects of human cognition such as visual processing, language processing, and domain knowledge. It’s hard to anticipate the edge cases. That’s why most practical AI tools today do not use free-form prompts. Instead, they limit the user’s choices through the user interface to ensure that the request is one that the AI has a reasonable chance of responding to in a useful way. And even then, it’s tricky stuff.

If you’re a layperson interested in exploring these big language models more, I recommend reading Janelle Shane’s AI Weirdness blog and her book You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place.

- SEO Powered Content & PR Distribution. Get Amplified Today.

- Platoblockchain. Web3 Metaverse Intelligence. Knowledge Amplified. Access Here.

- Source: https://eliterate.us/seeing-the-future-developing-intuitions-about-artificial-intelligence/

- 1

- a

- About

- accurately

- Ages

- AI

- All

- already

- Although

- analyzing

- and

- Another

- answers

- anticipate

- Application

- appropriate

- around

- Art

- artificial

- artificial intelligence

- Artificial intelligence (AI)

- artist

- artistic

- Artists

- aspects

- back

- Balance

- based

- because

- before

- being

- BEST

- between

- Beyond

- Big

- Bit

- Blog

- book

- Bored

- Bottom

- build

- built

- capture

- cases

- Chance

- chatbot

- choices

- clearly

- College

- come

- coming

- comment

- complex

- connection

- considered

- consistent

- content

- conventional

- could

- created

- creativity

- Crossed

- cutting-edge

- dall-e

- data

- describe

- detail

- details

- developers

- developing

- Development

- developmental

- DID

- different

- Doesn’t

- domain

- Dont

- down

- each

- Edge

- Education

- Eighth

- Elaborate

- English

- enough

- ensure

- essential

- etc

- Even

- exactly

- example

- examples

- expect

- experiment

- experts

- Exploring

- eye

- Eyes

- famous

- Features

- field

- Figure

- Fire

- First

- Fix

- food

- Former

- from

- fun

- further

- future

- generally

- generate

- generated

- generates

- generating

- get

- Give

- given

- going

- good

- grade

- Green

- gross

- Hair

- Hard

- hard work

- hat

- head

- help

- here

- High

- history

- Hit

- How

- HTTPS

- human

- I’LL

- identified

- image

- images

- important

- in

- include

- included

- information

- instead

- Intelligence

- interested

- Interface

- Internet

- interpretation

- Interview

- IT

- Know

- knowledge

- known

- Lack

- language

- large

- Last

- learned

- Leave

- Lens

- Lessons

- Level

- levels

- Licensed

- light

- likely

- LIMIT

- limitations

- List

- Look

- look like

- looked

- looking

- LOOKS

- Lot

- love

- made

- make

- Making

- many

- materials

- Matter

- Media

- Merit

- model

- models

- more

- most

- multiple

- ONE

- Options

- original

- Other

- paint

- painting

- particular

- person

- picked

- plato

- Plato Data Intelligence

- PlatoData

- Play

- playing

- Point

- poor

- portrait

- Post

- potential

- Practical

- pretty

- private

- probably

- Problem

- processing

- produce

- promise

- public

- reach

- reaction

- Reading

- reasonable

- recent

- recommend

- recruiting

- remember

- Renowned

- request

- Respond

- result

- root

- Said

- Salvador

- same

- School

- Second

- seeing

- sentence

- set

- show

- Signs

- since

- skills

- So

- Software

- Software Developers

- SOLVE

- some

- Source

- special

- specific

- stages

- street

- Student

- Students

- subject

- such

- surprising

- talking

- terms

- The

- The Future

- the information

- the world

- their

- There.

- thing

- Thinking

- Third

- thought

- Through

- Tipping

- to

- today

- tool

- tools

- touch

- trained

- Training

- translate

- TURN

- Turning

- under

- underlying

- understanding

- undoubtedly

- unpredictable

- us

- use

- User

- User Interface

- usually

- version

- wanted

- ways

- What

- which

- while

- white

- WHO

- wife

- Wikipedia

- will

- words

- Work

- works

- world

- would

- written

- Your

- zephyrnet