A new technical paper titled “Hyperscale Hardware Optimized Neural Architecture Search” was published by researchers at Google, Apple, and Waymo.

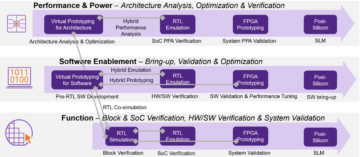

“This paper introduces the first Hyperscale Hardware Optimized Neural Architecture Search (H2O-NAS) to automatically design accurate and performant machine learning models tailored to the underlying hardware architecture. H2O-NAS consists of three key components: a new massively parallel ‘one-shot’ search algorithm with intelligent weight sharing, which can scale to search spaces of O(10^280) and handle large volumes of production traffic; hardware-optimized search spaces for diverse ML models on heterogeneous hardware; and a novel two-phase hybrid performance model and a multi-objective reward function optimized for large scale deployments,” states the paper.

Find the technical paper here. Published in 2023.

Sheng Li, Garrett Andersen, Tao Chen, Liqun Cheng, Julian Grady, Da Huang, Quoc V. Le, Andrew Li, Xin Li, Yang Li, Chen Liang, Yifeng Lu, Yun Ni, Ruoming Pang, Mingxing Tan, Martin Wicke, Gang Wu, Shengqi Zhu, Parthasarathy Ranganathan, and Norman P. Jouppi. 2023. Hyperscale Hardware Optimized Neural Architecture Search. In Proceedings of the 28th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, Volume 3 (ASPLOS 2023). Association for Computing Machinery, New York, NY, USA, 343–358. https://doi.org/10.1145/3582016.3582049

- Source: https://semiengineering.com/hyperscale-hw-optimized-neural-architecture-search-google/