Generated with Midjourney

Enterprises in every industry and corner of the globe are rushing to integrate the power of large language models (LLMs) like OpenAI’s ChatGPT, Anthropic’s Claude, and AI21 Labs’ Jurassic to boost performance in a wide range of business applications, such as market research, customer service, and content generation.

However, building an LLM application at enterprise scale requires a different toolset and understanding than building traditional machine learning (ML) applications. Business leaders and executives who want to preserve brand voice and reliable service quality need to develop a deeper understanding of how LLMs work and of the pros and cons of the various tools in an LLM application stack.

In this article, we’ll give you an essential introduction to the high-level strategy & tools you’ll need to build and run an LLM application for your business.

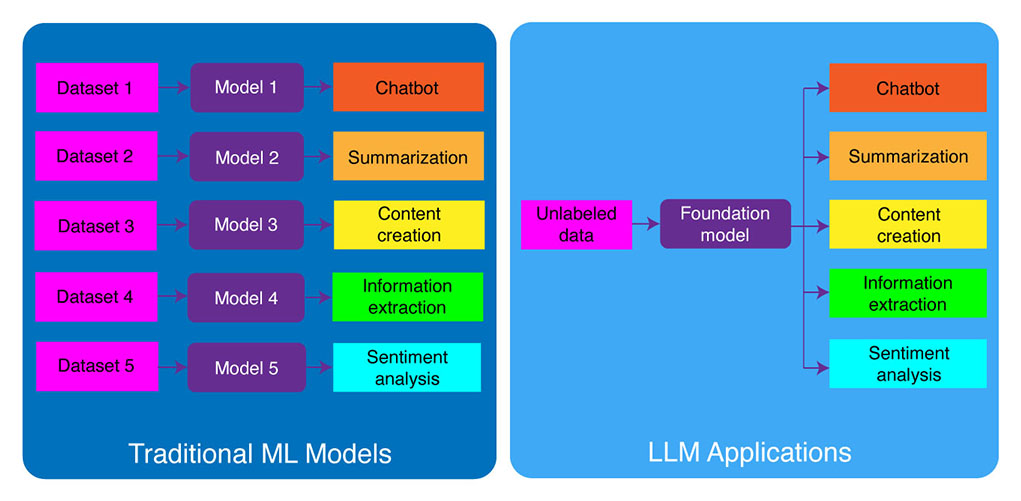

Traditional ML Development vs. LLM Applications

Traditional machine learning models were task-specific, meaning that you needed to build a separate model for each different task. For example, if you wanted to analyze customer sentiment, you would need to build one model, and if you wanted to build a customer support chatbot, you would need to build another model.

This process of building and training task-specific ML models is time-consuming and requires a lot of data. The kind of datasets needed to train these different ML models would also vary depending on the task. To train a model to analyze customer sentiment, you would need a dataset of customer reviews that have been labeled with a corresponding sentiment (positive, negative, neutral). To train a model to build a customer support chatbot, you would need a dataset of conversations between customers and technical support.

Large language models have changed this. LLMs are pre-trained on a massive dataset of text and code, which allows them to perform well on a wide range of tasks out of the box, including:

- Text summarization

- Content creation

- Translation

- Information extraction

- Question answering

- Sentiment analysis

- Customer support

- Sales support

The process of developing LLM applications can be broken down into four essential steps:

- Choose an appropriate foundation model. It’s a key component, defining the performance of your LLM application.

- Customize the model, if necessary. You may need to fine-tune the model or augment it with an additional knowledge base to meet your specific needs.

- Set up ML infrastructure. This includes the hardware and software needed to run your application (e.g., semiconductors, chips, cloud hosting, inference, and deployment).

- Augment your application with additional tools. These tools can help improve your application’s efficiency, performance, and security.

Now, let’s take a look at the corresponding technology stack.


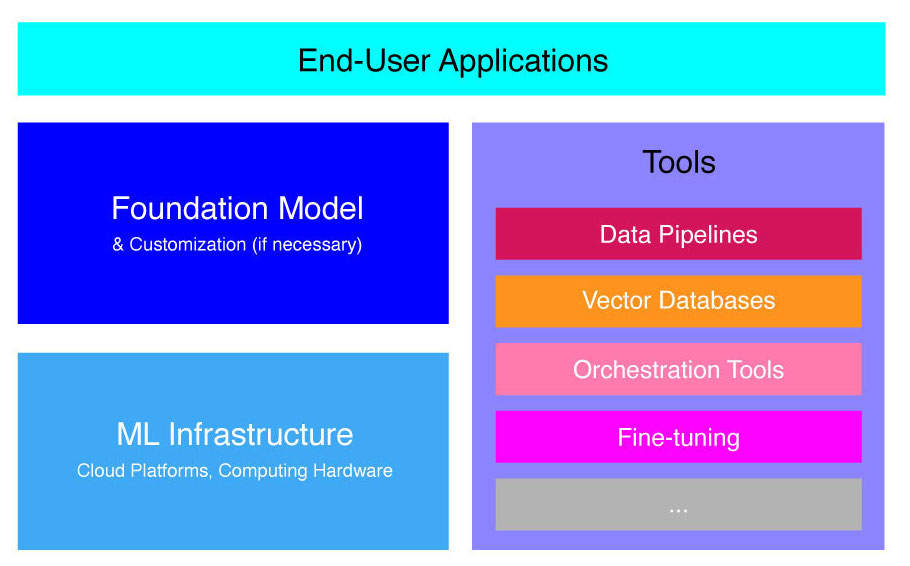

High-Level LLM Application Stack

LLM applications are built on top of several key components, including:

- A foundation model, which might require customization in specific use cases.

- ML infrastructure that provides sufficient computing resources, via cloud platforms or the company’s own hardware.

- Additional tools, such as data pipelines, vector databases, orchestration tools, fine-tuning ML platforms, model performance monitoring tools, etc.

We’re going to briefly walk you through these components so you can better understand the toolkit needed to build and deploy an LLM application.

What Are Foundation Models?

Using a single pre-trained LLM can save you a lot of time and resources. However, training such a model from the ground up is a time-consuming and costly process that is beyond the capabilities of most companies, except for an elite few technology leaders.

Several companies and research teams have trained these models and allow other companies to use them. Leading examples include ChatGPT, Claude, Llama, Jurassic, and T5. These public-facing models are called foundation models. Some of them are proprietary and can be accessed via API calls for a fee. Others are open-sourced and can be used for free. These models are pre-trained on a massive dataset of unlabeled textual data, enabling them to perform a wide range of tasks, from generating creative ad copy to communicating with your customers in their native language on behalf of the company.

There are two main types of foundation models: proprietary and open-source.

Proprietary models are owned by a single company or organization and are typically only available for a fee. Some of the most popular examples of proprietary models include GPT models by OpenAI, Claude models by Anthropic, and Jurassic models by AI21 Labs.

Open-source models are usually available for free to anyone who wants to use them. However, some open-source models have limitations on their use, such as (1) only being available for research purposes, or (2) only being available for commercial use by companies of a certain size. The open-source community argues that a model with such restrictions doesn’t qualify as “open-source.” Still, the most prominent examples of language models that can be used for free include Llama models by Meta, Falcon models by the Technology Innovation Institute in Abu Dhabi, and StableLM models by Stability AI. Read more about open-source models and the associated risks here.

Now let’s discuss several factors to consider when choosing a foundation model for your LLM application.

Choose a Foundation Model

Selecting the best foundation model for your LLM application can be a challenging process, but it can be broken down into three steps:

- Choose between proprietary and open-source models. Proprietary models are typically larger and more capable than open-source models, but they can be more expensive to use and less flexible. Additionally, the code is not as transparent, which makes it difficult to debug or troubleshoot problems with proprietary models’ performance. Open-source models, on the other hand, usually get fewer updates and less support from the developers.

- Choose the size of the model. Larger models are usually better at performing tasks that require a lot of knowledge, such as answering questions or generating creative text. However, larger models are also more computationally expensive to use. You may start by experimenting with larger models, and then go to smaller ones as long as a model’s performance is satisfactory for your use case.

- Select a specific model. You may start by reviewing the general benchmarks to shortlist the models for testing. Then, proceed with testing different models on your application-specific tasks. For custom benchmarking, consider calculating BLEU and ROUGE scores, metrics that measure how closely AI-generated text overlaps with human-written reference text. In human-in-the-loop applications, they serve as a rough proxy for how many corrections the output needs before release.
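To make the custom benchmarking step more concrete, here is a minimal sketch of the word-overlap idea behind BLEU and ROUGE. It is a simplification under stated assumptions: it only counts single words (unigrams), uses naive whitespace tokenization, and the function name `unigram_overlap` is ours for illustration. Full BLEU and ROUGE implementations add longer n-grams, brevity penalties, and other refinements.

```python
from collections import Counter


def _tokens(text: str) -> list[str]:
    # Naive tokenization: lowercase and split on whitespace (an assumption;
    # real evaluations usually use a proper tokenizer).
    return text.lower().split()


def unigram_overlap(candidate: str, reference: str) -> dict[str, float]:
    """Return BLEU-style unigram precision and ROUGE-1-style recall."""
    cand = Counter(_tokens(candidate))
    ref = Counter(_tokens(reference))
    # Clipped overlap: a word counts at most as often as it appears in both texts.
    overlap = sum((cand & ref).values())
    cand_total = sum(cand.values())
    ref_total = sum(ref.values())
    precision = overlap / cand_total if cand_total else 0.0
    recall = overlap / ref_total if ref_total else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Illustrative strings only, not real benchmark data.
    print(unigram_overlap(
        "the order ships on monday",
        "your order ships on monday morning",
    ))
```

Precision tells you how much of the generated text is supported by the reference, while recall tells you how much of the reference the generated text covers. Tracking both across candidate models on your own examples gives a quick first comparison before human review.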

For a better understanding of the differences between various language models, check out our overview of the most powerful language (LLM) and visual language models (VLM).

After you have chosen a foundation model for your application, you can consider whether you need to customize the model for even better performance.

Customize a Foundation Model

In some cases, you may want to customize a foundation language model for better performance in your specific use case. For example, you may want to optimize for a certain:

- Domain. If you operate in specific domains, such as legal, financial, or healthcare, you may want to enrich the model’s vocabulary in this domain so it can better understand and respond to end-user queries.

- Task. For example, if you want the model to generate marketing campaigns, you may provide it with specific examples of branded marketing content. This will help the model learn the patterns and styles that are appropriate for your company and audience.

- Tone of voice. If you need the model to use a specific tone of voice, you can customize the model on a dataset that includes examples of your target linguistic samples.

There are three possible ways to customize a foundation language model:

- Fine-tuning: provides the model with a domain-specific labeled dataset of about 100-500 records. The model weights are updated, which should result in better performance on the tasks represented by this dataset.

- Domain adaptation: provides the model with a domain-specific unlabeled dataset that contains a large corpus of data from the corresponding domain. The model weights are also updated in this case.

- Information retrieval: augments the foundation model with closed-domain knowledge. The model is not re-trained, and the model weights stay the same. However, the model is allowed to retrieve information from a vector database containing relevant data.

The first two approaches require significant computing resources to retrain the model, which is usually only feasible for large companies with the appropriate technical talent to manage the customization. Smaller companies typically use the more common approach of augmenting the model with domain knowledge through a vector database, which we detail later in this article in the section on LLM tools.

Set up ML Infrastructure

The ML infrastructure component of the LLMOps landscape includes the cloud platforms, computing hardware, and other resources that are needed to deploy and run LLMs. This component is especially relevant if you choose to use an open-source model or customize the model for your application. In this case, you may need significant computing resources to fine-tune the model, if necessary, and run it.

There are a number of cloud platforms that offer services for deploying LLMs, including Google Cloud Platform, Amazon Web Services, and Microsoft Azure. These platforms provide a number of features that make it easy to deploy and run LLMs, including:

- Pre-trained models that can be fine-tuned for your specific application

- Managed infrastructure that takes care of the underlying hardware and software

- Tools and services for monitoring and debugging your LLMs

The amount of computing resources you need will depend on the size and complexity of your model, the tasks you want it to perform, and the scale of the business activity where you want to deploy this model.
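As a rough illustration of how model size drives hardware needs, the sketch below estimates the memory required just to hold a model's weights, using the common rule of thumb of parameter count times bytes per parameter. This rule of thumb and the 7-billion-parameter example are our assumptions for illustration; real deployments also need memory for activations, caches, and serving overhead, so treat the result as a lower bound.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Estimate memory (in GB, 1 GB = 1e9 bytes) needed to hold model weights only."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model at different precisions.
    for precision in BYTES_PER_PARAM:
        print(precision, weight_memory_gb(7e9, precision), "GB")
```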

Augment With Tools

Additional LLM-adjacent tools can be used to further enhance the performance of your LLM application.

Data Pipelines

If you need to use your data in your LLM product, the data preprocessing pipeline will be an essential pillar of your new tech stack, just like in the traditional enterprise AI stack. These tools include connectors to ingest data from any source, a data transformation layer, and downstream connectors. Leading data pipeline providers, such as Databricks and Snowflake, and new players, like Unstructured, make it easy for developers to point large and highly heterogeneous corpora of natural language data (e.g., thousands of PDFs, PowerPoint presentations, chat logs, scraped HTML, etc.) to a single point of access or even into a single document that can be further used by LLM applications.
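The transformation layer of such a pipeline can be sketched in a few lines. The example below is a simplified illustration, not how any of the providers named above work internally: it strips HTML tags with a naive regular expression, collapses whitespace, and splits the text into overlapping word-based chunks. The function names and the default chunk sizes are assumptions chosen for illustration.

```python
import re


def clean_text(raw: str) -> str:
    """Strip HTML-like tags (naively) and collapse runs of whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    return re.sub(r"\s+", " ", no_tags).strip()


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into chunks of `chunk_size` words, sharing `overlap` words."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    page = "<p>Refunds are   processed within <b>five</b> business days.</p>"
    print(chunk_words(clean_text(page), chunk_size=4, overlap=1))
```

The overlap between neighboring chunks is a common design choice: it reduces the chance that a sentence relevant to a query is cut in half at a chunk boundary.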

Vector Databases

Large language models can only process a limited amount of text at a time (their context window), so they can’t effectively process large documents or document collections on their own. To harness the power of large documents, businesses typically use vector databases.

Vector databases are storage systems that hold the large documents they receive through data pipelines as manageable vectors, or embeddings: the documents are split into chunks, and an embedding model converts each chunk into a numeric vector. LLM applications can then query these databases to pinpoint the most relevant vectors, extracting only the necessary nuggets of information.

Some of the most prominent vector databases currently available are Pinecone, Chroma, and Weaviate.
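To show the idea behind querying a vector database, here is a toy in-memory sketch. It does not use the API of Pinecone, Chroma, or Weaviate. The `embed` function is a deliberately crude stand-in that counts words, where a real system would call an embedding model, and the class name `ToyVectorStore` is hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Keeps (text, vector) pairs in memory and ranks them by similarity."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    store.add("refunds are processed within five days")
    store.add("our office is closed on sunday")
    print(store.search("how long do refunds take", k=1))
```

Production vector databases follow the same store-then-rank pattern, but they rely on learned embeddings that capture meaning rather than exact word matches, and on approximate search indexes that scale to millions of vectors.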

Orchestration Tools

When a user submits a query to your LLM application, such as a question for customer service, the application needs to construct a series of prompts before submitting this query to the language model. The final request to the language model is typically composed of a prompt template hard-coded by the developer, examples of valid outputs called few-shot examples, any necessary information retrieved from external APIs, and a set of relevant documents retrieved from the vector database. Orchestration tools from companies like LangChain or LlamaIndex can help to streamline this process by providing ready-to-use frameworks for managing and executing prompts.
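The prompt assembly described above can be sketched with nothing more than a string template. This is an illustration of the concept, not the LangChain or LlamaIndex API: the template wording, the `build_prompt` function, and the sample data are all assumptions made for this example.

```python
from string import Template

# Prompt template hard-coded by the developer (wording is illustrative).
PROMPT_TEMPLATE = Template(
    "You are a customer service assistant.\n\n"
    "Examples:\n$examples\n\n"
    "Context:\n$context\n\n"
    "Question: $question\nAnswer:"
)


def build_prompt(
    question: str,
    few_shot: list[tuple[str, str]],
    documents: list[str],
) -> str:
    """Combine the template, few-shot examples, and retrieved documents."""
    examples = "\n".join(f"Q: {q}\nA: {a}" for q, a in few_shot)
    context = "\n".join(f"- {doc}" for doc in documents)
    return PROMPT_TEMPLATE.substitute(
        examples=examples, context=context, question=question
    )


if __name__ == "__main__":
    prompt = build_prompt(
        question="Where is my order?",
        few_shot=[("Can I return an item?", "Yes, within 30 days of delivery.")],
        documents=["Orders ship within two business days."],
    )
    print(prompt)
```

Orchestration frameworks wrap this same pattern with reusable components for retrieval, API calls, and multi-step prompt chains, so you don't have to maintain the glue code yourself.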

Fine-tuning

Large language models trained on massive datasets can produce grammatically correct and fluent text. However, they may lack precision in certain areas, such as medicine or law. Fine-tuning these models on domain-specific datasets allows them to internalize the unique features of those areas, enhancing their ability to generate relevant text.

Fine-tuning an LLM can be a cost-intensive process for small companies. However, solutions from companies like Weights & Biases and OctoML can help with streamlined and efficient fine-tuning. These solutions provide a platform for companies to fine-tune LLMs without having to invest in their own infrastructure.

Other Tools

There are many other tools that can be useful for building and running LLM applications. For example, you may need labeling tools if you want to fine-tune the model with your specific data samples. You may also want to deploy specific tools to monitor the performance of your application, as even minor changes to the foundation model or requests from customers can significantly impact the performance of prompts. Finally, there are tools that monitor model safety to help you avoid promoting hateful content, dangerous recommendations, or biases. The necessity and importance of these different tools will depend on your specific use case.
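One simple form of performance monitoring is a regression check: a fixed set of prompts with expected answers that you rerun whenever the foundation model or your prompts change. The sketch below assumes the model is exposed as a plain function from prompt to text. `fake_model` is a hypothetical stand-in so the example runs without any external service, and the substring match is a deliberately crude pass criterion.

```python
from typing import Callable


def pass_rate(model: Callable[[str], str], cases: list[tuple[str, str]]) -> float:
    """Fraction of cases whose expected phrase appears in the model output."""
    if not cases:
        return 0.0
    passed = sum(
        1 for prompt, expected in cases
        if expected.lower() in model(prompt).lower()
    )
    return passed / len(cases)


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; returns canned answers.
    if "refund" in prompt.lower():
        return "Refunds are processed within five days."
    return "I'm not sure."


if __name__ == "__main__":
    cases = [
        ("How long does a refund take?", "five days"),
        ("What are your opening hours?", "9 to 5"),
    ]
    print(f"pass rate: {pass_rate(fake_model, cases):.0%}")
```

A drop in the pass rate after a model update is a signal to review your prompts before customers notice the change.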

What’s Next in LLM Application Development?

The four steps for LLM product development that we discussed here are an essential foundation of any enterprise generative AI strategy that leverages large language models. They are important for non-technical business leaders to understand, even if you have a technical team implementing the details. We will publish more detailed tutorials in the future on how to leverage the wide range of generative AI tools on the market. For now, you can subscribe to our newsletter to get the latest updates.


- Source: https://www.topbots.com/llm-product-development-technology-stack/
