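Today, we are excited to announce that the Mixtral-8x7B large language model (LLM), developed by Mistral AI, is available for customers through Amazon SageMaker JumpStart to deploy with one click for running inference. The Mixtral-8x7B LLM is a pretrained sparse mixture of experts model, based on a 7-billion parameter backbone with eight experts per feed-forward layer. You can try out this model with SageMaker JumpStart, a machine learning (ML) hub that provides access to algorithms and models so you can quickly get started with ML. In this post, we walk through how to discover and deploy the Mixtral-8x7B model.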

What is Mixtral-8x7B

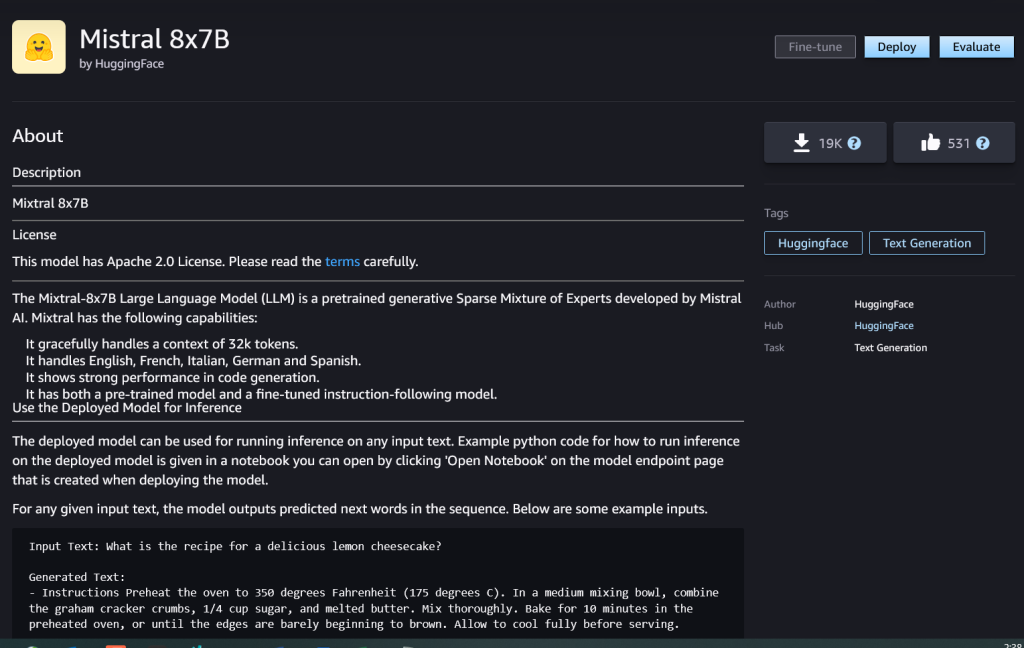

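Mixtral-8x7B is a foundation model developed by Mistral AI. It supports English, French, German, Italian, and Spanish text, and can generate code. It supports a variety of use cases such as text summarization, classification, text completion, and code completion, and it behaves well in chat mode. To demonstrate how easily the model can be customized, Mistral AI has also released a Mixtral-8x7B Instruct model for chat use cases, fine-tuned on a variety of publicly available conversation datasets. Mixtral models have a context length of up to 32,000 tokens.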

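Mixtral-8x7B provides significant performance improvements over previous state-of-the-art models. Its sparse mixture of experts architecture enables it to achieve better results on 9 out of 12 natural language processing (NLP) benchmarks tested by Mistral AI. Mixtral matches or exceeds the performance of models up to 10 times its size. Because it uses only a fraction of its parameters for each token, it achieves faster inference and lower computational cost than dense models of equivalent size: for example, it has 46.7 billion parameters in total but uses only 12.9 billion per token. This combination of high performance, multilingual support, and computational efficiency makes Mixtral-8x7B an appealing choice for NLP applications.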
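To make the sparse mixture of experts idea concrete, here is a toy sketch of one layer routing a single value through eight experts. It assumes top-2 routing (the router keeps the two highest-scoring experts for each token and weights them with a softmax over just those two), which matches Mistral AI's public description of Mixtral but is not stated in this post. The scalar "experts" are hypothetical stand-ins for feed-forward networks. The last lines compute the share of parameters active per token from the figures above.

```python
import math
from typing import Callable, List, Sequence

def top2_moe(x: float, router_logits: Sequence[float],
             experts: Sequence[Callable[[float], float]]) -> float:
    """Combine the outputs of the two highest-scoring experts (assumed top-2 routing)."""
    # Indices of the two largest router logits (ties keep their original order)
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:2]
    # Softmax over the selected logits only, so the two gate weights sum to 1
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    gates = [e / total for e in exps]
    # Only the two selected experts are evaluated; the other six are skipped
    return sum(g * experts[i](x) for g, i in zip(gates, top))

# Eight hypothetical toy experts: expert k multiplies its input by (k + 1)
experts: List[Callable[[float], float]] = [lambda v, k=k: v * (k + 1) for k in range(8)]
logits = [0.1, 2.0, -1.0, 0.0, 2.0, 0.3, -0.5, 0.2]
print(top2_moe(1.0, logits, experts))  # 3.5 (experts 1 and 4 tie: 0.5*2 + 0.5*5)

# Share of parameters active per token, using the totals quoted above (in billions)
total_params, active_params = 46.7, 12.9
print(f"{active_params / total_params:.1%}")  # 27.6%
```

The active share is a little above 2/8 because weights outside the expert feed-forward layers, such as attention, are used by every token.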

The model is made available under the permissive Apache 2.0 license, for use without restrictions.

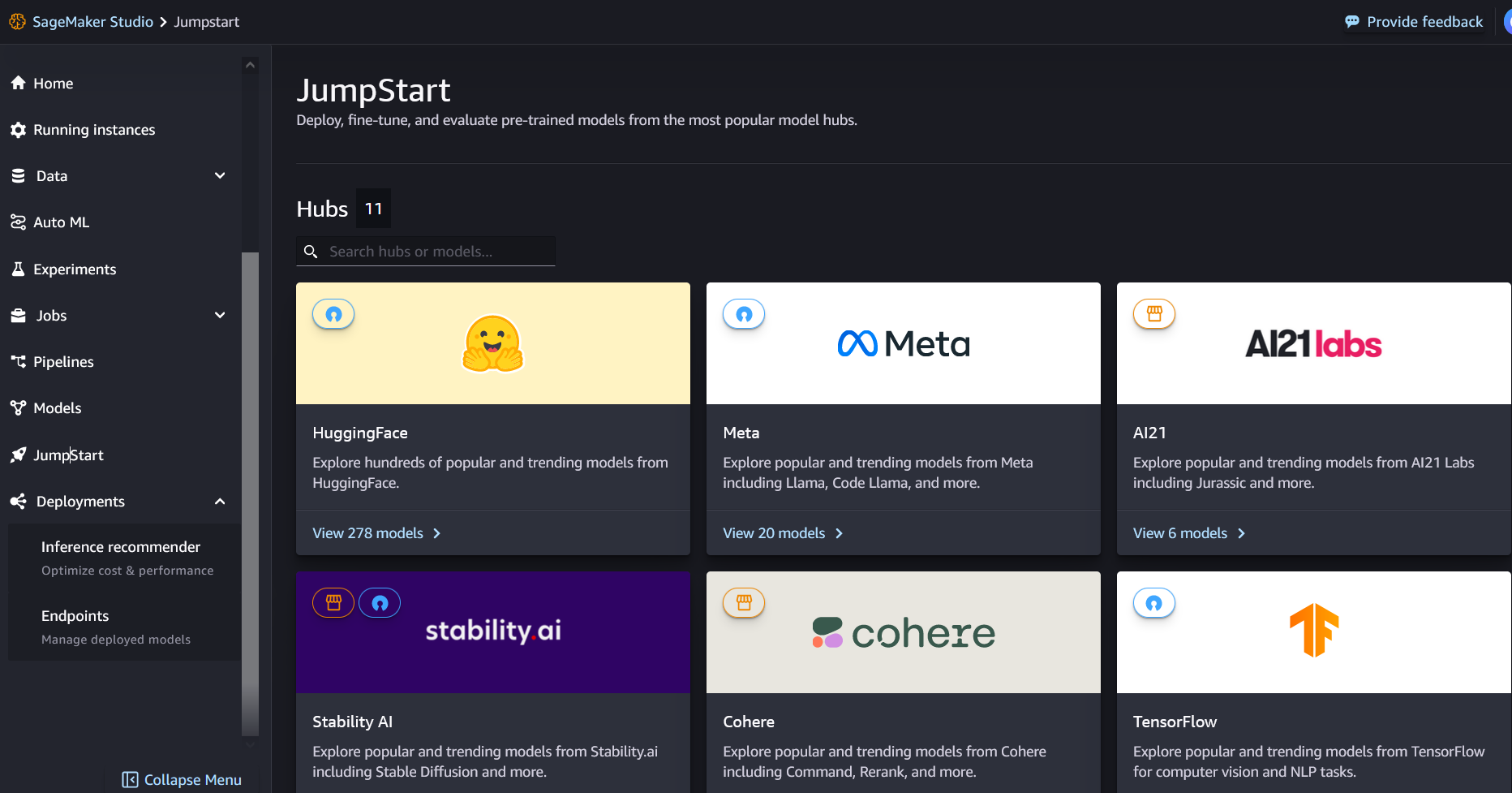

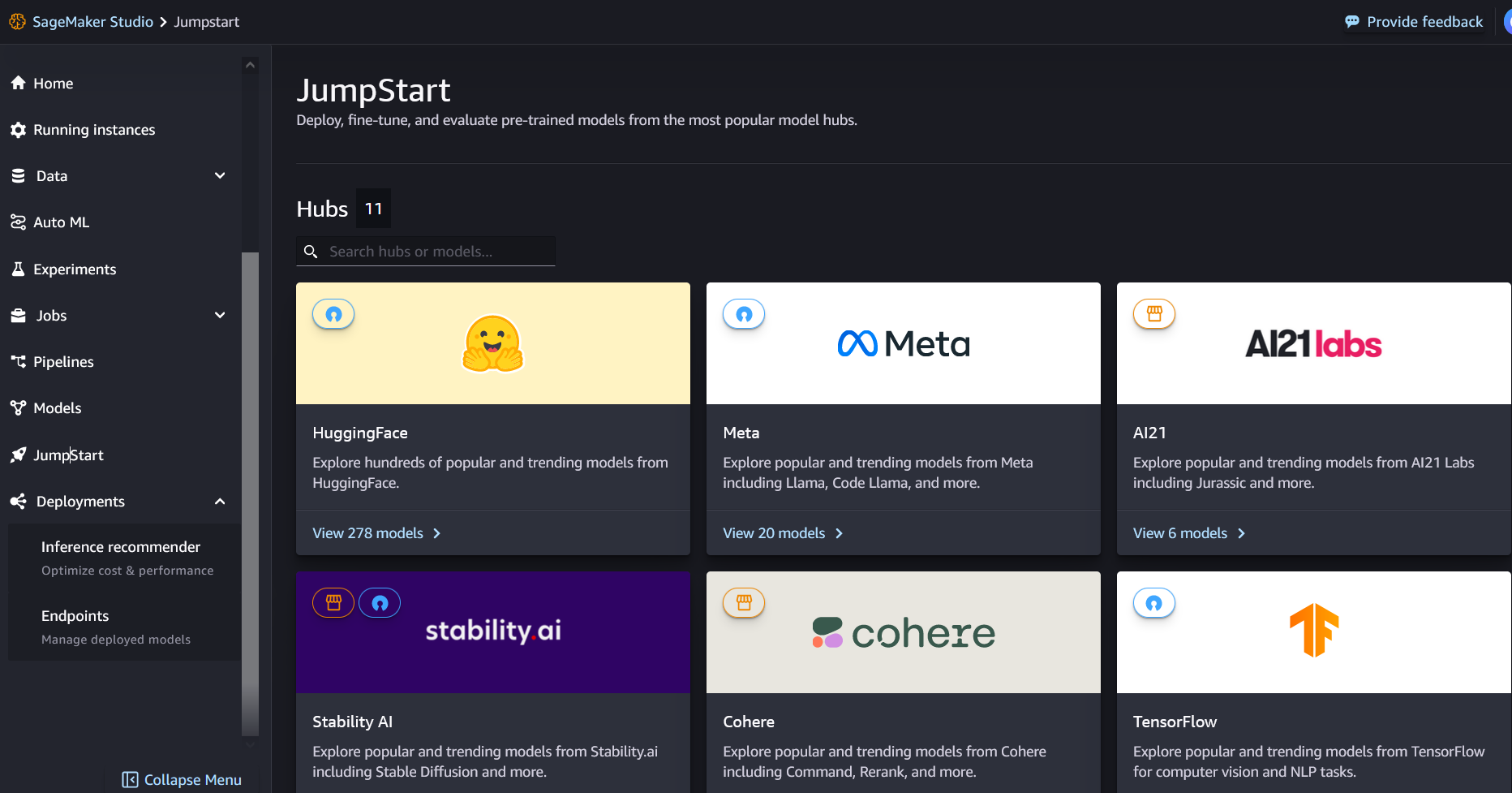

What is SageMaker JumpStart

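With SageMaker JumpStart, ML practitioners can choose from a growing list of best-performing foundation models. ML practitioners can deploy foundation models to dedicated Amazon SageMaker instances within a network-isolated environment, and customize models using SageMaker for model training and deployment.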

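You can now discover and deploy Mixtral-8x7B with a few clicks in Amazon SageMaker Studio or programmatically through the SageMaker Python SDK. This lets you derive model performance and MLOps controls with SageMaker features such as Amazon SageMaker Pipelines, Amazon SageMaker Debugger, or container logs. The model is deployed in an AWS secure environment and under your VPC controls, helping ensure data security.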

Discover models

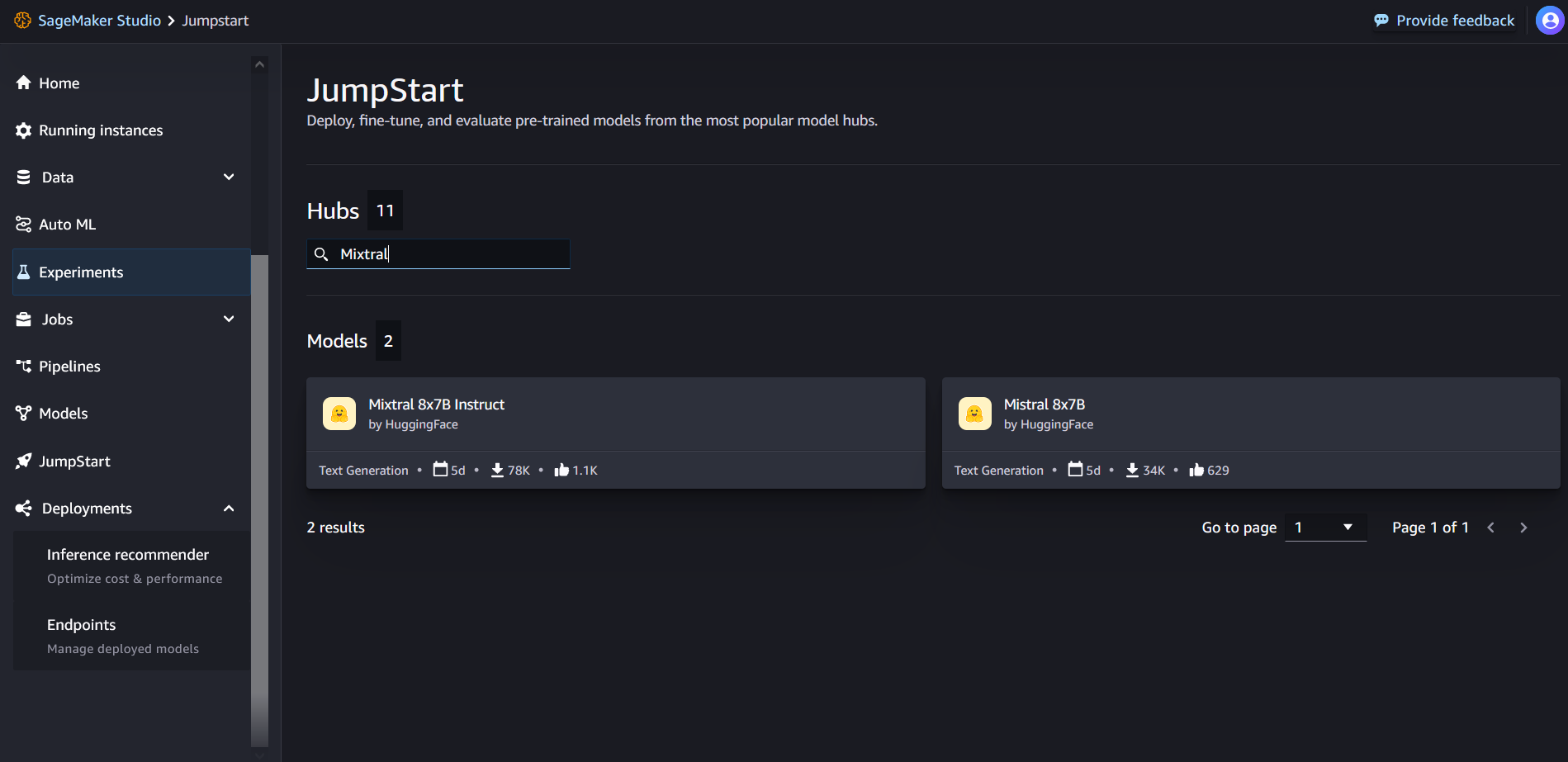

You can access the Mixtral-8x7B foundation models through SageMaker JumpStart in the SageMaker Studio UI and the SageMaker Python SDK. In this section, we go over how to discover the models in SageMaker Studio.

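SageMaker Studio is an integrated development environment (IDE) that provides a single web-based visual interface where you can access purpose-built tools to perform all ML development steps, from preparing data to building, training, and deploying your ML models. For more details on how to get started and set up SageMaker Studio, refer to Amazon SageMaker Studio.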

In SageMaker Studio, you can access SageMaker JumpStart by choosing JumpStart in the navigation pane.

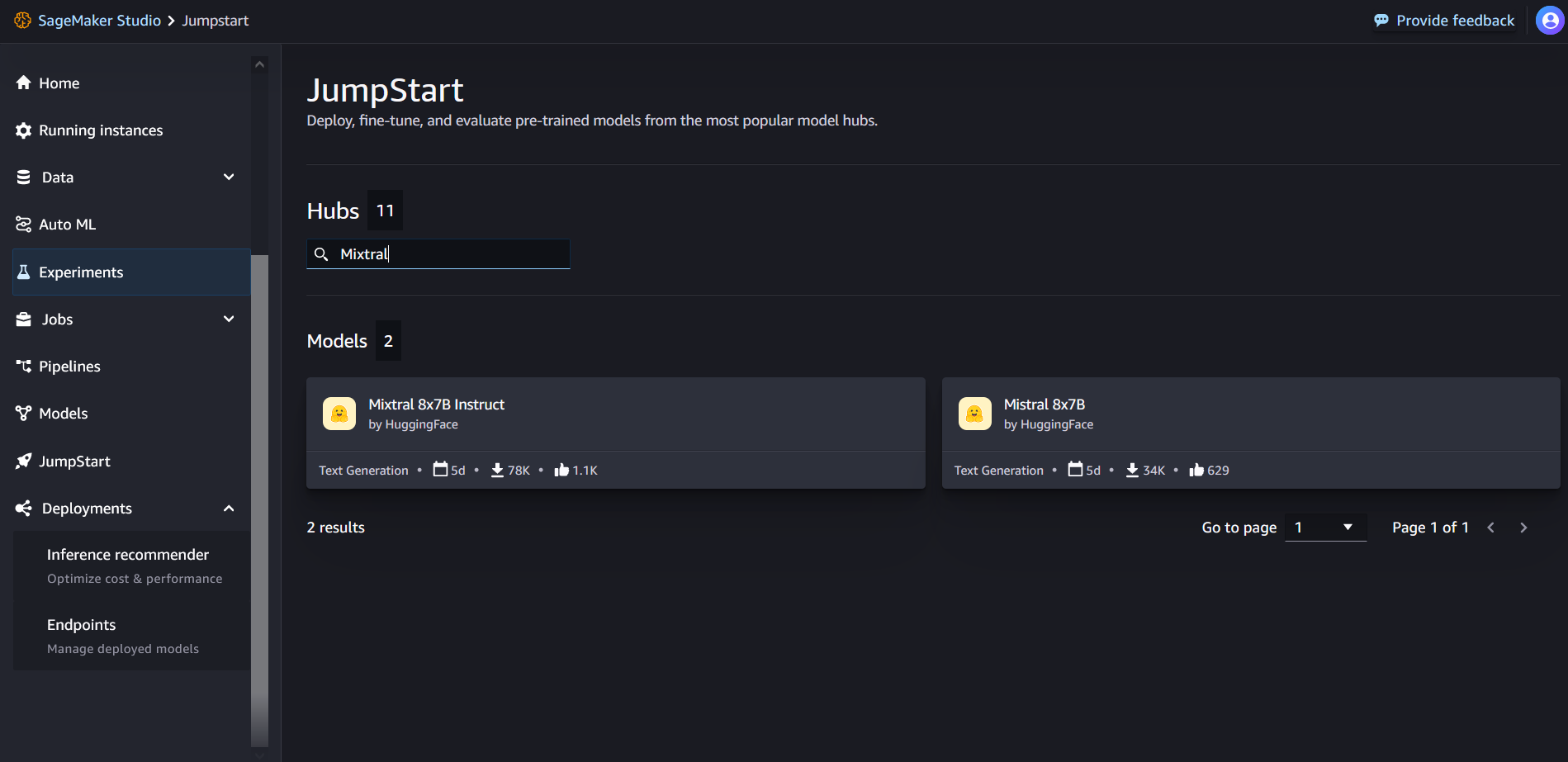

From the SageMaker JumpStart landing page, you can search for "Mixtral" in the search box. The search results show Mixtral 8x7B and Mixtral 8x7B Instruct.

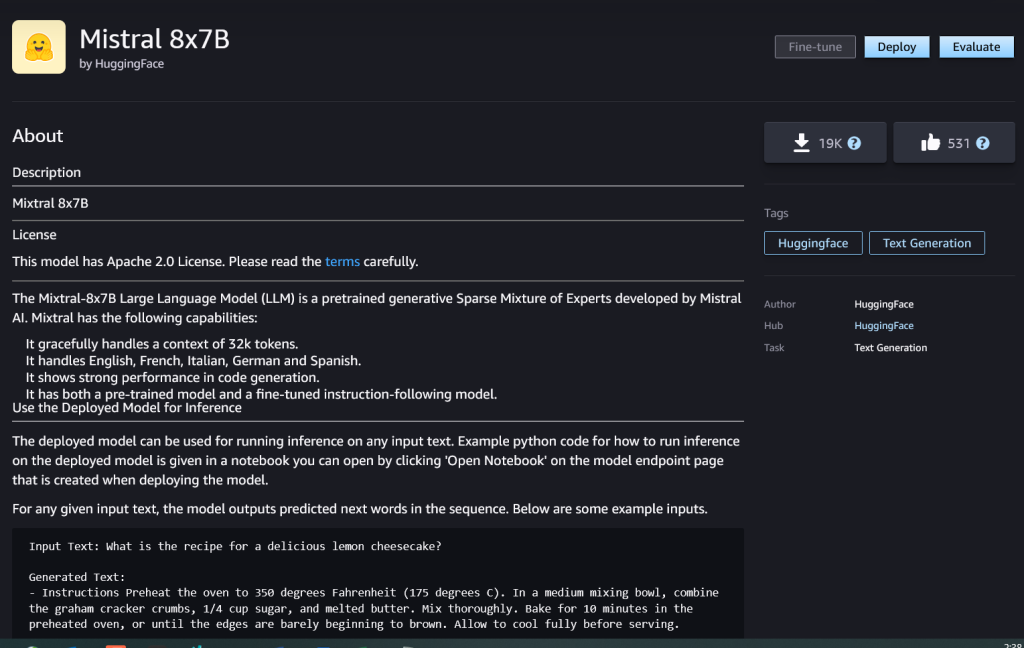
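The same search can be done from a notebook. The following sketch assumes the SageMaker Python SDK's list_jumpstart_models helper in sagemaker.jumpstart.notebook_utils returns a list of model ID strings (check this against your SDK version), and simply filters those IDs for a substring. find_model_ids and search_jumpstart are hypothetical helper names used for illustration.

```python
from typing import Iterable, List

def find_model_ids(model_ids: Iterable[str], query: str) -> List[str]:
    """Return the model IDs that contain `query`, ignoring case."""
    q = query.lower()
    return [m for m in model_ids if q in m.lower()]

def search_jumpstart(query: str) -> List[str]:
    # Imported lazily so find_model_ids works without the SageMaker SDK installed
    from sagemaker.jumpstart.notebook_utils import list_jumpstart_models
    return find_model_ids(list_jumpstart_models(), query)

# In a SageMaker Studio notebook:
# print(search_jumpstart("mixtral"))
```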

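You can choose the model card to view details about the model, such as the license, the data used to train it, and how to use it. You will also find the Deploy button, which you can use to deploy the model and create an endpoint.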

Deploy a model

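Deployment starts when you choose Deploy. After deployment finishes, an endpoint has been created. You can test the endpoint by passing a sample inference request payload or by selecting the testing option using the SDK. When you select the option to use the SDK, you will see example code that you can use in your preferred notebook editor in SageMaker Studio.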
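An endpoint can also be tested outside Studio through the low-level SageMaker Runtime API in boto3. The following is a minimal sketch that assumes the endpoint accepts and returns JSON in the same shape as the payloads used with the predictor in this post. The endpoint name is a placeholder you must replace, and encode_payload and invoke_json are hypothetical helpers.

```python
import json
from typing import Any, Dict

def encode_payload(payload: Dict[str, Any]) -> bytes:
    """Serialize an inference request as UTF-8 JSON."""
    return json.dumps(payload).encode("utf-8")

def invoke_json(endpoint_name: str, payload: Dict[str, Any]) -> Any:
    # boto3 is imported lazily so encode_payload can be used without AWS credentials
    import boto3
    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Accept="application/json",
        Body=encode_payload(payload),
    )
    # The body is a streaming object; read it fully and decode the JSON
    return json.loads(response["Body"].read())

# Usage (replace the placeholder with your endpoint's name):
# invoke_json("<your-endpoint-name>", {"inputs": "Hello!"})
```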

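To deploy using the SDK, we start by selecting the Mixtral-8x7B model, specified by the model_id with value huggingface-llm-mixtral-8x7b. You can deploy any of the selected models on SageMaker with the following code. Similarly, you can deploy Mixtral-8x7B Instruct using its own model ID: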

from sagemaker.jumpstart.model import JumpStartModel

model = JumpStartModel(model_id="huggingface-llm-mixtral-8x7b")

predictor = model.deploy()

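This deploys the model on SageMaker with default configurations, including the default instance type and default VPC configurations. You can change these configurations by specifying non-default values in JumpStartModel.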
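For example, here is a minimal sketch of overriding the instance type. It assumes ml.g5.48xlarge is a supported instance type for this model in your Region; that value is an assumption, not one given in this post, so verify it on the model card. DEPLOY_CONFIG and deploy_mixtral are hypothetical names.

```python
from typing import Any, Dict

# Hypothetical non-default settings; confirm the instance type on the model card
DEPLOY_CONFIG: Dict[str, Any] = {
    "model_id": "huggingface-llm-mixtral-8x7b",
    "instance_type": "ml.g5.48xlarge",  # assumption, not taken from this post
}

def deploy_mixtral(config: Dict[str, Any] = DEPLOY_CONFIG):
    # Imported lazily so the configuration can be inspected without the SageMaker SDK
    from sagemaker.jumpstart.model import JumpStartModel
    model = JumpStartModel(model_id=config["model_id"], instance_type=config["instance_type"])
    return model.deploy()
```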

After the model is deployed, you can run inference against the endpoint through the SageMaker predictor:

payload = {"inputs": "Hello!"}

predictor.predict(payload)
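The response is a list of dictionaries with a generated_text field, which is what the print_instructions function later in this post reads via response[0]['generated_text']. The following sketch wraps request construction and response parsing. build_payload and extract_text are hypothetical helpers, and the limit check assumes the 32,000-token context length quoted earlier; it only checks max_new_tokens, because counting prompt tokens would require the model's tokenizer.

```python
from typing import Any, Dict, List

CONTEXT_LENGTH = 32_000  # context length quoted in this post

def build_payload(prompt: str, max_new_tokens: int = 200, **parameters: Any) -> Dict[str, Any]:
    """Build a request body with `inputs` and generation `parameters`."""
    if not 0 < max_new_tokens < CONTEXT_LENGTH:
        raise ValueError("max_new_tokens must be positive and below the context length")
    return {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens, **parameters}}

def extract_text(response: List[Dict[str, str]]) -> str:
    """Return the generated text from the first result in the response."""
    return response[0]["generated_text"]

# Usage with the predictor created above:
# text = extract_text(predictor.predict(build_payload("Hello!", max_new_tokens=50)))
```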

Example prompts

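You can interact with a Mixtral-8x7B model like any standard text generation model: the model processes an input sequence and outputs the predicted next words in the sequence. In this section, we provide example prompts.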
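This generation process is autoregressive: each predicted token is appended to the sequence and fed back in as input. The following toy sketch shows greedy decoding over a hypothetical hard-coded table of next-token scores standing in for the model. A real deployment may sample instead of always taking the top token (for example with the do_sample and temperature parameters used later in this post), and generation also stops when max_new_tokens is reached, which is why some outputs below end mid-sentence.

```python
from typing import Dict, List

# Hypothetical next-token scores standing in for a language model
BIGRAMS: Dict[str, Dict[str, float]] = {
    "<s>": {"Hello": 0.9, "Hi": 0.1},
    "Hello": {"there": 0.6, "!": 0.4},
    "there": {"!": 0.8, "friend": 0.2},
    "!": {"</s>": 1.0},
}

def greedy_generate(prompt: List[str], max_new_tokens: int) -> List[str]:
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = BIGRAMS.get(tokens[-1])
        if not scores:
            break  # no known continuation for the last token
        next_token = max(scores, key=scores.get)  # greedy: highest-scoring token
        if next_token == "</s>":
            break  # end-of-sequence token stops generation
        tokens.append(next_token)  # feed the prediction back in
    return tokens

print(greedy_generate(["<s>"], max_new_tokens=10))  # ['<s>', 'Hello', 'there', '!']
print(greedy_generate(["<s>"], max_new_tokens=2))   # ['<s>', 'Hello', 'there']
```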

Code generation

Using the preceding example, we can use code generation prompts like the following:

# Code generation
payload = {
    "inputs": "Write a program to compute factorial in python:",
    "parameters": {
        "max_new_tokens": 200,
    },
}
predictor.predict(payload)

You get the following output:

Input Text: Write a program to compute factorial in python:

Generated Text:

Factorial of a number is the product of all the integers from 1 to that number.

For example, factorial of 5 is 1*2*3*4*5 = 120.

Factorial of 0 is 1.

Factorial of a negative number is not defined.

The factorial of a number can be written as n!.

For example, 5! = 120.

## Write a program to compute factorial in python

```
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n-1)

print(factorial(5))
```

Output:

```

120

```

## Explanation:

In the above program, we have defined a function called factorial which takes a single argument n.

If n is equal to 0, then we return 1.

Otherwise, we return n multiplied by the factorial of n-1.

We then call the factorial function with the argument 5 and print the result.

The output of the program is 120, which is the factorial of 5.

## How to compute factorial in python

In the above program, we have used a recursive function to compute the factorial of a number.

A recursive function is a function that calls itself.

In this case, the factorial function calls itself with the argument n-1.

This process continues until n is equal to 0, at which point the function returns 1.

The factorial of a number can also be computed using a loop.

For example, the following program uses a for loop to compute the factorial of a number:

```
def factorial(n):
    result = 1
    for i in range(1, n+1):
        result *= i
    return result
```

Sentiment analysis prompts

You can perform sentiment analysis with Mixtral 8x7B using prompts like the following:

payload = {
    "inputs": """
Tweet: "I hate it when my phone battery dies."
Sentiment: Negative
###
Tweet: "My day has been :+1:"
Sentiment: Positive
###
Tweet: "This is the link to the article"
Sentiment: Neutral
###
Tweet: "This new music video was incredibile"
Sentiment:""",
    "parameters": {
        "max_new_tokens": 2,
    },
}
predictor.predict(payload)

You get the following output:

Input Text: Tweet: "I hate it when my phone battery dies."

Sentiment: Negative

###

Tweet: "My day has been :+1:"

Sentiment: Positive

###

Tweet: "This is the link to the article"

Sentiment: Neutral

###

Tweet: "This new music video was incredibile"

Sentiment:

Generated Text: Positive
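As the echoed input text shows, this few-shot prompt follows a fixed pattern: labeled examples separated by ###, then the new tweet with an empty Sentiment: slot for the model to fill. Here is a minimal sketch of building such a prompt from labeled data. few_shot_prompt is a hypothetical helper, and unlike the hand-written prompt it does not start with a newline.

```python
from typing import List, Tuple

def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Join labeled tweets with ### separators and leave the final label blank."""
    blocks = [f'Tweet: "{tweet}"\nSentiment: {label}' for tweet, label in examples]
    blocks.append(f'Tweet: "{query}"\nSentiment:')
    return "\n###\n".join(blocks)

examples = [
    ("I hate it when my phone battery dies.", "Negative"),
    ("My day has been :+1:", "Positive"),
    ("This is the link to the article", "Neutral"),
]
payload = {
    "inputs": few_shot_prompt(examples, "This new music video was incredibile"),
    # Short budget: only a one-word label is expected
    "parameters": {"max_new_tokens": 2},
}
```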

Question answering prompts

You can use a question answering prompt like the following with Mixtral-8x7B:

# Question answering
payload = {
    "inputs": "Could you remind me when was the C programming language invented?",
    "parameters": {
        "max_new_tokens": 100,
    },
}
predictor.predict(payload)

You get the following output:

Input Text: Could you remind me when was the C programming language invented?

Generated Text:

C was invented in 1972 by Dennis Ritchie at Bell Labs.

C is a general-purpose programming language. It was invented to write the UNIX operating system.

C is a structured programming language. It is a middle-level language. It is a procedural language.

C is a compiled language. It is a portable language.

C is a case-sensitive language. It is a free-form language

Mixtral-8x7B Instruct

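The instruction-tuned version of Mixtral-8x7B accepts formatted instructions where conversation roles must start with a user prompt and alternate between user instruction and assistant (model answer). The instruction format must be followed strictly; otherwise, the model will generate suboptimal outputs. The template used to build a prompt for the Instruct model is defined as follows: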

<s> [INST] Instruction [/INST] Model answer</s> [INST] Follow-up instruction [/INST]

Note that <s> and </s> are special tokens for beginning of string (BOS) and end of string (EOS), while [INST] and [/INST] are regular strings.

The following code shows how you can format the prompt in instruction format:

from typing import Dict, List

def format_instructions(instructions: List[Dict[str, str]]) -> str:
    """Format instructions where conversation roles must alternate user/assistant/user/assistant/..."""
    prompt: List[str] = []
    # Each completed exchange: a user turn followed by the assistant's answer
    for user, answer in zip(instructions[::2], instructions[1::2]):
        prompt.extend(["<s>", "[INST] ", (user["content"]).strip(), " [/INST] ", (answer["content"]).strip(), "</s>"])
    # The last message (expected to be a user turn) is appended after the completed exchanges
    prompt.extend(["<s>", "[INST] ", (instructions[-1]["content"]).strip(), " [/INST] ", "</s>"])
    return "".join(prompt)

def print_instructions(prompt: str, response: List[Dict[str, str]]) -> None:
    # ANSI escape codes that print the section labels in bold
    bold, unbold = "\033[1m", "\033[0m"
    print(f"{bold}> Input{unbold}\n{prompt}\n\n{bold}> Output{unbold}\n{response[0]['generated_text']}\n")
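The format_instructions function opens every turn with <s> and closes the final user turn with </s>, whereas the template shown earlier has a single <s> at the start and leaves the last [/INST] open for the model to answer. If you want a prompt with that structure, the following is a minimal sketch; format_mistral_prompt is a hypothetical name, its spacing follows the existing function rather than the template character for character, and it also checks that roles alternate and that the conversation ends with a user turn.

```python
from typing import Dict, List

def format_mistral_prompt(messages: List[Dict[str, str]]) -> str:
    """Build '<s>[INST] u1 [/INST] a1</s>[INST] u2 [/INST]' from alternating turns."""
    if not messages or len(messages) % 2 == 0:
        raise ValueError("conversation must have an odd number of turns, ending with the user")
    for i, message in enumerate(messages):
        expected = "user" if i % 2 == 0 else "assistant"
        if message["role"] != expected:
            raise ValueError(f"turn {i} should have role {expected!r}")
    parts = ["<s>"]  # a single beginning-of-string token
    for user, answer in zip(messages[::2], messages[1::2]):
        # Each completed exchange ends with the end-of-string token
        parts.append(f"[INST] {user['content'].strip()} [/INST] {answer['content'].strip()}</s>")
    # The final user turn is left open so the model writes the answer
    parts.append(f"[INST] {messages[-1]['content'].strip()} [/INST]")
    return "".join(parts)
```

For example, a user/assistant/user conversation of "Hi", "Hello!", "Bye" yields <s>[INST] Hi [/INST] Hello!</s>[INST] Bye [/INST].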

Knowledge retrieval

You can use the following code for a knowledge retrieval prompt:

instructions = [{"role": "user", "content": "what is the recipe of mayonnaise?"}]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 315, "do_sample": True},
}
response = predictor.predict(payload)
print_instructions(prompt, response)

You get the following output:

> Input

<s>[INST] what is the recipe of mayonnaise? [/INST]</s>

> Output

Here is a simple recipe for homemade mayonnaise:

Ingredients:

* 1 egg yolk

* 1 tablespoon of mustard (Dijon is a good choice)

* 1 cup of oil (canola or vegetable oil work well)

* 1-2 tablespoons of white vinegar or lemon juice

* Salt to taste

Instructions:

1. In a medium bowl, whisk together the egg yolk and mustard. It is important that these ingredients are at room temperature.

2. Start adding the oil drop by drop, whisking continuously. This step is crucial to ensure that the mayonnaise emulsifies and does not separate.

3. Once you have added about a quarter of the oil and the mixture has thickened, you can start adding the oil in a thin stream, still whisking constantly.

4. When all the oil has been incorporated and the mayonnaise is thick, whisk in the vinegar or lemon juice and salt to taste.

5. If the mayonnaise is too thick, you can thin it with a little water.

6. Store the mayonnaise in the refrigerator and use within a few days.

Note: It is important to use pasteurized eggs or egg yolks when making homemade mayonnaise to reduce the risk of foodborne illness.

Coding

Mixtral models have shown benchmarked strengths on coding tasks. The following code sends a Bash question:

instructions = [
    {
        "role": "user",
        "content": "In Bash, how do I list all text files in the current directory (excluding subdirectories) that have been modified in the last month?",
    }
]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 256, "do_sample": True, "temperature": 0.2},
}
response = predictor.predict(payload)
print_instructions(prompt, response)

You get the following output:

> Input

<s>[INST] In Bash, how do I list all text files in the current directory (excluding subdirectories) that have been modified in the last month? [/INST]</s>

> Output

To list all text files in the current directory that have been modified in the last month, you can use a combination of the `find` and `grep` commands in Bash. Here's the command you're looking for:

```bash

find . -maxdepth 1 -type f -name "*.txt" -mtime -30

```

Let's break down this command:

- `find .` starts a search in the current directory.

- `-maxdepth 1` limits the search to the current directory only (excluding subdirectories).

- `-type f` specifies that you're looking for files.

- `-name "*.txt"` filters the results to only include files with a `.txt` extension.

- `-mtime -30` filters the results to only include files modified within the last 30 days.

This command will output the paths of all text files in the current directory that have been modified in the last month.
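To cross-check the generated command, the following sketch reproduces its filters in Python with the standard library: top-level entries only, regular files (symlinks not followed), names matching *.txt case-sensitively, and modification times less than 30 days old. is_recent_text_file and recent_text_files are hypothetical helpers, and reading -mtime -30 as "modified less than 30 x 24 hours ago" is our interpretation of the find semantics. Unlike find, the sketch returns bare file names, sorted, rather than ./-prefixed paths.

```python
import fnmatch
import os
import time
from typing import List, Optional

SECONDS_PER_DAY = 24 * 60 * 60

def is_recent_text_file(name: str, is_regular_file: bool, mtime: float,
                        now: float, days: int = 30) -> bool:
    """Apply the -type f, -name "*.txt", and -mtime -DAYS filters to one entry."""
    return (
        is_regular_file                            # -type f
        and fnmatch.fnmatchcase(name, "*.txt")     # -name is case-sensitive
        and now - mtime < days * SECONDS_PER_DAY   # modified less than DAYS * 24 hours ago
    )

def recent_text_files(directory: str = ".", days: int = 30,
                      now: Optional[float] = None) -> List[str]:
    """Approximate `find DIRECTORY -maxdepth 1 -type f -name "*.txt" -mtime -DAYS`."""
    now = time.time() if now is None else now
    names = []
    with os.scandir(directory) as entries:  # top level only, like -maxdepth 1
        for entry in entries:
            regular = entry.is_file(follow_symlinks=False)
            mtime = entry.stat(follow_symlinks=False).st_mtime
            if is_recent_text_file(entry.name, regular, mtime, now, days):
                names.append(entry.name)
    return sorted(names)

# Usage:
# print(recent_text_files("."))
```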

Math and reasoning

Mixtral models are also reported to perform well on math accuracy. The following code sends a word problem:

instructions = [
    {
        "role": "user",
        "content": "I bought an ice cream for 6 kids. Each cone was $1.25 and I paid with a $10 bill. How many dollars did I get back? Explain first before answering.",
    }
]
prompt = format_instructions(instructions)
payload = {
    "inputs": prompt,
    "parameters": {"max_new_tokens": 600, "do_sample": True, "temperature": 0.2},
}
response = predictor.predict(payload)
print_instructions(prompt, response)

As the following output shows, the Mixtral model can work through the math logic step by step:

> Input

<s>[INST] I bought an ice cream for 6 kids. Each cone was $1.25 and I paid with a $10 bill. How many dollars did I get back? Explain first before answering. [/INST] </s>

> Output

First, let's calculate the total cost of the ice cream cones. Since each cone costs $1.25 and you bought 6 cones, the total cost would be:

Total cost = Cost per cone * Number of cones

Total cost = $1.25 * 6

Total cost = $7.50

Next, subtract the total cost from the amount you paid with the $10 bill to find out how much change you got back:

Change = Amount paid - Total cost

Change = $10 - $7.50

Change = $2.50

So, you got $2.50 back.
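The arithmetic in this answer is easy to verify independently. The following check uses Python's decimal module so the currency amounts are not subject to binary floating-point rounding:

```python
from decimal import Decimal

cone_price = Decimal("1.25")
kids = 6
paid = Decimal("10")

total = cone_price * kids   # cost per cone * number of cones
change = paid - total       # amount paid - total cost
print(total, change)        # 7.50 2.50
```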

Clean up

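After you're done running the notebook, delete all resources that you created in the process so that billing stops. Use the following code: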

predictor.delete_model()

predictor.delete_endpoint()
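If an error interrupts the notebook before these lines run, the endpoint keeps running and billing. One way to guard against that is to tie cleanup to a context manager. The following is a minimal sketch; temporary_endpoint is a hypothetical helper, and it only assumes the predictor exposes the delete_model() and delete_endpoint() methods used above.

```python
from contextlib import contextmanager
from typing import Any, Iterator

@contextmanager
def temporary_endpoint(predictor: Any) -> Iterator[Any]:
    """Yield the predictor, then delete its model and endpoint even if the body raises."""
    try:
        yield predictor
    finally:
        try:
            predictor.delete_model()
        finally:
            # Runs even if deleting the model fails, so the endpoint is not left behind
            predictor.delete_endpoint()

# Usage:
# with temporary_endpoint(model.deploy()) as predictor:
#     print(predictor.predict({"inputs": "Hello!"}))
```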

Conclusion

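In this post, we showed you how to get started with Mixtral-8x7B in SageMaker Studio and deploy the model for inference. Because foundation models are pretrained, they can help lower training and infrastructure costs and enable customization for your use case. Visit SageMaker JumpStart in SageMaker Studio now to get started.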


About the authors

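Rachna Chadha is a Principal Solutions Architect, AI/ML, in Strategic Accounts at AWS. Rachna is an optimist who believes that the ethical and responsible use of AI can improve society in the future and bring economic and social prosperity. In spare time, Rachna likes spending time with family, hiking, and listening to music.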


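Dr. Kyle Ulrich is an Applied Scientist with the Amazon SageMaker built-in algorithms team. His research interests include scalable machine learning algorithms, computer vision, time series, Bayesian non-parametrics, and Gaussian processes. He received his PhD from Duke University and has published papers in NeurIPS, Cell, and Neuron.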


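Christopher Whitten is a software developer on the JumpStart team. He helps scale model selection and integrate models with other SageMaker services. Chris is passionate about accelerating the ubiquity of AI across a variety of business domains.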


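Dr. Fabio Nonato de Paula is a Senior Manager, Specialist GenAI SA, helping model providers and customers scale generative AI in AWS. Fabio is passionate about democratizing access to generative AI technology. Outside of work, you can find Fabio riding his motorcycle in the hills of Sonoma Valley or reading ComiXology.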


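Dr. Ashish Khetan is a Senior Applied Scientist with Amazon SageMaker built-in algorithms and helps develop machine learning algorithms. He received his PhD from the University of Illinois Urbana-Champaign. He is an active researcher in machine learning and statistical inference, and has published many papers at NeurIPS, ICML, ICLR, JMLR, ACL, and EMNLP.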


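Karl Albertsen leads product, engineering, and science for Amazon SageMaker Algorithms and JumpStart, SageMaker's machine learning hub. He is passionate about applying machine learning to unlock business value.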
