In today’s data-driven business environment, organizations face the challenge of efficiently preparing and transforming large amounts of data for analytics and data science purposes. Businesses need to build data warehouses and data lakes based on operational data. This is driven by the need to centralize and integrate data coming from disparate sources.

At the same time, operational data often originates from applications backed by legacy data stores. Without modernization, legacy applications may incur increasing maintenance costs. Modernizing these applications typically means moving to a microservice architecture. That in turn requires consolidating data from multiple sources into an operational data store, and often means changing the underlying database engine to a modern document database such as MongoDB.

Both tasks, building data lakes or data warehouses and modernizing applications, involve moving data with an extract, transform, and load (ETL) process. A well-structured ETL job is therefore key to the success of both.

AWS Glue is a serverless data integration service that makes it easier to discover, prepare, move, and integrate data from multiple sources for analytics, machine learning (ML), and application development. MongoDB Atlas is an integrated suite of cloud database and data services. It combines transactional processing, relevance-based search, real-time analytics, and mobile-to-cloud data synchronization in an elegant and integrated architecture.

By using AWS Glue with MongoDB Atlas, organizations can streamline their ETL processes. With its fully managed, scalable, and secure database solution, MongoDB Atlas provides a flexible and reliable environment for storing and managing operational data. Together, AWS Glue ETL and MongoDB Atlas are a powerful solution for organizations looking to optimize how they build data lakes and data warehouses, and to modernize their applications, in order to improve business performance, reduce costs, and drive growth and success.

In this post, we demonstrate how to migrate data from Amazon Simple Storage Service (Amazon S3) buckets to MongoDB Atlas using AWS Glue ETL. We also show how to extract data from MongoDB Atlas into an Amazon S3-based data lake.

Solution overview

In this post, we explore the following use cases:

- Extracting data from MongoDB – MongoDB is a popular database used by thousands of customers to store application data at scale. Enterprise customers can centralize and integrate data coming from multiple data stores by building data lakes and data warehouses. This process involves extracting data from the operational data stores. When the data is in one place, customers can quickly use it for business intelligence needs or for ML.

- Ingesting data into MongoDB – MongoDB also serves as a NoSQL database for storing application data and building operational data stores. Modernizing applications often involves migrating the operational store to MongoDB, which means extracting existing data from relational databases or flat files. Mobile and web apps often require data engineers to build data pipelines that create a single view of data in Atlas while ingesting data from multiple siloed sources. During this migration, different databases must be joined to create documents. This complex join operation needs significant, one-time compute power, and developers need to build it quickly to complete the migration.
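To make the join step concrete, here is a minimal pure-Python sketch of how relational rows might be folded into documents. The tables (`customers`, `orders`), the `customer_id` key, and the helper name are all hypothetical. In an AWS Glue job, the same denormalization would run as a Spark join over much larger data.

```python
from collections import defaultdict
from typing import Any


def build_customer_documents(
    customers: list[dict[str, Any]],
    orders: list[dict[str, Any]],
) -> list[dict[str, Any]]:
    """Denormalize customer and order rows into one document per customer.

    Table and column names are hypothetical; adapt them to your schema.
    """
    # Group order rows by their foreign key so each lookup is O(1).
    orders_by_customer: dict[Any, list[dict[str, Any]]] = defaultdict(list)
    for order in orders:
        # Drop the foreign key from the embedded copy; it becomes redundant.
        embedded = {k: v for k, v in order.items() if k != "customer_id"}
        orders_by_customer[order["customer_id"]].append(embedded)

    documents = []
    for customer in customers:
        documents.append({
            # Reuse the relational primary key as the MongoDB _id.
            "_id": customer["customer_id"],
            "name": customer["name"],
            # Customers without orders get an empty array (left-join semantics).
            "orders": orders_by_customer.get(customer["customer_id"], []),
        })
    return documents
```

Embedding orders inside each customer document is one common modeling choice. If a customer can accumulate an unbounded number of orders, keeping them in a separate collection and referencing them may be a better fit.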

AWS Glue comes in handy in these cases, thanks to its pay-as-you-go model and its ability to run complex transformations across huge datasets. Developers can use AWS Glue Studio to create such data pipelines efficiently.

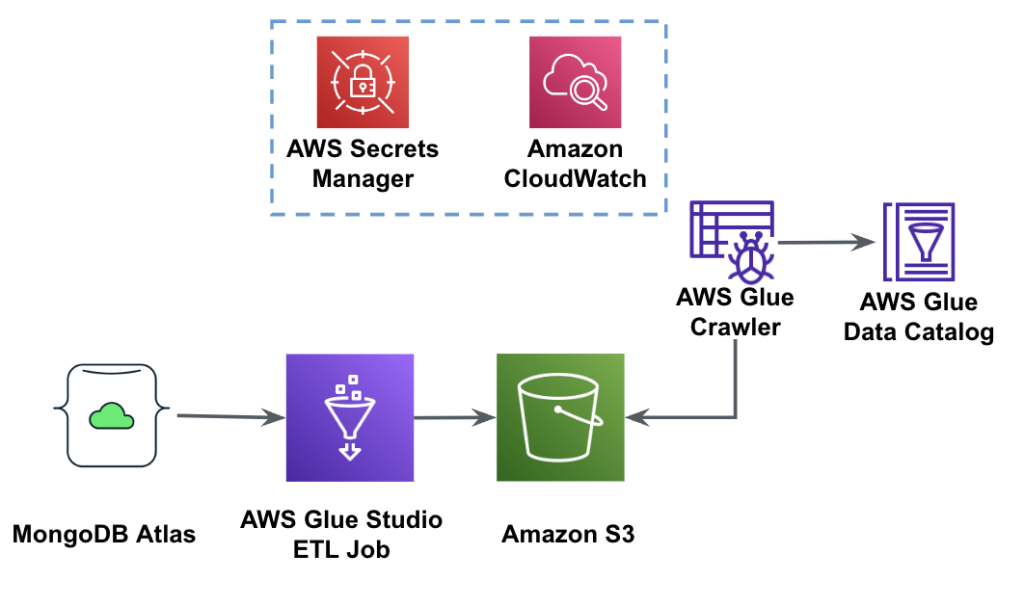

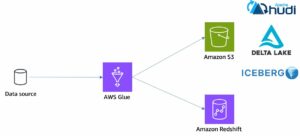

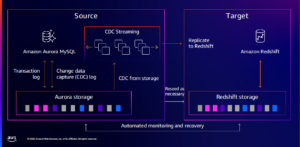

The following diagram shows the data extraction workflow from MongoDB Atlas into an S3 bucket using AWS Glue Studio.

To implement this architecture, you need a MongoDB Atlas cluster, an S3 bucket, and an AWS Identity and Access Management (IAM) role for AWS Glue. To configure these resources, refer to the prerequisite steps in the GitHub repo.

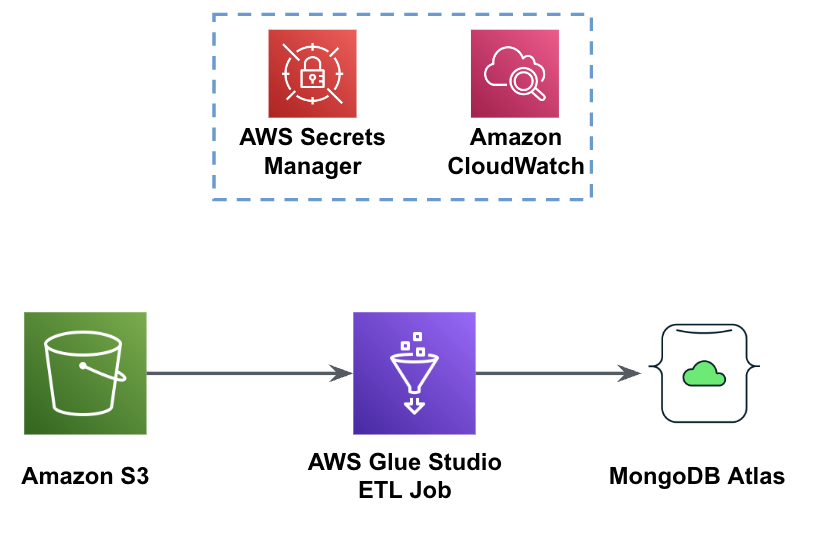

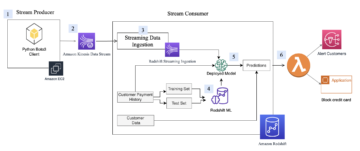

The following figure shows the data load workflow from an S3 bucket into MongoDB Atlas using AWS Glue.

The same prerequisites are needed here: an S3 bucket, IAM role, and a MongoDB Atlas cluster.

Load data from Amazon S3 to MongoDB Atlas using AWS Glue

The following steps describe how to load data from the S3 bucket into MongoDB Atlas using an AWS Glue job. The extraction process from MongoDB Atlas to Amazon S3 is very similar, with the exception of the script being used. We call out the differences between the two processes.
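The load and extract scripts differ mainly in which side is the source and which is the sink, so the difference fits in a small sketch. The `plan_job` helper below is hypothetical. The dictionaries it returns mirror the `connection_type`, `connection_options`, and `format` arguments of Glue's `create_dynamic_frame.from_options` and `write_dynamic_frame.from_options`. Note that S3 sources take a list under `paths`, while S3 sinks take a single `path`.

```python
from typing import Any


def plan_job(direction: str, mongo_options: dict[str, str], s3_path: str) -> dict[str, dict[str, Any]]:
    """Return source and sink specs for a 'load' or 'extract' job (hypothetical helper)."""
    mongo = {"connection_type": "mongodb", "connection_options": dict(mongo_options)}
    if direction == "load":
        # S3 -> MongoDB Atlas: S3 sources take a list of "paths".
        source = {"connection_type": "s3", "connection_options": {"paths": [s3_path]}, "format": "json"}
        return {"source": source, "sink": mongo}
    if direction == "extract":
        # MongoDB Atlas -> S3: S3 sinks take a single "path".
        sink = {"connection_type": "s3", "connection_options": {"path": s3_path}, "format": "json"}
        return {"source": mongo, "sink": sink}
    raise ValueError(f"direction must be 'load' or 'extract', got {direction!r}")


# Inside a Glue job (not runnable locally), the plan would be used like this:
#   plan = plan_job("load", mongo_options, "s3://my-bucket/sample.json")
#   frame = glueContext.create_dynamic_frame.from_options(**plan["source"])
#   glueContext.write_dynamic_frame.from_options(frame, **plan["sink"])
```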

- Create a free cluster in MongoDB Atlas.

- Upload the sample JSON file to your S3 bucket.

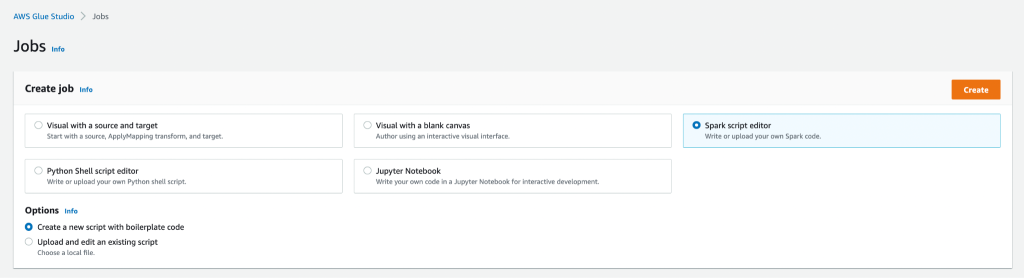
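If you prepare your own sample file instead of using the one from the repo, one option is newline-delimited JSON, with one object per line. Spark-based JSON readers generally parse this layout by default. Records that span multiple lines need Glue's `multiline` format option. The sketch below assumes that layout. The `upload_sample` helper is hypothetical and relies on boto3's `upload_file`, which needs AWS credentials.

```python
import json
from typing import Any, Iterable


def to_json_lines(records: Iterable[dict[str, Any]]) -> str:
    """Serialize records as newline-delimited JSON (one compact object per line)."""
    return "".join(json.dumps(r, separators=(",", ":")) + "\n" for r in records)


def upload_sample(path: str, bucket: str, key: str) -> None:
    """Upload a local file to S3 (hypothetical helper; needs boto3 and credentials)."""
    import boto3  # imported lazily so to_json_lines works without boto3 installed

    boto3.client("s3").upload_file(path, bucket, key)
```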

- Create a new AWS Glue Studio job with the Spark script editor option.

- Depending on whether you want to load or extract data from the MongoDB Atlas cluster, enter the load script or extract script in the AWS Glue Studio script editor.

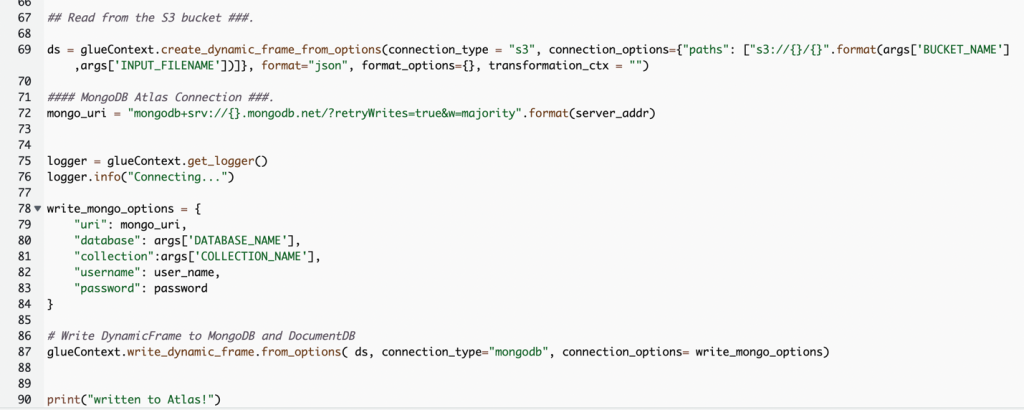

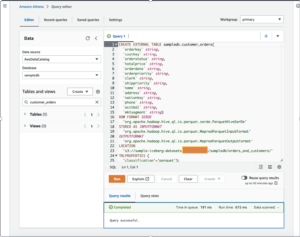

The following screenshot shows a code snippet for loading data into the MongoDB Atlas cluster.

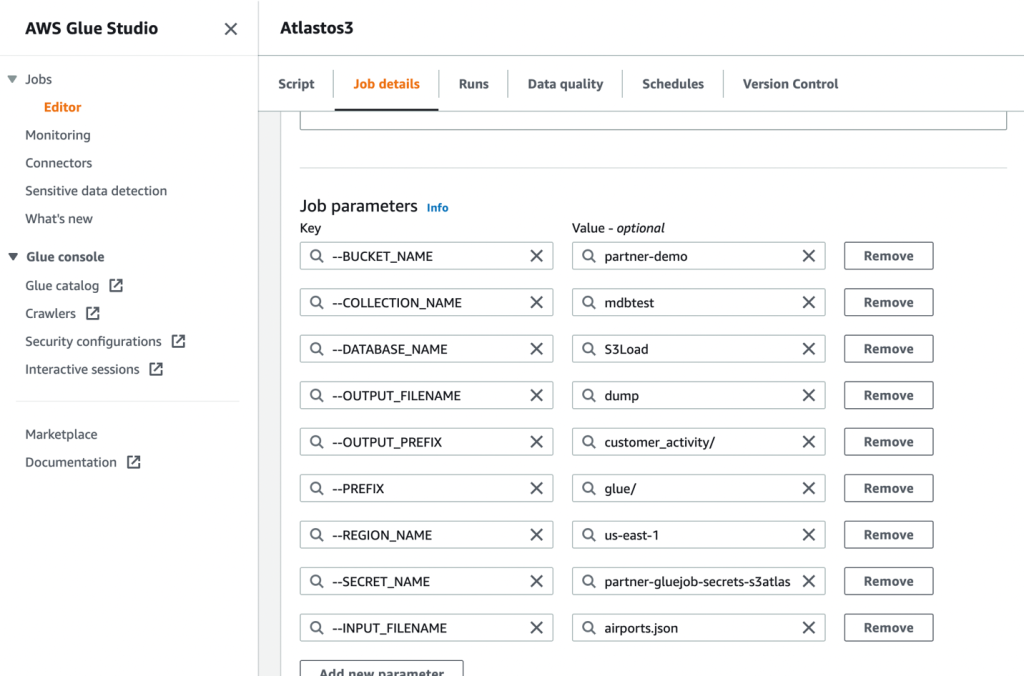

The code uses AWS Secrets Manager to retrieve the MongoDB Atlas cluster name, user name, and password. It then creates a DynamicFrame for the S3 bucket and file name, which are passed to the script as parameters, and retrieves the database and collection names from the job parameters configuration. Finally, it writes the DynamicFrame to the MongoDB Atlas cluster using the retrieved parameters.
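The credentials step can be sketched as a pure function. This sketch assumes the secret is stored as a JSON string with the hypothetical keys `server_addr`, `username`, and `password`; the repo's actual key names may differ. It builds the `connection_options` dictionary used by Glue's MongoDB connector (`connection.uri`, `username`, `password`, `database`, `collection`). Atlas clusters are usually addressed with a `mongodb+srv://` URI, so confirm that your Glue version's connector accepts SRV URIs.

```python
import json


def mongo_options_from_secret(secret_string: str, database: str, collection: str) -> dict[str, str]:
    """Build Glue MongoDB connection options from a Secrets Manager secret string.

    The secret keys (server_addr, username, password) are hypothetical.
    """
    secret = json.loads(secret_string)
    missing = [k for k in ("server_addr", "username", "password") if k not in secret]
    if missing:
        raise KeyError(f"secret is missing keys: {missing}")
    return {
        # Credentials go in separate options rather than being embedded in the URI.
        "connection.uri": f"mongodb+srv://{secret['server_addr']}",
        "username": secret["username"],
        "password": secret["password"],
        "database": database,
        "collection": collection,
    }


# In the Glue job, the secret string would come from Secrets Manager, e.g.:
#   import boto3
#   secret_string = boto3.client("secretsmanager").get_secret_value(
#       SecretId="my-atlas-secret")["SecretString"]  # secret name is hypothetical
```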

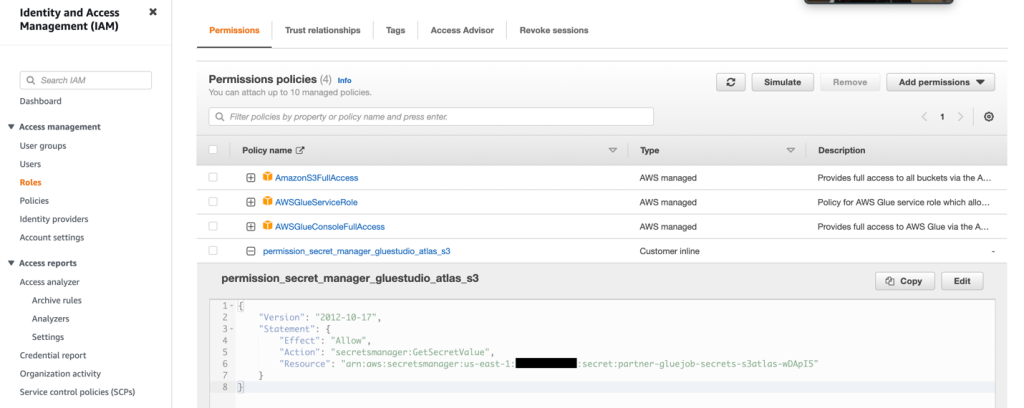

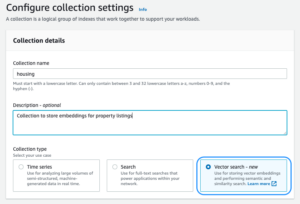

- Create an IAM role with the permissions as shown in the following screenshot.

For more details, refer to Configure an IAM role for your ETL job.
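As a rough sketch of what such a role contains, assume the job only needs to read and write one bucket and read one secret. The role then needs a trust policy that lets `glue.amazonaws.com` assume it, plus a narrow inline permissions policy. The bucket name and secret ARN are placeholders, and the screenshot in this post may grant broader permissions. In practice, you would typically also attach the AWS managed `AWSGlueServiceRole` policy so the job can write CloudWatch logs.

```python
def glue_trust_policy() -> dict:
    """Trust policy that lets the AWS Glue service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "glue.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def glue_job_permissions(bucket: str, secret_arn: str) -> dict:
    """Least-privilege sketch of inline permissions; bucket and ARN are placeholders."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read the input file and write extracted output.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
            {   # Listing is granted on the bucket itself, not on its objects.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {   # Fetch the Atlas credentials at run time.
                "Effect": "Allow",
                "Action": "secretsmanager:GetSecretValue",
                "Resource": secret_arn,
            },
        ],
    }
```

Both dictionaries can be serialized with `json.dumps`. You can then pass them to boto3's `iam.create_role` (as `AssumeRolePolicyDocument`) and `iam.put_role_policy` (as `PolicyDocument`).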

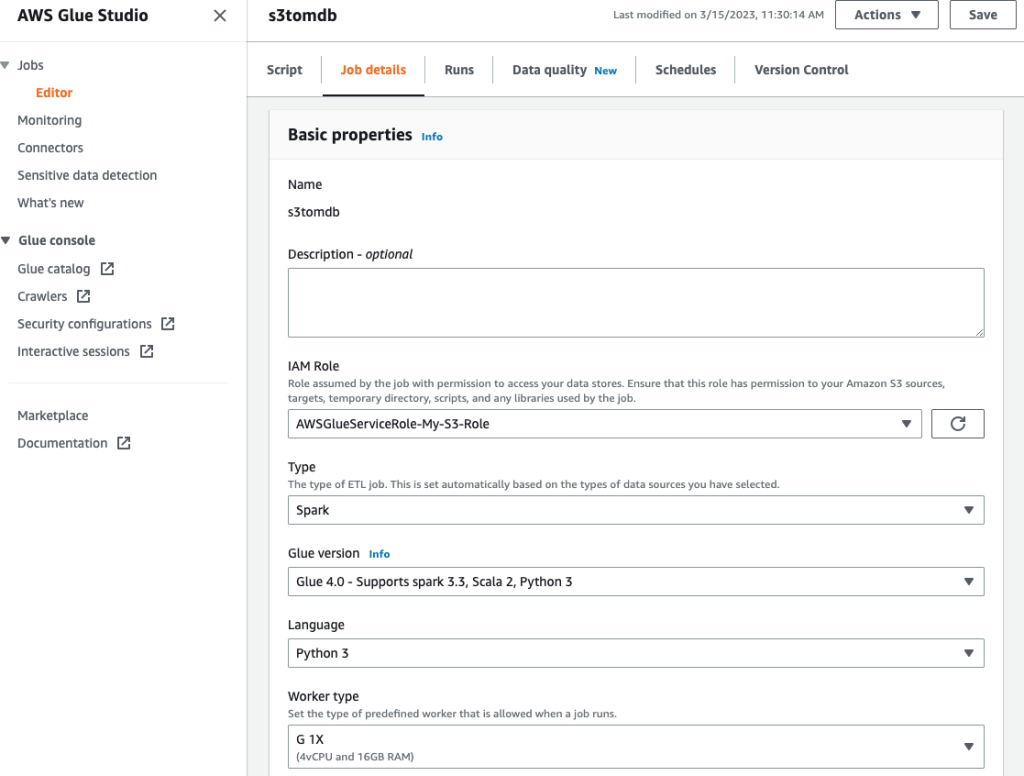

- Give the job a name and, on the Job details tab, supply the IAM role created in the previous step.

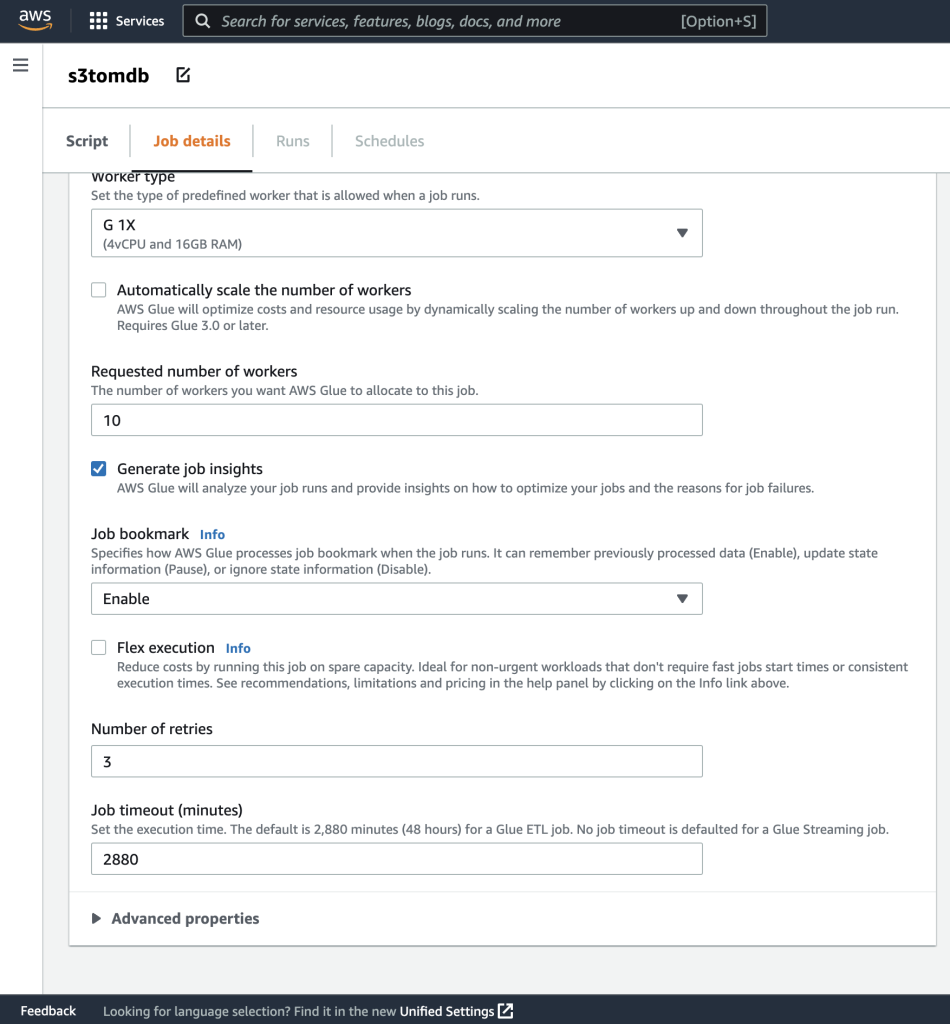

- You can leave the rest of the parameters as default, as shown in the following screenshots.

- Next, define the job parameters that the script uses and supply the default values.

- Save the job and run it.
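AWS Glue passes job parameters to the script as `--NAME value` command-line arguments. A Glue script normally reads them with `awsglue.utils.getResolvedOptions(sys.argv, [...])`. Because `awsglue` is only available inside Glue, the sketch below uses an `argparse` stand-in so you can test the parsing locally. It also includes a helper that builds the `Arguments` map for boto3's `glue.start_job_run`, whose values override the job's defaults for that run. The parameter names and job name are hypothetical.

```python
import argparse

# Hypothetical parameter names; use the names your script actually expects.
JOB_PARAMS = ["BUCKET_NAME", "INPUT_FILENAME", "DATABASE_NAME", "COLLECTION_NAME"]


def resolve_options(argv: list[str], names: list[str]) -> dict[str, str]:
    """Local stand-in for awsglue.utils.getResolvedOptions.

    Each requested name must appear as a "--NAME value" pair; other
    arguments Glue adds (for example --JOB_NAME) are ignored.
    """
    # allow_abbrev=False stops argparse from matching unknown flags by prefix.
    parser = argparse.ArgumentParser(allow_abbrev=False)
    for name in names:
        parser.add_argument(f"--{name}", required=True)
    known, _extra = parser.parse_known_args(argv)
    return vars(known)


def run_arguments(overrides: dict[str, str]) -> dict[str, str]:
    """Build the Arguments map for glue.start_job_run; keys need the leading "--"."""
    return {f"--{name}": value for name, value in overrides.items()}


# Inside Glue:  args = getResolvedOptions(sys.argv, JOB_PARAMS)
# Locally:      args = resolve_options(sys.argv[1:], JOB_PARAMS)
# Starting a run with one overridden default:
#   boto3.client("glue").start_job_run(
#       JobName="atlas-load-job", Arguments=run_arguments({"DATABASE_NAME": "sample_db"}))
```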

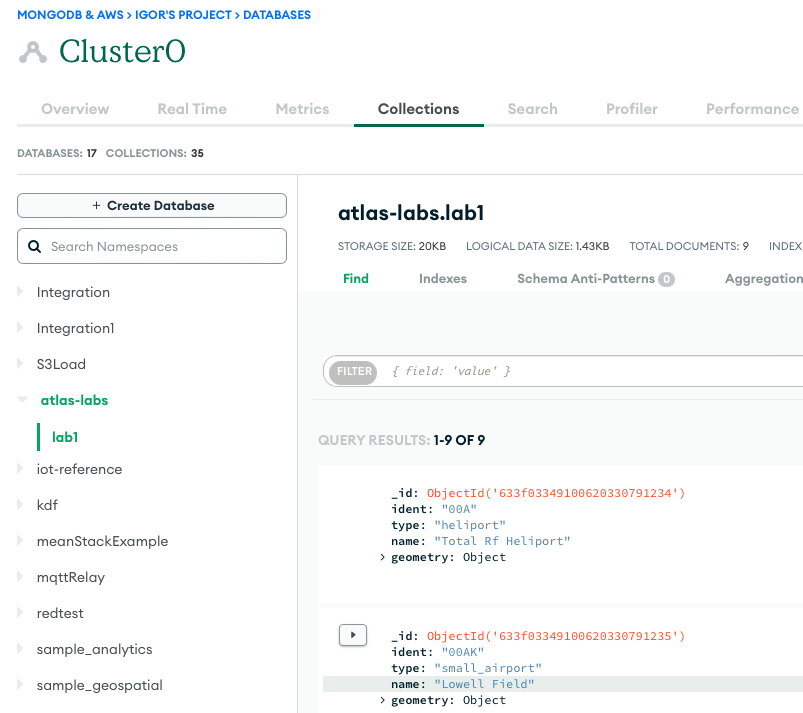

- To confirm a successful run, observe the contents of the MongoDB Atlas database collection if loading the data, or the S3 bucket if you were performing an extract.

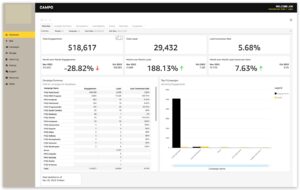

The following screenshot shows the results of a successful data load from an Amazon S3 bucket into the MongoDB Atlas cluster. The data is now available for queries in the MongoDB Atlas UI.

- To troubleshoot your runs, review the Amazon CloudWatch logs using the link on the job's Runs tab.

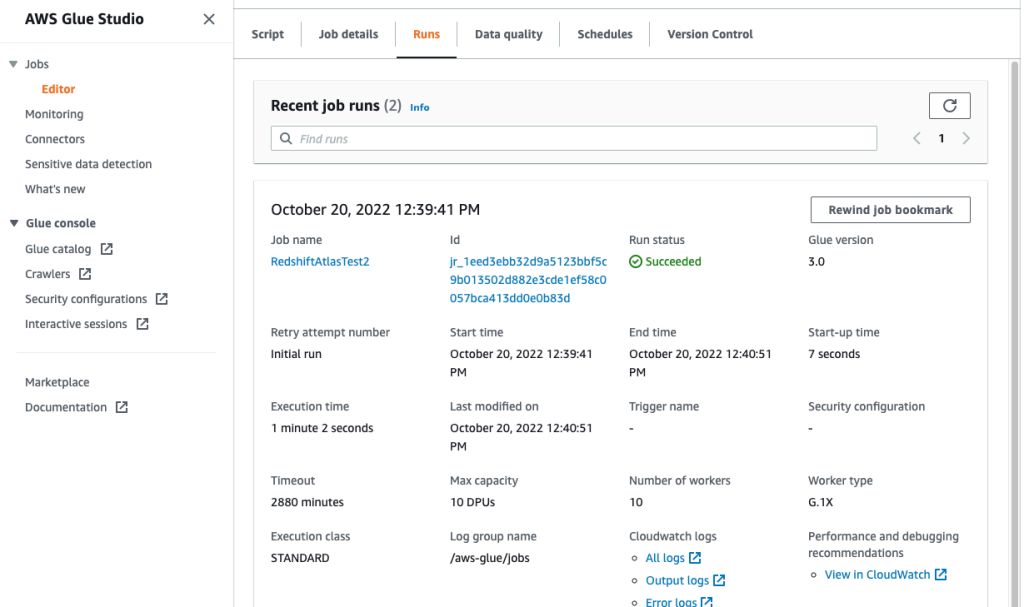

The following screenshot shows that the job ran successfully, with additional details such as links to the CloudWatch logs.
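You can also check a run programmatically. The sketch below assumes a boto3 Glue client and reads the `JobRun` section of the `get_job_run` response (`Id`, `JobRunState`, `ErrorMessage`). The helper names are hypothetical. With standard (non-continuous) logging, Glue writes each run's output to the `/aws-glue/jobs/output` and `/aws-glue/jobs/error` log groups, in a stream named after the run ID. With continuous logging enabled, the logs go elsewhere (by default `/aws-glue/jobs/logs-v2`), so treat these names as an assumption to verify.

```python
import time
from typing import Any

# Run states this sketch treats as final (values of JobRun["JobRunState"]).
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}

# Default log groups when continuous logging is off (an assumption to verify).
LOG_GROUPS = ("/aws-glue/jobs/output", "/aws-glue/jobs/error")


def summarize_run(response: dict[str, Any]) -> dict[str, Any]:
    """Condense a glue.get_job_run response into the fields needed to triage a run."""
    run = response["JobRun"]
    state = run["JobRunState"]
    return {
        "run_id": run["Id"],
        "state": state,
        "finished": state in TERMINAL_STATES,
        "succeeded": state == "SUCCEEDED",
        # ErrorMessage is typically present only for unsuccessful runs.
        "error": run.get("ErrorMessage"),
        # With default logging, each run writes to a stream named after its run ID.
        "log_groups": LOG_GROUPS,
        "log_stream": run["Id"],
    }


def wait_for_run(glue: Any, job_name: str, run_id: str, poll_seconds: float = 30.0) -> dict[str, Any]:
    """Poll get_job_run on a boto3 Glue client until the run reaches a final state."""
    while True:
        summary = summarize_run(glue.get_job_run(JobName=job_name, RunId=run_id))
        if summary["finished"]:
            return summary
        time.sleep(poll_seconds)
```

For example, you could call `wait_for_run(boto3.client("glue"), "atlas-load-job", run_id)`. Here, `run_id` is the `JobRunId` returned by `start_job_run`, and the job name is hypothetical.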

Conclusion

In this post, we described how to ingest data into and extract data from MongoDB Atlas using AWS Glue.

With AWS Glue ETL jobs, you can now transfer data from MongoDB Atlas to AWS Glue-compatible data stores, and vice versa. You can also extend the solution to build analytics using AWS AI and ML services.

To learn more, refer to the GitHub repository for step-by-step instructions and sample code. You can procure MongoDB Atlas on AWS Marketplace.

About the Authors

Igor Alekseev is a Senior Partner Solutions Architect at AWS in the Data and Analytics domain. In his role, Igor works with strategic partners, helping them build complex, AWS-optimized architectures. Before joining AWS, he implemented many Big Data projects as a data/solutions architect, including several data lakes in the Hadoop ecosystem. As a data engineer, he was involved in applying AI/ML to fraud detection and office automation.

Babu Srinivasan is a Senior Partner Solutions Architect at MongoDB. In his current role, he works with AWS to build the technical integrations and reference architectures for AWS and MongoDB solutions. He has more than two decades of experience in database and cloud technologies. He is passionate about providing technical solutions to customers working with multiple Global System Integrators (GSIs) across multiple geographies.

Source: https://aws.amazon.com/blogs/big-data/compose-your-etl-jobs-for-mongodb-atlas-with-aws-glue/
