This article was published as a part of the Data Science Blogathon

Overview

Julia is a programming language that is popular not only for its friendly environment but also for its ready-made packages, which make NLP much easier. You might remember the long Python code we write for data cleaning, string comparison, data extraction, embeddings, etc. Julia offers packages that handle these tasks in a few lines. Want to see how? Let's dive in!

Table of Contents

- Introduction
- Word Tokenizers package
- String Distance package
- Embeddings Package
- Transformers package
- Conclusion

Introduction

In Natural Language Processing (NLP) we generally deal with text processing. It covers everything from tokenization, segmentation, classification, parsing, and tagging to semantic reasoning. Text is the central element in NLP, and processing it takes most of the effort because the code involved tends to be long. Performing all of these operations by hand costs a lot of time, space, and attention. Julia's packages replace those 2-3 pages of code that take hours to write. Here, we will learn about these packages with the help of runnable code.

Word Tokenizers package in Julia

One of the most basic text processing packages is WordTokenizers.jl. It performs tasks such as extracting tokens from sentences, extracting sentences from paragraphs, and extracting sentences and tokens from paragraphs in one step. Here we go through all three variations one by one with the help of examples.

Official package link: https://github.com/JuliaText/WordTokenizers.jl

A). Installation

To install this package, run the following command in Julia's package manager (press ] at the julia> prompt to enter it):

pkg> add WordTokenizers

B). Split sentence into tokens

The WordTokenizers.jl package has a method called tokenize(text).

With the default tokenizer, this method splits the sentence on whitespace and also separates punctuation into tokens of its own. You don't have to separate tokens explicitly; just call the tokenize method and pass the text.

julia> using WordTokenizers

julia> text = "I also have dream of creating my own tools like others have.";

julia> tokenize(text) |> print # Default tokenizer

SubString{String}["I", "also", "have", "dream", "of", "creating", "my", "own", "tools", "like", "others", "have", "."]

C). Split paragraph into sentences

The WordTokenizers.jl package has a method called split_sentences(text). This method looks for sentence-ending punctuation, such as the full stop, and splits the paragraph into sentences at those points.

julia> text = "I also have dream of creating my own tools like others have. But the thing which I found different in myself is my craze. I had that sarcasm which made me to do so.";

julia> split_sentences(text)

3-element Array{SubString{String},1}:
 "I also have dream of creating my own tools like others have."
 "But the thing which I found different in myself is my craze."
 "I had that sarcasm which made me to do so."
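To see what splitting on sentence-ending punctuation amounts to, here is a minimal plain-Julia sketch. The helper `naive_split_sentences` is a hypothetical name of my own, not part of WordTokenizers.jl, and the package's own splitter is assumed to be more careful than this simple rule (for instance around abbreviations).

```julia
# Hypothetical helper, not part of WordTokenizers.jl:
# cut the text wherever '.', '!' or '?' is followed by whitespace.
naive_split_sentences(text::AbstractString) = split(text, r"(?<=[.!?])\s+")

paragraph = "I also have dream of creating my own tools like others have. " *
            "But the thing which I found different in myself is my craze. " *
            "I had that sarcasm which made me to do so."

sentences = naive_split_sentences(paragraph)
println(length(sentences))   # number of sentences found

# The naive rule is fooled by abbreviations such as "Dr."
println(naive_split_sentences("Dr. Rao tokenizes text. She uses Julia."))
```

The second call shows why a rule-based splitter needs a list of exceptions: the abbreviation is treated as a sentence of its own.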

D). Split paragraph into Sentence + Tokens

To split a paragraph into sentences and each sentence into tokens, broadcast tokenize over the result of split_sentences(text).

julia> tokenize.(split_sentences(text))

3-element Array{Array{SubString{String},1},1}:
 SubString{String}["I", "also", "have", "dream", "of", "creating", "my", "own", "tools", "like", "others", "have", "."]
 SubString{String}["But", "the", "thing", "which", "I", "found", "different", "in", "myself", "is", "my", "craze", "."]
 SubString{String}["I", "had", "that", "sarcasm", "which", "made", "me", "to", "do", "so", "."]

E). Availability of many different algorithms for parsing:

- Reversible Tokenizer: a tokenizer whose result can be reversed to recover the original text. It splits the text into separate tokens.
- TokTok Tokenizer: a regular-expression-based tokenizer.
- Tweet Tokenizer: a tokenizer that focuses on splitting tweets, including emojis, HTML inserts, and more.
- NLTK Word Tokenizer: the typical algorithm used by the NLTK library, considered better than the previous ones at handling Unicode characters, etc.
- Poorman's Tokenizer: removes all punctuation marks and splits on spaces. Sometimes it works even worse than a plain split.
- Punctuation Space Tokenizer: an improvement on the previous algorithm that tracks word boundaries. For example, it keeps hyphenated words in one piece.
- Penn Tokenizer: the implementation of the tokenizer used in the Penn Treebank corpus.
- Improved Penn Tokenizer: a modification implemented according to an algorithm from the NLTK library.

To choose the tokenization algorithm, call set_tokenizer with the desired function, for example set_tokenizer(nltk_word_tokenize).
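To make the weakness of the simplest algorithm concrete, here is a rough plain-Julia imitation of a "poor man's" tokenizer. The function `poormans_sketch` is a hypothetical helper written for this article, not the package's implementation; it only assumes the behaviour described above (delete punctuation, then split on whitespace).

```julia
# Hypothetical helper: delete every punctuation character, then split on whitespace.
function poormans_sketch(text::AbstractString)
    cleaned = filter(c -> !ispunct(c), text)
    return split(cleaned)
end

text = "I also have dream of creating my own tools like others have."
tokens = poormans_sketch(text)
println(tokens)

# Hyphens are punctuation too, so a hyphenated word is glued together.
println(poormans_sketch("A well-known fact."))
```

Because the hyphen is deleted along with the full stop, "well-known" loses its internal boundary, which is the kind of case the punctuation space tokenizer is meant to handle better.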

String Distance Package

The StringDistances.jl package makes string comparison easy in Julia. A complete analysis of documents is not always necessary. Sometimes we only need to solve applied problems of determining how similar two strings are, for example, identifying typos or incomplete titles. StringDistances.jl is very convenient whenever you want to compare strings.

using StringDistances

compare("shikha", "shikha", Hamming())
#> 1.0
compare("shikha", "shihka", Jaro())
#> 0.9444444444444445
compare("shikha", "shihka", Winkler(Jaro()))
#> 0.9611111111111111
compare("shikha", "shikhaa", QGram(2))
#> 0.9230769230769231
compare("shikha", "shikhaa", Winkler(QGram(2)))
#> 0.9538461538461539

The value returned by the compare method is the proximity of the strings. We treat 1 as a complete match and 0 as no match.
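As a rough illustration of how a distance turns into a proximity score between 0 and 1, here is a plain-Julia sketch for the Hamming case. The function `hamming_similarity` is a hypothetical helper, and restricting it to strings of equal length is my own simplification; it is not the package's code.

```julia
# Hypothetical helper: similarity = 1 - (number of mismatched positions / length).
function hamming_similarity(a::AbstractString, b::AbstractString)
    length(a) == length(b) || throw(ArgumentError("strings must have equal length"))
    isempty(a) && return 1.0
    mismatches = count(((x, y),) -> x != y, zip(a, b))
    return 1.0 - mismatches / length(a)
end

println(hamming_similarity("shikha", "shikha"))   # identical strings
println(hamming_similarity("shikha", "shihka"))   # two of six positions differ
```

Dividing by the length is what keeps the score in the 0 to 1 range regardless of how long the strings are.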

Of the metrics in common use today, Jaro-Winkler is chosen often. The reason is its speed, which is quite high, although its accuracy is not that great by modern standards. The RatcliffObershelp metric, for example, is more precise when determining the similarity of long strings with multiple words. In addition, it can be combined with preprocessing. Let's say you have two strings with the same words in a different order; then you can sort the tokens before comparing.

compare("shikha and shia", "shia and shikha", RatcliffObershelp())
#> 0.44444
compare("shikha and shia", "shia and shikha", TokenSort(RatcliffObershelp()))
#> 1.0
compare("shikha and shia", "shikha is crazily fighting with shia", TokenSet(Jaro()))
#> 0.944444
compare("shikha and shia", "shikha is crazily fighting with shia", TokenMax(RatcliffObershelp()))
#> 0.855

When a large number of strings has to be compared, the preprocessing result should be saved first. For example, instead of calling TokenSort every time, do it once and store the intermediate sorted results. One of the advantages of Julia here is that the library is implemented entirely in Julia, so its code can easily be optimized or reused in parts in your own implementation.
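A minimal sketch of that idea in plain Julia follows. The helper `token_sort` and the `sorted_cache` dictionary are hypothetical names introduced for illustration; the sketch only imitates the sorting step and does not call StringDistances.jl.

```julia
# Hypothetical helper: put the whitespace-separated tokens of a string in sorted order.
token_sort(s::AbstractString) = join(sort(split(s)), " ")

titles = ["shikha and shia", "shia and shikha", "shia is here"]

# Preprocess every string once and keep the results for later comparisons.
sorted_cache = Dict(t => token_sort(t) for t in titles)

# Strings with the same words in a different order now look identical.
println(sorted_cache["shikha and shia"] == sorted_cache["shia and shikha"])
```

Any metric can then be applied to the cached strings directly, so the sorting cost is paid once per string rather than once per comparison.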

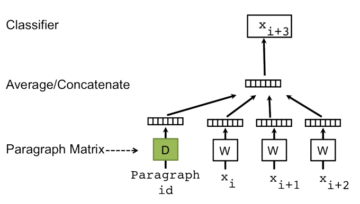

Embeddings Package in Julia

When we want to vectorize texts into a vector space, we use the Embeddings.jl package of Julia. The main advantage of these multidimensional spaces is that adding or subtracting the vectors of certain words gives a result close to other words that appear in similar contexts in the text corpus on which the model was trained. One of the first well-known algorithms for such vectorization was Word2Vec. A typical example of operations on word vectors: king - man + woman ≈ queen. The dimensionality of these spaces depends on the dataset used. For example, there are models trained on the news, on Wikipedia articles, in only one language, or in several languages at once. As a result, the dimensionality can vary over a wide range, from hundreds to thousands of dimensions. Generally, these spaces are called “semantic spaces”, and the distance between vectors is often called “semantic distance”.
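The vector arithmetic can be tried out on a toy example. The two-dimensional vectors below are invented for illustration (real embeddings have hundreds of dimensions and are learned from a corpus), and `nearest_word` is a hypothetical helper, not a function of Embeddings.jl.

```julia
using LinearAlgebra

# Toy 2-D "embeddings", invented for illustration:
# axis 1 loosely means "royalty", axis 2 loosely means "gender".
toy = Dict(
    "king"  => [1.0,  1.0],
    "queen" => [1.0, -1.0],
    "man"   => [0.0,  1.0],
    "woman" => [0.0, -1.0],
    "apple" => [-1.0, 0.0],
)

cosine_similarity(a, b) = dot(a, b) / (norm(a) * norm(b))

# Return the word whose vector is most similar to `target`, skipping `exclude`.
function nearest_word(target, table; exclude = String[])
    best_word, best_score = "", -Inf
    for (word, v) in table
        word in exclude && continue
        score = cosine_similarity(target, v)
        if score > best_score
            best_word, best_score = word, score
        end
    end
    return best_word
end

target = toy["king"] - toy["man"] + toy["woman"]
answer = nearest_word(target, toy; exclude = ["king", "man", "woman"])
println(answer)
```

The query words are excluded from the search because the result of the arithmetic usually stays close to its own inputs.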

Official package link: https://github.com/JuliaText/Embeddings.jl

The main feature of vectorization methods classified as “embedding” is that any word, sentence, or text can be represented as one vector with a fixed dimension. As a result, problems of matching documents, including clustering and classification, can be solved much faster than, for example, with inverted indices. They can also be solved more precisely than with a term-document matrix, since that matrix does not use the context of words in any way. A separate issue is which measure of distance to use in each specific vector space. Usually the cosine distance is used, but the choice depends on the properties of the selected vector space.
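One simple way to get a fixed-dimension vector for a whole text is to average the vectors of its words and then compare texts by cosine distance. The sketch below does this in plain Julia; the three-dimensional vectors are invented, `text_vector` is a hypothetical helper, and averaging is my own choice of technique for illustration rather than something the package prescribes.

```julia
using LinearAlgebra

# Toy 3-D word vectors, invented for illustration only.
toy_vectors = Dict(
    "cats"   => [0.9, 0.1, 0.0],
    "dogs"   => [0.8, 0.2, 0.0],
    "purr"   => [0.7, 0.0, 0.3],
    "bark"   => [0.6, 0.1, 0.3],
    "stocks" => [0.0, 0.9, 0.4],
    "fall"   => [0.1, 0.7, 0.6],
)

# Mean pooling: average the vectors of the known words in a text.
function text_vector(text, table)
    vecs = [table[w] for w in split(lowercase(text)) if haskey(table, w)]
    isempty(vecs) && throw(ArgumentError("no known words in text"))
    return sum(vecs) / length(vecs)
end

cosine_distance(a, b) = 1 - dot(a, b) / (norm(a) * norm(b))

v1 = text_vector("cats purr", toy_vectors)
v2 = text_vector("dogs bark", toy_vectors)
v3 = text_vector("stocks fall", toy_vectors)

println(cosine_distance(v1, v2))   # two texts about pets
println(cosine_distance(v1, v3))   # pets versus finance
```

Every text ends up with the same number of dimensions no matter how many words it contains, which is what makes the vectors directly comparable.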

The vectorization methods provided by our favorite Embeddings.jl package include Word2Vec, GloVe, and FastText. The last option is pre-trained on a large number of documents. For any vectorization method you can retrain the model on your own dataset, but the retraining time can be very long. One drawback of most of these methods is the large amount of data involved, including a dictionary of terms and a vector for each of them. To use word2vec, for example, you need about 8-16 GB of RAM. Otherwise, the matrix of vectors may simply not fit in memory.

Another package, DataDeps.jl, automates the download of large datasets at the moment they are needed (“lazy loading”). If you run a program using Embeddings.jl for the first time, the text console will ask whether to download the data. Since this can also happen on a server in a background process, you can accept downloads automatically by setting an environment variable.

ENV["DATADEPS_ALWAYS_ACCEPT"] = true

The only requirement is that there is sufficient disk space to store the downloaded files. Downloaded dependencies are placed in a directory ~/.julia/datadeps by dataset names.

The first stage is loading data for the required vectorization method:

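using Embeddings

const embtable = load_embeddings(Word2Vec)  # load the pre-trained table (downloaded on first use)
const get_word_index = Dict(word => ii for (ii, word) in enumerate(embtable.vocab))  # word => column number

function get_embedding(word)
    ind = get_word_index[word]
    emb = embtable.embeddings[:, ind]
    return emb
end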

The second stage is the vectorization of each individual word or phrase:

julia> get_embedding("blue")

300-element Array{Float32,1}:
  0.01540828
  0.03409082
  0.0882124
  0.04680265
 -0.03409082
  ...
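The lookup relies on a simple layout: a vocabulary list plus a matrix with one column per word. The toy table below imitates that layout in plain Julia so the indexing can be tried without downloading anything; the words, the numbers, and the names `toy_vocab`, `toy_matrix`, and `lookup` are all invented for illustration.

```julia
# Toy stand-in for an embedding table: 2 dimensions, 3 words (invented values).
toy_vocab  = ["blue", "red", "green"]
toy_matrix = Float32[0.1 0.4 0.7;
                     0.2 0.5 0.8]   # column i holds the vector of toy_vocab[i]

# Map each word to its column number, as in the real lookup above.
toy_index = Dict(word => i for (i, word) in enumerate(toy_vocab))

lookup(word) = toy_matrix[:, toy_index[word]]

println(lookup("red"))
println(size(toy_matrix))   # (dimensions, number of words)
```

Building the dictionary once avoids searching the vocabulary list on every lookup.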

Words can be retrieved by the WordTokenizers or by TextAnalysis. You can add vectors using standard operations already built into Julia:

julia> a = rand(5)
5-element Array{Float64,1}:
 0.012300397820243392
 0.13543646950484067
 0.9780602985106086
 0.24647179461578816
 0.18672770774122105

julia> b = ones(5)
5-element Array{Float64,1}:
 1.0
 1.0
 1.0
 1.0
 1.0

julia> a+b
5-element Array{Float64,1}:
 1.0123003978202434
 1.1354364695048407
 1.9780602985106086
 1.2464717946157882
 1.186727707741221

Clustering algorithms are provided by the Clustering.jl package. For machine learning methods, including classification, see the MLJ.jl package. If you wish to implement clustering algorithms yourself, I recommend the package https://github.com/JuliaStats/Distances.jl, which provides a large set of algorithms for calculating the distance between vectors:

- Mean squared deviation
- Root mean squared deviation
- Normalized root mean squared deviation
- Squared Euclidean distance
- Periodic Euclidean distance
- Total variation distance
- Euclidean distance
- Jaccard distance
- Hamming distance
- Cosine distance
- Correlation distance
- Bhattacharyya distance
- Mean absolute deviation

These distance measures can be used when performing clustering or classification.
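To show what a few of these measures compute, here they are written out in plain Julia. These one-liners are sketches of the textbook formulas with function names of my own choosing; they are not the Distances.jl implementations, which should be preferred in real code.

```julia
# Plain-Julia sketches of a few measures from the list above.
sq_euclidean(x, y)     = sum(abs2, x .- y)               # squared Euclidean distance
euclidean_dist(x, y)   = sqrt(sq_euclidean(x, y))        # Euclidean distance
mean_sq_dev(x, y)      = sq_euclidean(x, y) / length(x)  # mean squared deviation
root_mean_sq_dev(x, y) = sqrt(mean_sq_dev(x, y))         # root mean squared deviation
mean_abs_dev(x, y)     = sum(abs, x .- y) / length(x)    # mean absolute deviation

x = [1.0, 2.0, 3.0]
y = [1.0, 4.0, 6.0]

println(sq_euclidean(x, y))     # 0^2 + 2^2 + 3^2
println(euclidean_dist(x, y))
println(mean_abs_dev(x, y))
```

All five are built from the element-wise difference of the two vectors, so they differ only in how that difference is aggregated.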

Transformers package in Julia

Transformers.jl is a pure Julia implementation of the Transformer architecture on which Google's BERT neural network is based. This network and its modifications have become very popular for solving the NER problem (marking up named entities) as well as for vectorizing words.

Transformers.jl uses the Flux.jl package, which, in turn, is not only a pure Julia library for implementing neural networks but also one of the fastest implementations for performing such operations. Flux.jl easily switches from CPU to GPU, but this is rather a general requirement for libraries that implement neural network functions.

In this article, we will not go into the specifics of BERT or neural networks as such. Let's limit ourselves to an example of code for vectorizing texts:

using Transformers
using Transformers.Basic
using Transformers.Pretrain
using Transformers.Datasets
using Transformers.BidirectionalEncoder
using Flux
using Flux: onehotbatch, gradient
import Flux.Optimise: update!
using WordTokenizers

ENV["DATADEPS_ALWAYS_ACCEPT"] = true

const FromScratch = false

#use wordpiece and tokenizer from pretrain
const wordpiece = pretrain"bert-uncased_L-12_H-768_A-12:wordpiece"
const tokenizer = pretrain"bert-uncased_L-12_H-768_A-12:tokenizer"
const vocab = Vocabulary(wordpiece)

const bert_model = gpu(
    FromScratch ? create_bert() : pretrain"bert-uncased_L-12_H-768_A-12:bert_model"
)
Flux.testmode!(bert_model)

function vectorize(str::String)
    tokens = str |> tokenizer |> wordpiece
    text = ["[CLS]"; tokens; "[SEP]"]
    t_indices = vocab(text)
    seg_indices = [fill(1, length(tokens) + 2);]
    sample = (tok = t_indices, seg = seg_indices)
    bert_embedding = sample |> bert_model.embed
    collect(sum(bert_embedding, dims=2)[:])
end

The vectorize method defined here turns a text into a vector. It is used as follows:

using Distances

x1 = vectorize("Some deserves love")

x2 = vectorize("Some deserves hatred")

cosine_dist(x1, x2)

To close this section, I would like to note once again that Transformers.jl allows you to vectorize text, and the further use of these vectors depends entirely on the task to be solved.
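The last line of vectorize collapses a matrix of per-token embeddings into a single vector by summing over the tokens. The plain-Julia sketch below shows that step on an invented matrix, assuming features are stored in rows and tokens in columns; `sum_pool` is a hypothetical name and no model is involved.

```julia
# Same pooling expression as in vectorize: sum over tokens (dims=2), then flatten.
sum_pool(m) = collect(sum(m, dims=2)[:])

# Toy "token embeddings": 4 features (rows) for 3 tokens (columns), invented values.
token_embeddings = [1.0 2.0 3.0;
                    0.5 0.5 0.5;
                    0.0 1.0 0.0;
                    2.0 0.0 1.0]

text_vector = sum_pool(token_embeddings)
println(text_vector)

# A longer text (one more token) still yields a vector of the same length.
longer = hcat(token_embeddings, [1.0, 1.0, 1.0, 1.0])
println(length(sum_pool(longer)))
```

This is why texts of different lengths can be passed to cosine_dist: the pooled vectors always have one entry per feature.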

Conclusion

After reading this article, I hope many NLP enthusiasts will give Julia a try. You may want to shift too: as coders, we should not spend time on things that libraries already do for us.
