Microsoft is keeping up its pace in the AI race with Visual ChatGPT, a new system that combines ChatGPT with visual foundation models (VFMs), including Transformers, ControlNet, and Stable Diffusion. Sounds good? The technique also lets ChatGPT conversations go beyond text alone. As the GPT-4 release date approaches, the future of ChatGPT is getting brighter with each passing day.

Even though there are a lot of successful AI image generators, like DALL-E 2, Wombo Dream, and more, a freshly developed AI art tool always receives a warm welcome from the community. Will Visual ChatGPT continue this tradition? Let’s take a closer look.

What is Visual ChatGPT?

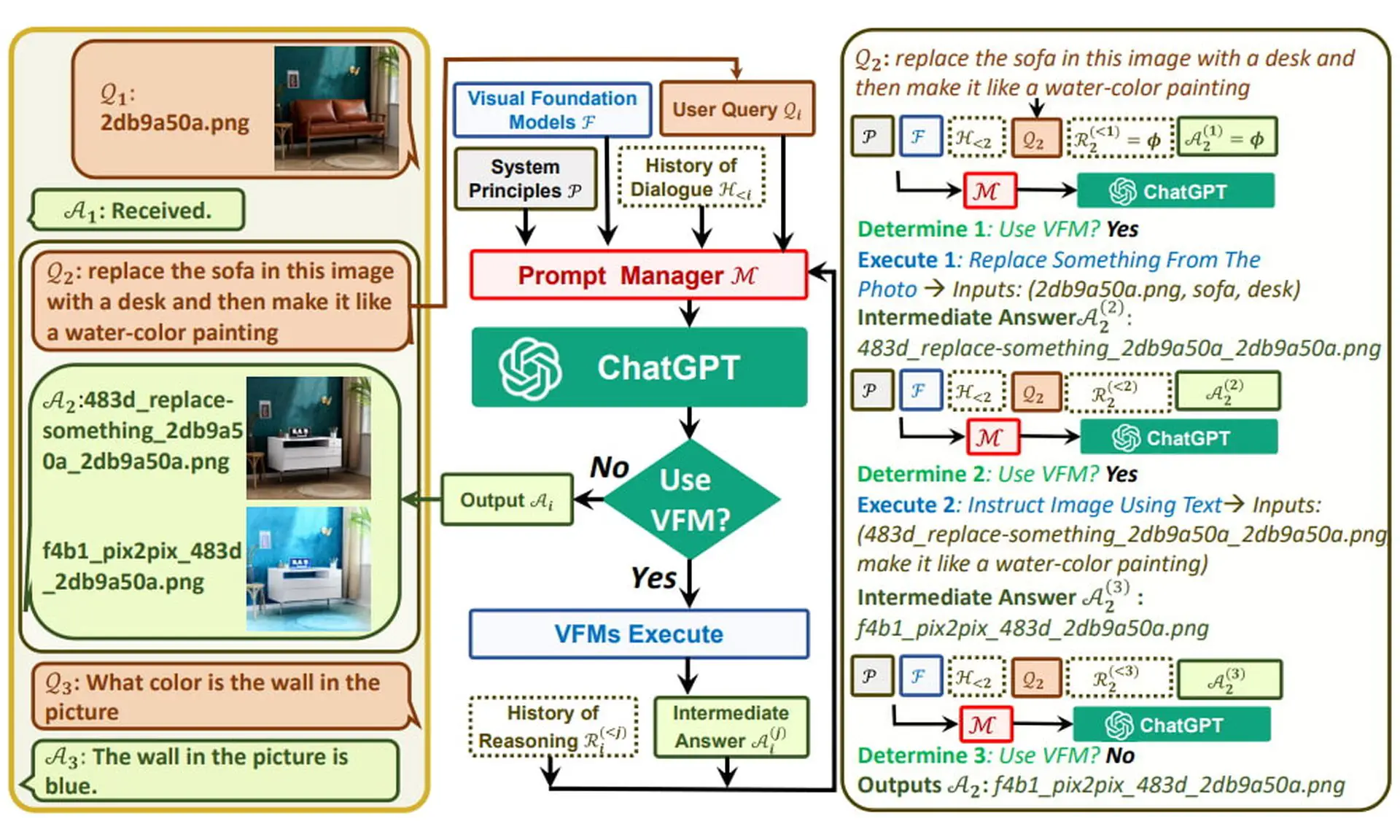

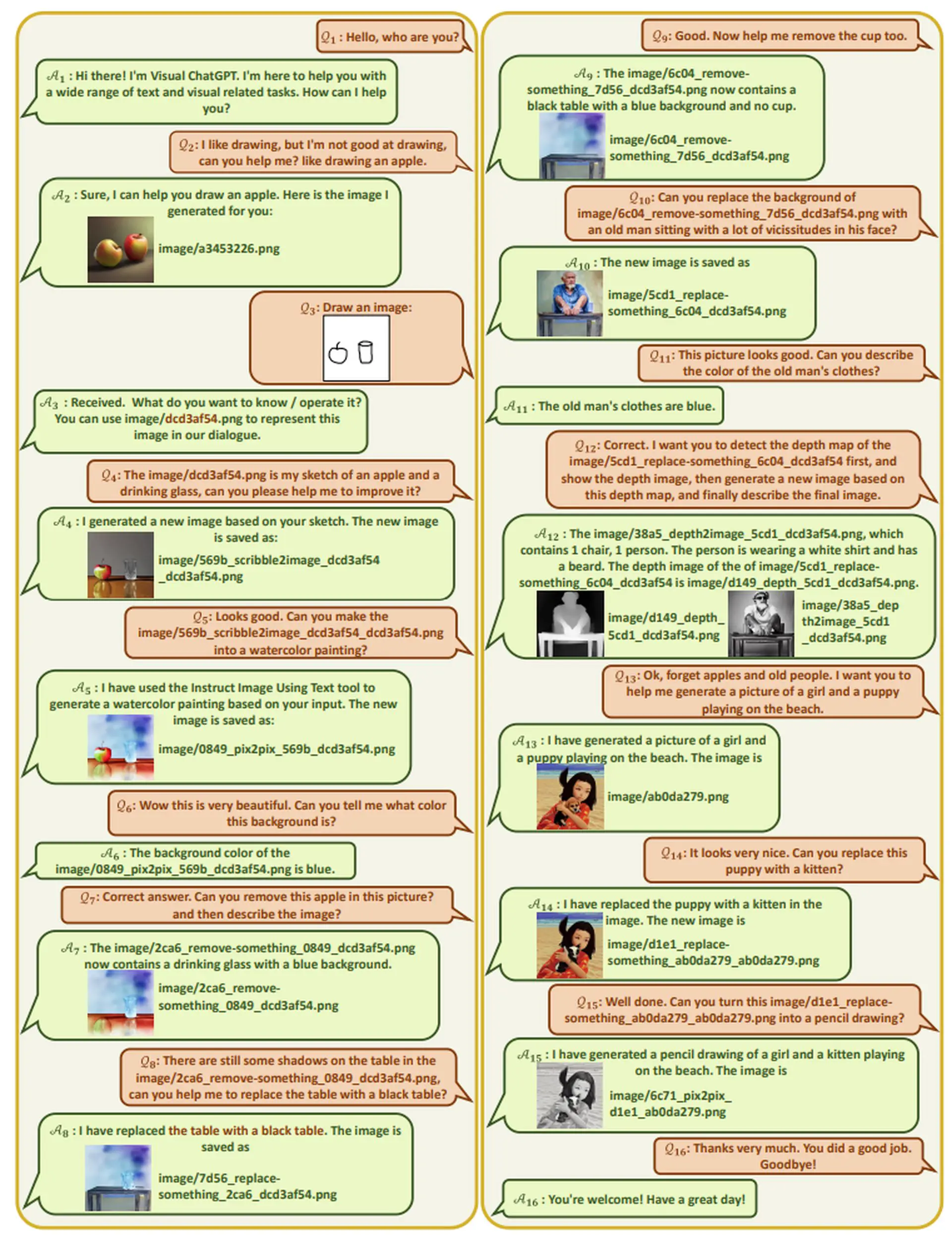

Visual ChatGPT is a new model that combines ChatGPT with VFMs like Transformers, ControlNet, and Stable Diffusion. In essence, it acts as a bridge between users and visual models, allowing users to communicate via chat and generate visuals in the same conversation.

ChatGPT is currently limited to writing a description for use with Stable Diffusion, DALL-E, or Midjourney; it cannot process or generate images on its own. Yet with the Visual ChatGPT model, the system could generate an image, modify it, crop out unwanted elements, and do much more.

ChatGPT has attracted interdisciplinary interest for its remarkable conversational competency and reasoning abilities across numerous sectors, making it an excellent choice for a language interface.

Its linguistic training, however, prevents it from processing or generating images. Meanwhile, visual foundation models, such as Visual Transformers or Stable Diffusion, demonstrate impressive visual comprehension and generation abilities when given tasks with one-round fixed inputs and outputs. A new system, like Visual ChatGPT, can be created by combining these two kinds of models.

“Instead of training a new multimodal ChatGPT from scratch, we build Visual ChatGPT directly based on ChatGPT and incorporate a variety of VFMs.”

-Microsoft

It enables users to communicate with ChatGPT in ways that go beyond words.

What are Visual foundation models (VFMs)?

The phrase “visual foundation models” (VFMs) commonly describes a group of fundamental algorithms used in computer vision. These models bring standard computer vision skills to AI applications and can serve as the basis for more complex models.

Visual ChatGPT features

Researchers at Microsoft have developed a system called Visual ChatGPT that features numerous visual foundation models and graphical user interfaces for interacting with ChatGPT.

What will change with Visual ChatGPT? It will be capable of the following:

- In addition to text, Visual ChatGPT can also generate and receive images.

- Complex visual inquiries or editing instructions that call for the collaboration of different AI models across multiple stages can be handled by Visual ChatGPT.

- To handle models with many inputs/outputs and those that require visual feedback, the researchers developed a series of prompts that integrate visual model information into ChatGPT. In testing, they found that Visual ChatGPT makes it possible to explore ChatGPT’s visual capabilities with the help of visual foundation models.
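The idea of describing visual models to a language model through prompts can be sketched roughly as follows. This is a minimal illustration, not the researchers' implementation: the `Tool` class, `build_system_prompt`, `dispatch`, and the two stand-in tools are all hypothetical names invented for this example, and the lambdas only return placeholder strings instead of calling real vision models.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    """Hypothetical wrapper around one visual foundation model."""
    name: str
    description: str
    run: Callable[[str], str]  # takes a prompt or image path, returns text or an image path


def build_system_prompt(tools: Dict[str, Tool]) -> str:
    """Describe every tool in plain text so a language model could choose among them."""
    lines = ["You can use the following tools:"]
    for tool in tools.values():
        lines.append(f"- {tool.name}: {tool.description}")
    return "\n".join(lines)


def dispatch(tools: Dict[str, Tool], tool_name: str, argument: str) -> str:
    """Run the tool the language model asked for, or report that it is unknown."""
    if tool_name not in tools:
        return f"Unknown tool: {tool_name}"
    return tools[tool_name].run(argument)


# Stand-in tools (assumption): a real system would call actual vision models here.
tools = {
    "caption": Tool("caption", "Describe the image at the given path.",
                    lambda path: f"a caption for {path}"),
    "text2image": Tool("text2image", "Generate an image from a text prompt.",
                       lambda prompt: "image/generated.png"),
}

print(build_system_prompt(tools))
print(dispatch(tools, "caption", "image/cat.png"))
```

In a sketch like this, the text returned by `build_system_prompt` would be placed in front of the conversation, and the language model's reply would be parsed for a tool name and argument before calling `dispatch`.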

It is not perfect yet. The researchers observed certain problems with their work, such as inconsistent generation results caused by failures of the visual foundation models (VFMs) and by the diversity of the prompts. They concluded that a self-correcting module is required to check that execution results are in line with human intentions and to make any necessary corrections. Because such a module would need to correct course repeatedly, including it could lengthen the model’s inference time. The team intends to research this matter further in a subsequent study.
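To see why a self-correcting step could lengthen inference time, consider the following rough sketch of a check-and-retry loop. It is only an assumption about what such a module might look like; the researchers have not published one, and `run_with_correction`, `fake_generate`, and `looks_right` are hypothetical names used purely for illustration.

```python
from typing import Callable, Tuple


def run_with_correction(generate: Callable[[int], str],
                        check: Callable[[str], bool],
                        max_attempts: int = 3) -> Tuple[str, int]:
    """Call generate() until check() accepts the result or attempts run out.

    Returns the last result and the number of attempts used.
    """
    attempts = 0
    result = ""
    while attempts < max_attempts:
        attempts += 1
        result = generate(attempts)
        if check(result):
            break
    return result, attempts


def fake_generate(attempt: int) -> str:
    """Stand-in generator (assumption): fails twice, then succeeds."""
    return "sharp image" if attempt >= 3 else "blurry image"


def looks_right(result: str) -> bool:
    """Stand-in checker (assumption): accepts only the 'sharp' output."""
    return result == "sharp image"


result, attempts = run_with_correction(fake_generate, looks_right)
print(result, attempts)  # sharp image 3
```

Every rejected result costs one more full model call, so the total time grows with the number of corrections needed.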

How to use Visual ChatGPT?

You need to run the Visual ChatGPT demo first. According to its GitHub page, here’s what you need to do for it:

```bash
# create a new environment
conda create -n visgpt python=3.8

# activate the new environment
conda activate visgpt

# prepare the basic environments
pip install -r requirement.txt

# download the visual foundation models
bash download.sh

# prepare your private OpenAI key
export OPENAI_API_KEY={Your_Private_Openai_Key}

# create a folder to save images
mkdir ./image

# start Visual ChatGPT
python visual_chatgpt.py
```

After the Visual ChatGPT demo starts to run on your PC, all you need to do is give it a prompt!
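Two of the setup steps, exporting `OPENAI_API_KEY` and creating the `./image` folder, are easy to forget. The small script below is a sketch of a pre-launch check you could run yourself; it is not part of the Visual ChatGPT repository, and the `preflight` function is a hypothetical helper written for this article.

```python
import os
from pathlib import Path
from typing import List, Mapping


def preflight(env: Mapping[str, str], image_dir: Path) -> List[str]:
    """Return a list of setup problems; an empty list means both checks passed."""
    problems = []
    if not env.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    if not image_dir.is_dir():
        problems.append(f"missing image folder: {image_dir}")
    return problems


# Check the current shell environment and the folder named in the steps above.
for problem in preflight(os.environ, Path("./image")):
    print(problem)
```

If the script prints nothing, both prerequisites are in place.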

Tools like Visual ChatGPT may lower the learning curve for text-to-image models and let different AI programs communicate with one another. Previous state-of-the-art models, such as LLMs and T2I models, were developed in isolation, but innovations that connect them may significantly improve what they can do together.

When it comes to producing images with ChatGPT, GPT-4 immediately comes to mind. So when will this highly anticipated model be released?

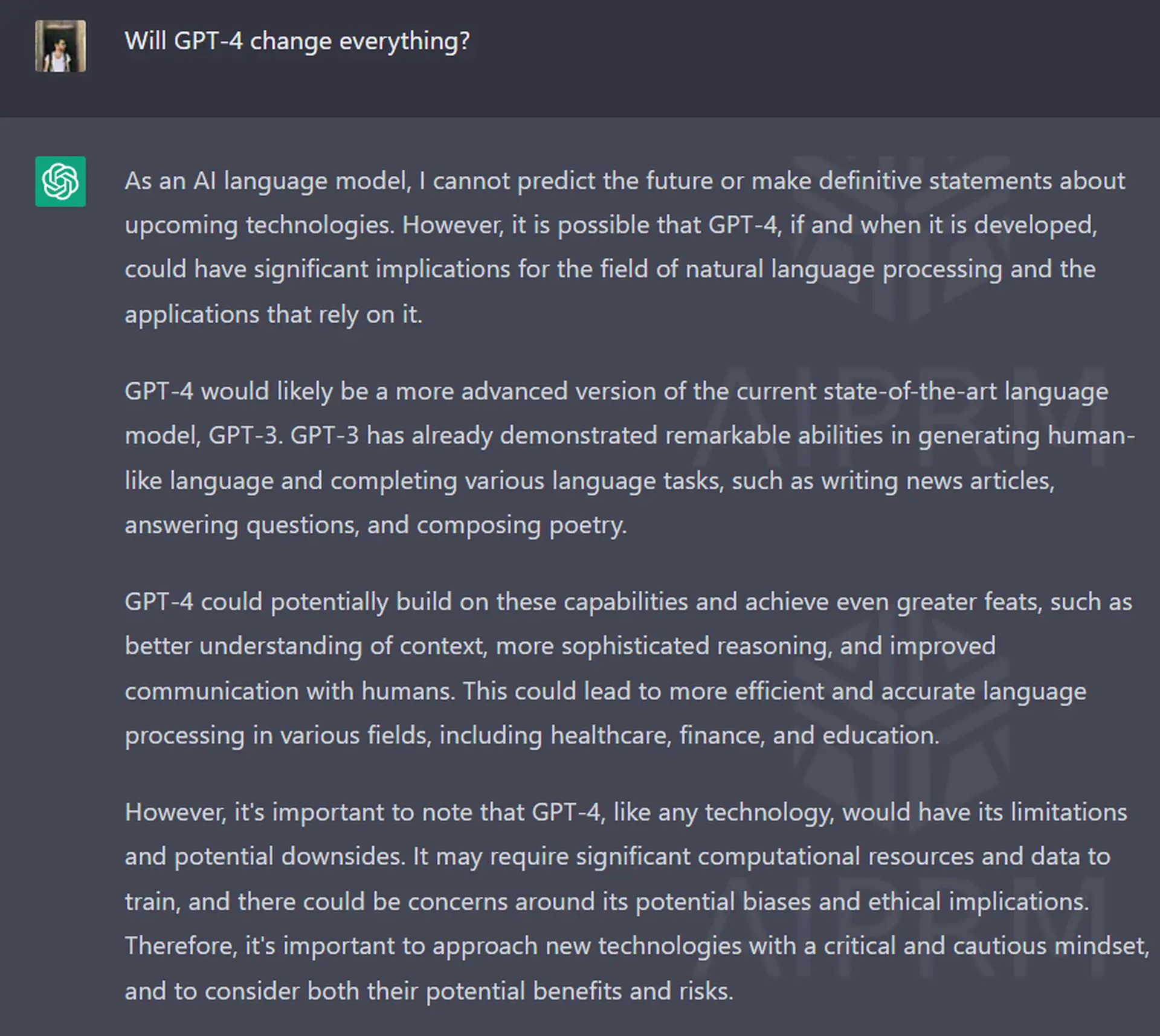

GPT-4 release date

According to Microsoft Germany’s chief technology officer (CTO), OpenAI, the company behind ChatGPT, is about to release a new artificial intelligence model called GPT-4, possibly as early as next week. The new version is widely expected to be vastly more capable than its predecessor, which would pave the way for the widespread adoption of generative AI in business.

Since 2019, when it invested $1 billion in OpenAI, Microsoft has been a crucial partner of the AI startup. Microsoft upped its share in the AI lab by several billion dollars in January, following the remarkable success of ChatGPT, an AI-powered chatbot that has taken the internet by storm in recent months.

Visual ChatGPT GPU memory usage

The Visual ChatGPT team also shared a list of the GPU memory usage of each visual foundation model.

| Foundation Model | Memory Usage (MB) |
|---|---|
| ImageEditing | 6667 |
| ImageCaption | 1755 |
| T2I | 6677 |
| canny2image | 5540 |
| line2image | 6679 |
| hed2image | 6679 |
| scribble2image | 6679 |
| pose2image | 6681 |
| BLIPVQA | 2709 |
| seg2image | 5540 |
| depth2image | 6677 |
| normal2image | 3974 |
| InstructPix2Pix | 2795 |

To save your GPU memory, you can modify “self.tools” with fewer visual foundation models.
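Before trimming the model list, it can help to estimate how much memory a given selection needs. The sketch below uses the figures from the table above and assumes, as a simplification, that the per-model numbers simply add up; real usage may differ. The `total_memory_mb` and `fits` helpers and the 12 GB budget are hypothetical and are not part of the Visual ChatGPT code.

```python
from typing import Iterable

# GPU memory usage per visual foundation model, in MB, as listed in the table.
MEMORY_MB = {
    "ImageEditing": 6667, "ImageCaption": 1755, "T2I": 6677,
    "canny2image": 5540, "line2image": 6679, "hed2image": 6679,
    "scribble2image": 6679, "pose2image": 6681, "BLIPVQA": 2709,
    "seg2image": 5540, "depth2image": 6677, "normal2image": 3974,
    "InstructPix2Pix": 2795,
}


def total_memory_mb(models: Iterable[str]) -> int:
    """Sum the listed memory usage of the chosen models (assumes usage is additive)."""
    return sum(MEMORY_MB[name] for name in models)


def fits(models: Iterable[str], budget_mb: int) -> bool:
    """Check whether the chosen models stay within a GPU memory budget."""
    return total_memory_mb(models) <= budget_mb


chosen = ["ImageCaption", "T2I", "BLIPVQA"]
print(total_memory_mb(chosen))     # 11141
print(fits(chosen, 12 * 1024))     # True
print(total_memory_mb(MEMORY_MB))  # 69052
```

Under this assumption, loading all thirteen models would need roughly 69 GB, which is why keeping only the models you plan to use matters on a single consumer GPU.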

Check out the paper for more detailed information.



- Source: https://dataconomy.com/2023/03/what-is-visual-chatgpt-how-to-use-gpt4-date/
