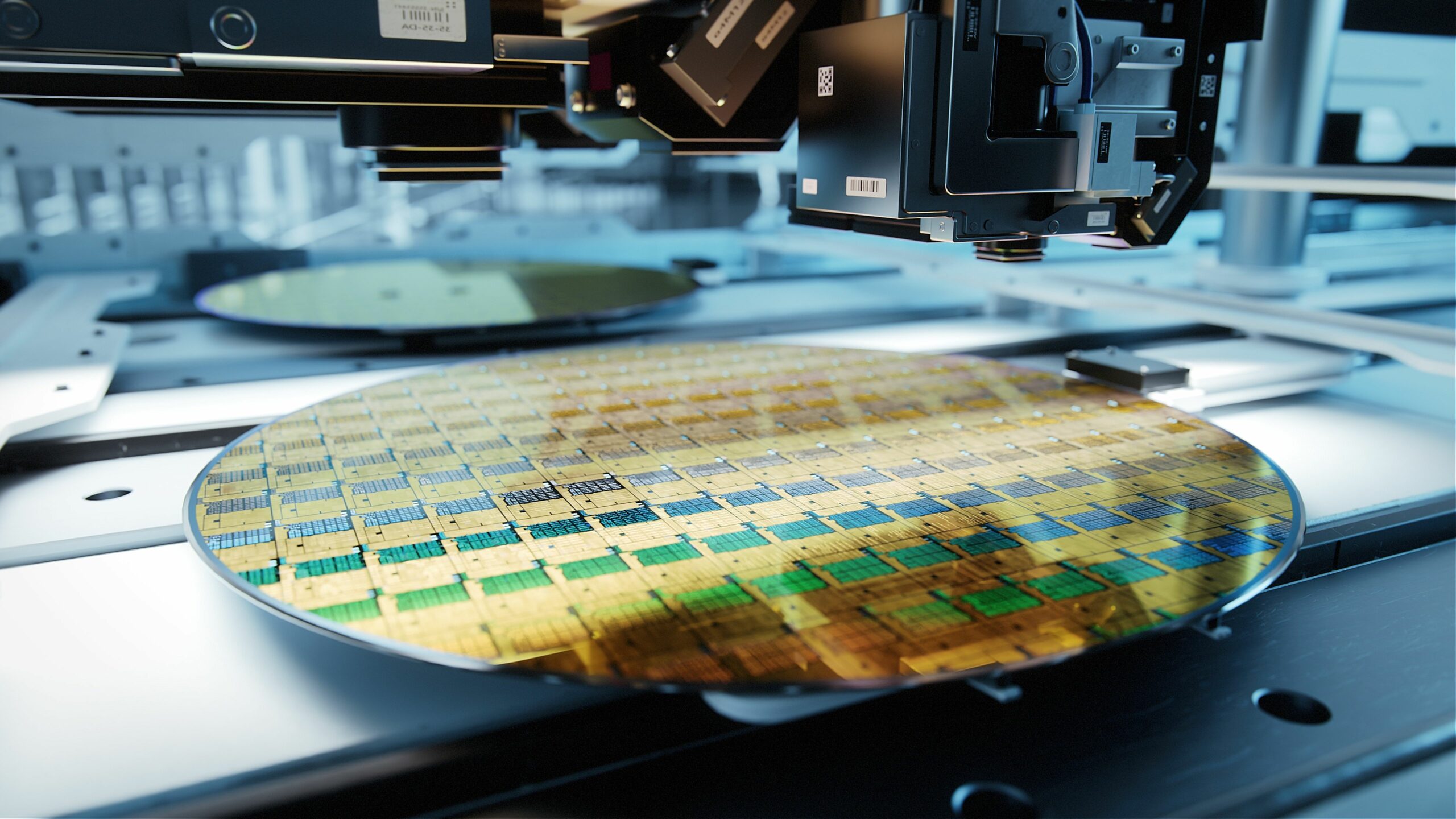

Expanding fab capacity is slow and expensive even under ideal circumstances. It has been still more difficult in recent years, as pandemic-related shortages have strained equipment supply chains.

When integrated circuit demand rises faster than expansions can fill the gap, fabs try to find “hidden” capacity through improved operations. They hope that more efficient workflows will allow existing equipment to deliver more finished wafers.

It’s an important goal, but one that has challenged the industry since its inception. Shiladitya Chakravorty, senior data scientist at GlobalFoundries, explained in work presented at last year’s ASMC conference that fab scheduling is a highly complex version of the so-called “job shop scheduling” problem.[1] Each lot of wafers passes through hundreds of process steps. While the list of steps is fixed, the flow is re-entrant because the lot visits some processes more than once.

The exact number of available tools for each step varies as tools are taken offline for maintenance or repairs. Some tools, like diffusion furnaces, consolidate multiple lots into large batches. Some sequences, like photoresist processing, must adhere to stringent time constraints. Lithography cells must match wafers with the appropriate reticles. Lot priorities change continuously. Even the time needed for an individual process step may change, as run-to-run control systems adjust recipe times for optimal results.

For all of these reasons, there is no deterministic “first principles” model of a full semiconductor fab, and no way to accurately predict a single wafer’s cycle time or exact route. Scheduling typically relies on rule-based heuristics and the ability of human managers and tool operators to make dispatch decisions in real time.

On the other hand, Chakravorty said, fabs have a lot of data about their operations. They have historical data for such variables as process time and cycle time, for both individual process cells and the fab as a whole. They know how often tools fail, how long they take to repair, and where the bottlenecks are. As machine learning systems become more capable, this data can help train them to assist human decision makers.

At the fab level, machine learning can support improved cycle time prediction and capacity planning. At the process cell or cluster tool level, it can inform WIP scheduling decisions. In between, it can facilitate better load balancing and order dispatching. As a first step, though, all of these applications need accurate models of the fab environment, which is a difficult problem.

Fab simulation with generative AI

In a presentation at this year’s Semicon West, minds.ai CTO Jasper van Heugten suggested that generative AI tools can be part of the solution. Generative AI extrapolates new data that “fits” an existing dataset. For example, a generative image tool might be used to reconstruct a scene from a low-resolution photograph. The company uses similar techniques to “up-sample” existing fab datasets. The technique can be used to develop a more detailed representation of the fab, or could perturb real-world data to generate multiple potential scenarios.
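
The scenario-generation idea can be illustrated with a far simpler stand-in than a generative model. The sketch below uses bootstrap resampling with multiplicative jitter to turn one set of historical process times into several perturbed scenarios. It is not the minds.ai technique; the function name, the `jitter` parameter, and the sample data are all assumptions made for illustration.

```python
import random

def perturb_scenarios(process_times, n_scenarios, jitter=0.1, seed=0):
    """Build n_scenarios lists by resampling the history with replacement
    and scaling each value by a random factor in [1 - jitter, 1 + jitter]."""
    rng = random.Random(seed)
    scenarios = []
    for _ in range(n_scenarios):
        sample = [
            rng.choice(process_times) * (1 + rng.uniform(-jitter, jitter))
            for _ in process_times
        ]
        scenarios.append(sample)
    return scenarios

# Hypothetical process times for one step, in minutes.
history = [42.0, 45.5, 39.8, 51.2, 44.1]
scenarios = perturb_scenarios(history, n_scenarios=3)
```

Each scenario has the same length as the history, and every value stays within the chosen jitter of some observed value, so the generated data remains plausible while still differing from run to run.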

In either case, the generated data can train better fab capacity models and dispatching tools. As Peter Lendermann, chief business development officer at D-Simlab Technologies, explained at the same conference’s Extended Chip Capacity session, capacity models seek to predict the result of a given assignment of wafers to tools. Metrics of interest include cycle time and throughput, but also the fab’s ability to deliver orders on time, and the impact of lot assignments on product quality. If the fab accelerates delivery of a high-priority lot, how much will that delay lower-priority lots? Models can provide information, but balancing competing priorities requires human input.

Schedule optimizers can use a robust capacity model to test their proposals and identify the “best” schedule. However, even this “ideal” is only a starting point. While it’s not possible for a fab-wide scheduler to respond to events in the actual fab in real time, setting guidelines for dispatching software itself is an important step forward. Often, the interactions between fab capacity changes and cycle time are not intuitive. Because dispatching software must act in real time, it necessarily prioritizes finding a “good enough” solution quickly over a “best” solution.

D-Simlab argued for the use of machine learning to support “situation-based dispatching.” The dispatch agent, trained using a fab simulation, learns which lot selections give “good” outcomes in particular situations, and uses that knowledge to make similar decisions in the fab.

Addressing local scheduling in real time

Local process cells are significantly more tractable, for both conventional and machine learning methods. Productivity improvements in bottleneck areas can have a substantial impact on the fab as a whole.

In work presented at this year’s ASMC, Semya Elaoud, R&D lead at Flexciton, discussed a project at Seagate Springtown that tied a fab-wide scheduler to a local toolset scheduler. The fab-wide scheduler used predicted wait times for future process steps to reprioritize WIP lots as needed every few minutes, then sent the updated priorities to toolset schedulers. The toolset schedulers, because they focused on smaller tool clusters, were able to provide exact schedules for, collectively, every step in the fab. The combined system achieved an 8.7% increase in overall fab throughput, with a 9.4% increase in lot moves for the lithography toolsets specifically. This work also found that automated dispatching could achieve better load balancing. Human dispatchers tended to favor tools located near the dispatch work station, while the automated system overcame this bias.[2]
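
The load-balancing point can be made concrete with a toy dispatcher that ignores tool location and always picks the tool with the least queued work. This is a generic greedy heuristic, not the Flexciton scheduler; the tool names and lot durations are hypothetical.

```python
def dispatch_least_loaded(lot_minutes, tools):
    """Assign each lot, in arrival order, to the tool with the smallest
    queued workload. Ties go to the tool listed first."""
    load = {tool: 0.0 for tool in tools}
    assignment = []
    for minutes in lot_minutes:
        tool = min(tools, key=load.__getitem__)
        load[tool] += minutes
        assignment.append(tool)
    return assignment, load

# Hypothetical processing times (minutes) for five lots and three tools.
assignment, load = dispatch_least_loaded(
    [30, 20, 25, 15, 10], ["litho_1", "litho_2", "litho_3"]
)
# load -> {'litho_1': 30.0, 'litho_2': 35.0, 'litho_3': 35.0}
```

A dispatcher biased toward the nearest tool would pile most of this work onto one queue; the greedy rule keeps the three queues within a few minutes of each other.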

Local scheduling also spotlights the relationships between productivity measures like cycle time and product quality measures like yield. For example, maintenance scheduling necessarily involves a tradeoff between tool availability and potentially degraded product quality. When demand is high, the fab might temporarily run above nominal capacity by deferring maintenance. Managers need tools to help assess the risks and benefits of this approach.

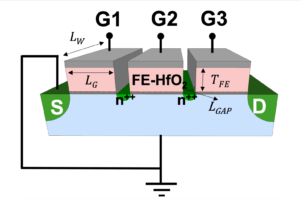

Scheduling also helps determine the yield achieved by time-constrained process sequences. In some photoresists, for instance, image quality degrades over time. Failure to complete the entire lithography process, from resist coat through exposure and development, within a limited amount of time, will lead to reduced yield and increased rework.

These sequences, called “time constraint tunnels” or “queue time restrictions” in the literature, pose special problems for scheduling systems. Before dispatching a lot to the first step in the sequence, the system needs to estimate the total cycle time and confirm that the lot will reach the last step in time and that other lots already in the tunnel will not be adversely affected. For example, it might be more efficient to run all lots requiring the same reticle together, but only if doing so does not overly delay other lots.
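
The admission check described above can be sketched as a simple gate. The version below assumes the scheduler already has a predicted cycle time for the new lot and an estimate of how much delay it would add to lots already inside; the `TunnelLot` fields and the `can_admit` function are hypothetical names, and a production system would derive these estimates from its own models.

```python
from dataclasses import dataclass

@dataclass
class TunnelLot:
    lot_id: str
    elapsed_min: float    # time already spent inside the tunnel
    remaining_min: float  # predicted time still needed to finish

def can_admit(predicted_cycle_min, added_delay_min, limit_min, lots_in_tunnel):
    """Admit a new lot only if it, and every lot already in the tunnel,
    is still predicted to finish within the time limit."""
    if predicted_cycle_min > limit_min:
        return False
    for lot in lots_in_tunnel:
        projected = lot.elapsed_min + lot.remaining_min + added_delay_min
        if projected > limit_min:
            return False
    return True

in_tunnel = [TunnelLot("A", 30, 60), TunnelLot("B", 70, 35)]
print(can_admit(100, 10, 120, in_tunnel))  # True: A projects to 100, B to 115
print(can_admit(100, 20, 120, in_tunnel))  # False: B would project to 125
```

The quality of the decision depends entirely on the cycle time predictions fed into the gate, which is why prediction accuracy matters so much for these sequences.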

The GlobalFoundries group demonstrated the effectiveness of neural network methods for time constraint tunnel dispatching. The relationship between input parameters and cycle time is complex and non-linear. As discussed above, machine learning methods are especially useful in situations like this, where statistical data is available but exact modeling is difficult. Compared to an existing linear regression model, cycle time predictions from two different artificial neural network models achieved comparable average errors, but substantially better root mean squared error. The linear regression model gave worse results in high-cycle-time scenarios, precisely the situations where accurate predictions are most important.
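
The gap between average error and root mean squared error matters because RMSE penalizes large misses more heavily. The sketch below uses made-up numbers, not data from the GlobalFoundries study, and assumes "average error" means mean absolute error. Two hypothetical models have the same average error, but the one that misses badly on the single high-cycle-time lot has twice the RMSE.

```python
import math

def mae(actual, predicted):
    """Mean absolute error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)
    )

# Hypothetical cycle times in hours; the last lot is a high-cycle-time case.
actual = [10.0, 12.0, 11.0, 30.0]
model_a = [10.0, 12.0, 11.0, 22.0]  # exact on typical lots, 8 h off on the slow one
model_b = [12.0, 10.0, 13.0, 28.0]  # 2 h off on every lot

print(mae(actual, model_a), rmse(actual, model_a))  # 2.0 4.0
print(mae(actual, model_b), rmse(actual, model_b))  # 2.0 2.0
```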

Here, again, definition of objectives by a qualified human is critical. A dispatch system that only sent one lot into the tunnel at a time would probably never violate the time constraint, but would also force the stepper to sit idle for extended periods.

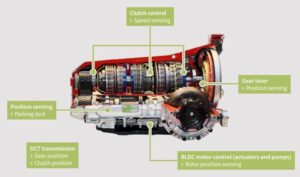

Cluster tools are another case in which accurate local scheduling can have substantial benefits. Cluster tools that integrate up to eight process chambers pose many of the same problems that overall fab scheduling does, but on a smaller scale. Each lot may have a different recipe and take a different path through the tool. The availability of individual chambers changes. There is no buffer inside the tool, but the run-to-run process control system might increase or decrease the residence time in a given chamber. A vacuum pump might take more or less time to establish the desired process conditions. And a real-time scheduling system might only have a few seconds to “think” between wafer moves.

Here, Peer Group’s Doug Suerich, director of marketing, and Trevor McIlroy, principal developer, demonstrated the usefulness of reinforcement learning methods. Reinforcement learning works by having an agent propose solutions to a simulated environment, which are rewarded or penalized based on the results. While initially random, the solutions improve over time.
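
The reward-and-penalty loop can be shown with an epsilon-greedy bandit, about the simplest form of reinforcement learning. This is not the Peer Group implementation: the three "dispatch rules," their mean rewards, and the toy `simulate` environment are all invented for illustration.

```python
import random

# Hypothetical mean reward (negative cycle time) for three made-up dispatch rules.
TRUE_MEAN_REWARD = [-12.0, -9.0, -15.0]

def simulate(action, rng):
    """Toy simulated environment: a noisy reward for the chosen rule."""
    return TRUE_MEAN_REWARD[action] + rng.uniform(-1.0, 1.0)

def train(episodes=2000, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    n_actions = len(TRUE_MEAN_REWARD)
    value = [0.0] * n_actions  # running reward estimate per action
    count = [0] * n_actions
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random rule
        else:
            action = max(range(n_actions), key=value.__getitem__)  # exploit
        reward = simulate(action, rng)
        count[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        value[action] += (reward - value[action]) / count[action]
    return value

value = train()
best_rule = max(range(len(value)), key=value.__getitem__)
print(best_rule)  # 1, the rule with the least negative mean reward
```

Early choices are effectively arbitrary because every estimate starts at zero. As rewards accumulate, the agent settles on the rule that performs best in the simulation.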

The Peer Group work used reinforcement learning to generate schedules offline, which were used as a starting point for the real-time scheduler. The real-time scheduler, in turn, simulated as many potential moves as possible in the time available, first looking for a “valid” sequence that did not violate predetermined rules. Once a valid sequence was discovered, the system used any remaining time to attempt to find a better one.[3]
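
The "find a valid sequence first, then improve while time remains" pattern is often called an anytime search. The sketch below uses random shuffling as a stand-in for the real move simulator; the wafer names, the precedence rule, and the cost function are hypothetical, and the loop assumes at least one valid ordering exists.

```python
import random
import time

def anytime_search(items, is_valid, cost, budget_s=0.05, seed=0):
    """Search until a valid ordering is found, then keep looking for a
    cheaper one until the time budget runs out."""
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_s
    best, best_cost = None, float("inf")
    # Assumes a valid ordering exists; otherwise this loop never ends.
    while best is None or time.monotonic() < deadline:
        candidate = items[:]
        rng.shuffle(candidate)
        if not is_valid(candidate):
            continue  # violates a rule: discard
        c = cost(candidate)
        if c < best_cost:  # first valid sequence, or an improvement
            best, best_cost = candidate, c
    return best, best_cost

# Hypothetical wafer process times (seconds).
duration = {"W1": 3, "W2": 1, "W3": 2, "W4": 4}

def is_valid(order):
    # Made-up rule: W1 must be processed before W2.
    return order.index("W1") < order.index("W2")

def cost(order):
    # Sum of completion times; lower means less total waiting.
    elapsed, total = 0, 0
    for wafer in order:
        elapsed += duration[wafer]
        total += elapsed
    return total

best, best_cost = anytime_search(list(duration), is_valid, cost)
```

The result is always a valid ordering, and a longer budget can only improve it. Seeding the search with an offline-generated schedule, as the Peer Group work did, would give the loop a better starting point than random shuffles.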

What’s next?

Overall, machine learning tools for fab scheduling fall into two broad categories. Large, offline models may seek to find optimal fab-scale solutions, but require too much computation to act in real time. Smaller local models, on the other hand, attempt to respond to changes as they occur. As both kinds of models become more capable, more and more work is being done on ways to connect the two.

That interface might occur through the existing lot dispatch system, as in the work at Seagate Springtown. Or, as in the Peer Group project, similar models might be used with different time horizons. Benjamin Kovács and colleagues at University of Klagenfurt are developing open-source simulation tools to support benchmarking and testing of emerging solutions.[4]

References

1. S. Chakravorty and N. N. Nagarur, “Analysis of Artificial Neural Network Based Algorithms For Real Time Dispatching,” 2022 33rd Annual SEMI Advanced Semiconductor Manufacturing Conference (ASMC), Saratoga Springs, NY, USA, 2022, pp. 1-6, doi: 10.1109/ASMC54647.2022.9792495.

2. S. Elaoud, D. Xenos and T. O’Donnell, “Deploying an integrated framework of fab-wide and toolset schedulers to improve performance in a real large-scale fab,” 2023 34th Annual SEMI Advanced Semiconductor Manufacturing Conference (ASMC), Saratoga Springs, NY, USA, 2023, pp. 1-6, doi: 10.1109/ASMC57536.2023.10121116.

3. D. Suerich and T. McIlroy, “Artificial Intelligence for Real Time Cluster Tool Scheduling: EO: Equipment Optimization,” 2022 33rd Annual SEMI Advanced Semiconductor Manufacturing Conference (ASMC), Saratoga Springs, NY, USA, 2022, pp. 1-3, doi: 10.1109/ASMC54647.2022.9792523.

4. B. Kovács et al., “A Customizable Simulator for Artificial Intelligence Research to Schedule Semiconductor Fabs,” 2022 33rd Annual SEMI Advanced Semiconductor Manufacturing Conference (ASMC), Saratoga Springs, NY, USA, 2022, pp. 1-6, doi: 10.1109/ASMC54647.2022.9792520.

- Source: https://semiengineering.com/using-ml-for-improved-fab-scheduling/