Introduction

Running Large Language Models (LLMs) has always been a tedious process. One has to either download third-party software to load these LLMs, or install Python and set up an environment with many PyTorch and HuggingFace libraries. With the Pythonic approach, one also has to write code to download and run the model. This guide looks at an easier way to run these LLMs.

Learning Objectives

- Understand the challenges of traditional LLM execution

- Grasp the innovative concept of Llamafiles

- Learn to download and run your own Llamafile executables with ease

- Learn to create Llamafiles from quantized LLMs

- Identify the limitations of this approach

This article was published as a part of the Data Science Blogathon.

Problems with Large Language Models

Large Language Models (LLMs) have revolutionized how we interact with computers, generating text, translating languages, writing different kinds of creative content, and even answering your questions in an informative way. However, running these powerful models on your computer has often been challenging.

To run LLMs, we have to install Python and many AI dependencies, and on top of that, write code to download and run the models. Even installing a ready-to-use UI for Large Language Models involves many setup steps that can easily go wrong. Installing and running an LLM like an ordinary executable has not been a simple process.

What are Llamafiles?

Llamafiles are created to work easily with popular open-source large language models. These are single-file executables. It’s just like downloading an LLM and running it like an executable. There is no need for an initial installation of libraries. This is possible thanks to llama.cpp and Cosmopolitan Libc, which together let the LLMs run on different OSes.

llama.cpp was developed by Georgi Gerganov to run Large Language Models in a quantized format so that they can run on a CPU. It is a C/C++ library that lets us run quantized LLMs on consumer hardware. Cosmopolitan Libc, on the other hand, is a C library that builds a binary that can run on any OS (Windows, Mac, Ubuntu) without needing an interpreter. Llamafile is built on top of these two libraries, which lets it create single-file executable LLMs.

The available models are in the GGUF quantized format. GGUF is a file format for Large Language Models developed by Georgi Gerganov, the creator of llama.cpp. It is a format for storing, sharing, and loading Large Language Models effectively and efficiently on CPUs and GPUs. Models stored in GGUF are typically quantized, that is, compressed from their original 16-bit floating point weights to a 4-bit or 8-bit integer format, and the weights of the quantized model are stored in the GGUF file.
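To make the idea of quantization concrete, here is a minimal sketch of symmetric (absmax) quantization in plain Python. It only illustrates the general technique of mapping floating point weights to small integers plus a scale. It is not the scheme that llama.cpp or GGUF actually uses, and the function names are made up for this example.

```python
def quantize_absmax(weights, bits=8):
    """Map floats to signed integers using one shared scale (illustrative only)."""
    qmax = 2 ** (bits - 1) - 1          # 127 for 8 bits, 7 for 4 bits
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
print(q)          # small integers in the range [-127, 127]
print(restored)   # approximately the original weights
```

Each integer needs far fewer bits than a 16-bit float, at the cost of a small rounding error per weight (at most half the scale in this sketch).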

This makes it simpler for 7 Billion Parameter models to run on a computer with 16GB of RAM. We can run the Large Language Models without requiring a GPU (though Llamafile also allows us to run the LLMs on a GPU). Right now, the llamafiles of popular Open Source Large Language Models like LlaVa, Mistral, and WizardCoder are readily available to download and run.
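A quick back-of-the-envelope calculation shows why quantization matters for memory. The sketch below estimates the size of the weights alone for a 7 billion parameter model at different bit widths. It ignores file metadata, the context cache, and other runtime overhead, so real usage will be somewhat higher; the function name is just for illustration.

```python
def weight_memory_gb(num_parameters, bits_per_weight):
    """Approximate size of the weights alone, in gigabytes (10**9 bytes)."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


for bits in (16, 8, 4):
    print(f"{bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 16-bit: 14.0 GB
# 8-bit: 7.0 GB
# 4-bit: 3.5 GB
```

At 16 bits the weights alone would nearly fill a 16GB machine, while the 4-bit version leaves plenty of headroom.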

One Shot Executables

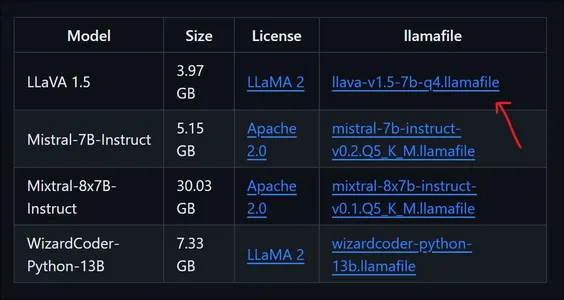

In this section, we will download and try running a multimodal LlaVa Llamafile. Here, we will not use a GPU and will run the model on the CPU. Go to the official Llamafile GitHub Repository by clicking here and download the LlaVa 1.5 Model.

Download the Model

The above picture shows all the available models with their names, sizes, and downloadable links. LlaVa 1.5 is just around 4GB and is a powerful multimodal model that can understand images. The downloaded model is a 7 Billion Parameter model that is quantized to 4 bits. After downloading the model, go to the folder where it was downloaded.

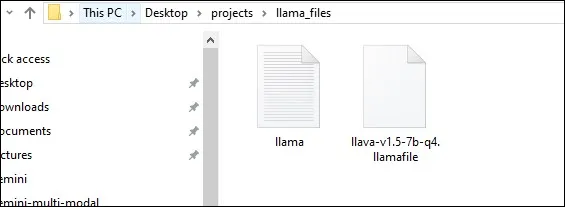

Then open the CMD, navigate to the folder where this model is downloaded, type the name of the file we downloaded, and press enter.

llava-v1.5-7b-q4.llamafile

For Mac and Linux Users

On Mac and Linux, the execution permission is off for this file by default. Hence, we have to grant the execution permission to the llamafile, which we can do by running the command below.

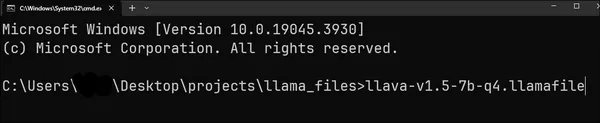

chmod +x llava-v1.5-7b-q4.llamafile

This activates the execution permission for llava-v1.5-7b-q4.llamafile. Also, add “./” before the file name to run the file on Mac and Linux. After you press Enter, the model will be loaded into the system RAM and show the following output.

Then the browser will pop up, and the model will be running at the URL http://127.0.0.1:8080/
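The permission step for Mac and Linux can also be done from Python, which may be handy when scripting downloads. The following is a rough sketch of what chmod +x does, using only the standard library; the helper name is an assumption for this example, and it is demonstrated on a throwaway temporary file rather than a real llamafile.

```python
import os
import stat
import tempfile


def make_executable(path):
    """Add execute permission for user, group and others (roughly chmod +x)."""
    current_mode = os.stat(path).st_mode
    os.chmod(path, current_mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)


# Demonstrate on a throwaway file rather than a real llamafile
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    demo_path = tmp.name
make_executable(demo_path)
print(os.access(demo_path, os.X_OK))  # whether the file is now executable
os.remove(demo_path)
```

For a downloaded model, the call would look like make_executable("llava-v1.5-7b-q4.llamafile").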

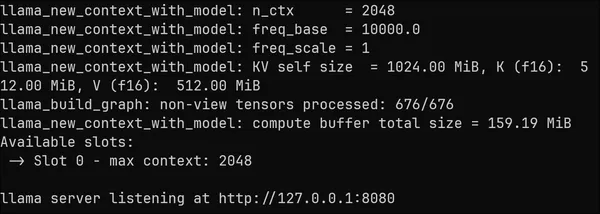

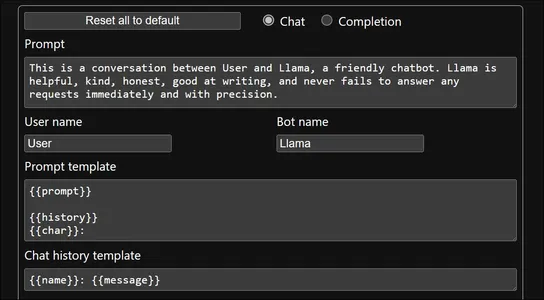

The above pic shows the default Prompt, User Name, LLM Name, Prompt Template, and Chat History Template. These can be configured, but for now, we will go with the default values.

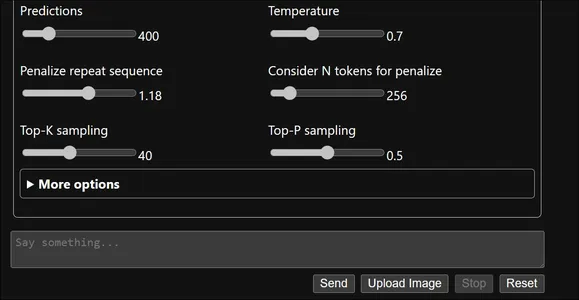

Below, we can even check the configurable LLM hyperparameters like Top P, Top K, Temperature, and others. We will leave these at their defaults for now as well. Now let’s type in something and click on send.

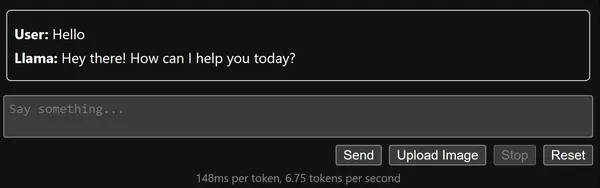

In the above pic, we can see that we have typed in a message and received a response. Below that, we can see that we are getting around 6 tokens per second, which is a good rate considering that we are running it entirely on the CPU. This time, let’s try it with an image.

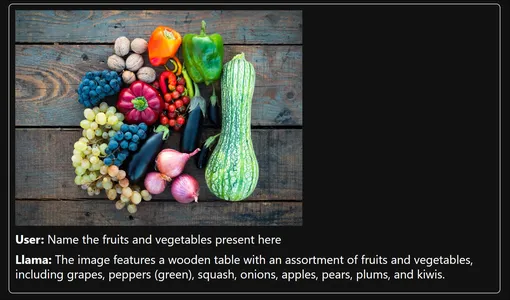

Though not 100% right, the model got most things in the image right. Now let’s have a multi-turn conversation with LlaVa to test if it remembers the chat history.

In the above pic, we can see that the LlaVa LLM was able to keep up the conversation well. It could take in the conversation history and then generate responses. Though the last answer generated is not quite true, the model drew on the previous conversation to generate it. So this way, we can download a llamafile, run it like any other software, and work with the downloaded model.

Creating Llamafiles

We have seen a demo of a Llamafile that was already available on the official GitHub. Often, we do not want to work with these models. Instead, we wish to create single-file executables of our own Large Language Models. In this section, we will go through the process of creating single-file executables, i.e., llamafiles, from quantized LLMs.

Select an LLM

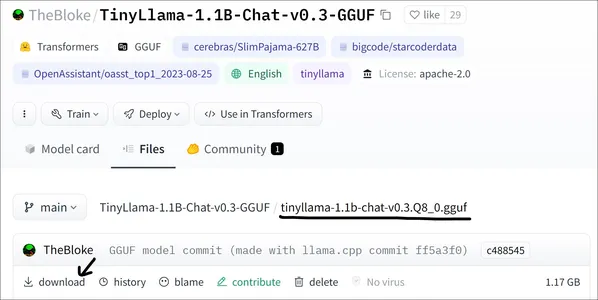

We will start by selecting a Large Language Model. For this demo, we will select a quantized version of TinyLlama. Here, we will be downloading the 8-bit quantized GGUF model of TinyLlama (you can click here to go to HuggingFace and download the model).

Download the Latest Llamafile

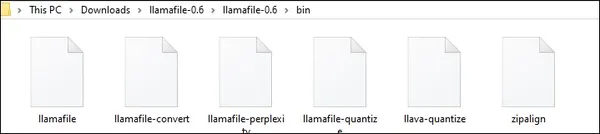

Download the latest llamafile zip from the official GitHub link and extract it. The version used in this article is llamafile-0.6. After extracting, the bin folder within the llamafile folder will contain files like those in the picture below.

Now move the downloaded TinyLlama 8-bit quantized model to this bin folder. To create the single-file executables, we need to create a .args file in the bin folder of llamafile. To this file, we need to add the following content:

-m

tinyllama-1.1b-chat-v0.3.Q8_0.gguf

--host

0.0.0.0

...

- The first line indicates the -m flag. This tells the llamafile that we are loading in the weights of a model.

- In the second line, we specify the model name that we have downloaded, which is present in the same directory in which the .args file is present, i.e., the bin folder of the llamafile.

- In the third line, we add the --host flag, indicating that when we run the executable, we want to host it on a web server.

- Finally, in the last line, we mention the address where we want to host, 0.0.0.0, which listens on all network interfaces and is therefore also reachable at localhost. Following this are the three dots, which specify that we can pass further arguments to our llamafile once it’s created.

- Add these lines to the .args file and save it.
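For those who prefer to script this step, the .args file described above could be written with a few lines of Python. This is only a sketch: the helper name and its parameters are assumptions for illustration, and it writes exactly the five lines listed above, one argument per line.

```python
import tempfile
from pathlib import Path


def write_args_file(bin_dir, model_filename, host="0.0.0.0"):
    """Write a .args file with one argument per line, ending with '...'."""
    lines = ["-m", model_filename, "--host", host, "..."]
    args_path = Path(bin_dir) / ".args"
    args_path.write_text("\n".join(lines) + "\n")
    return args_path


# Demonstrate in a temporary directory instead of the real bin folder
with tempfile.TemporaryDirectory() as demo_dir:
    path = write_args_file(demo_dir, "tinyllama-1.1b-chat-v0.3.Q8_0.gguf")
    print(path.read_text())
```

In practice, bin_dir would be the bin folder of the extracted llamafile zip.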

For Windows Users

Now, the next step is for Windows users. If working on Windows, we need to have Linux installed via WSL. If not, click here to go through the steps of installing Linux through WSL. On Mac and Linux, no additional steps are required. Now open the bin folder of the llamafile folder in the terminal (if working on Windows, open this directory in WSL) and type in the following commands.

cp llamafile tinyllama-1.1b-chat-v0.3.Q8_0.llamafile

Here, we are creating a new file called tinyllama-1.1b-chat-v0.3.Q8_0.llamafile; that is, we are creating a file with the .llamafile extension and copying the contents of the llamafile file into it. Following this, we will type in the next command.

./zipalign -j0 tinyllama-1.1b-chat-v0.3.Q8_0.llamafile tinyllama-1.1b-chat-v0.3.Q8_0.gguf .args

Here we work with the zipalign file that came with the llamafile zip we downloaded from GitHub. We use this command to create the llamafile for our quantized TinyLlama. To this zipalign command, we pass the tinyllama-1.1b-chat-v0.3.Q8_0.llamafile that we created in the previous step, then the tinyllama-1.1b-chat-v0.3.Q8_0.gguf model that we have in the bin folder, and finally the .args file that we created earlier.

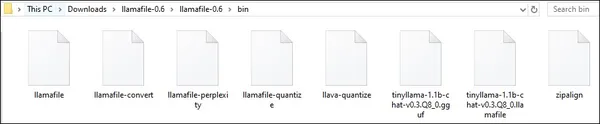

This will finally produce our single file executable tinyllama-1.1b-chat-v0.3.Q8_0.llamafile. To ensure we are on the same page, the bin folder now contains the following files.

Now, we can run tinyllama-1.1b-chat-v0.3.Q8_0.llamafile the same way we did before. On Windows, you can even rename the .llamafile to .exe and run it by double-clicking it.
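The two build commands from this section (the cp and the zipalign call) could be wrapped in a small Python script. The sketch below assumes the same file layout as above, with llamafile, zipalign, the GGUF model, and .args all in the bin folder. The function names are hypothetical, and only the planning helper is executed here, so nothing is copied or built when the snippet runs.

```python
import shutil
import subprocess
from pathlib import Path


def plan_build(gguf_name):
    """Return the output file name and the zipalign command used in this section."""
    output_name = str(Path(gguf_name).with_suffix(".llamafile"))
    command = ["./zipalign", "-j0", output_name, gguf_name, ".args"]
    return output_name, command


def build_llamafile(bin_dir, gguf_name):
    """Copy the llamafile runtime, then embed the weights and .args into the copy."""
    output_name, command = plan_build(gguf_name)
    shutil.copy(Path(bin_dir) / "llamafile", Path(bin_dir) / output_name)
    subprocess.run(command, cwd=bin_dir, check=True)  # run from inside the bin folder
    return Path(bin_dir) / output_name


name, cmd = plan_build("tinyllama-1.1b-chat-v0.3.Q8_0.gguf")
print(name)           # tinyllama-1.1b-chat-v0.3.Q8_0.llamafile
print(" ".join(cmd))
```

Calling build_llamafile with the path to the bin folder would then perform both steps in order.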

OpenAI Compatible Server

This section will look into how to serve LLMs through Llamafile. We have noticed that when we run the llamafile, the browser opens, and we can interact with the LLM through the WebUI. This is basically what we call hosting the Large Language Model.

Once we run the Llamafile, we can interact with the respective LLM as an endpoint because the model is being served on localhost at port 8080. The server follows the OpenAI API protocol, i.e., it is similar to the OpenAI GPT endpoint, which makes it easy to switch between an OpenAI GPT model and the LLM running with Llamafile.

Here, we will run the previously created TinyLlama llamafile. It must now be running on localhost at port 8080. We will test it through the OpenAI Python library.

from openai import OpenAI

# Point the client at the local llamafile server instead of OpenAI
client = OpenAI(
    base_url="http://localhost:8080/v1",
    api_key="sk-no-key-required",  # placeholder; the local server needs no real key
)

completion = client.chat.completions.create(
    model="TinyLlama",
    messages=[
        {"role": "system", "content": "You are a useful AI Assistant who helps answer user questions"},
        {"role": "user", "content": "Distance between earth to moon?"},
    ],
)

# Print the text of the first generated reply
print(completion.choices[0].message.content)

- Here, we work with the OpenAI library. But instead of specifying the OpenAI endpoint, we specify the URL where our TinyLlama is hosted and give “sk-no-key-required” for the api_key.

- Then, the client will get connected to our TinyLlama endpoint

- Now, similar to how we work with OpenAI, we can use the code to chat with our TinyLlama.

- For this, we work with the chat.completions interface of the OpenAI client. We create a new completion with the .create() method and pass details like the model name and the messages.

- The messages are in the form of a list of dictionaries, where we have the role, which can be system, user, or assistant, and we have the content.

- Finally, we can retrieve the information generated through the above print statement.

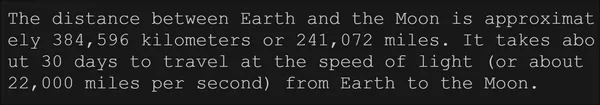

The output for the above can be seen below.

This way, we can leverage the llamafiles and replace the OpenAI API easily with the llamafile that we chose to run.
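Because the server speaks an OpenAI-style HTTP API, the same request can in principle be sent without the openai package at all. The sketch below builds such a request with the standard library only. The /chat/completions path follows the OpenAI API convention under the base URL used above, the temperature value is an arbitrary choice for illustration, and the function names are made up. Only the request is built here; the ask helper would need a llamafile server running locally.

```python
import json
import urllib.request


def build_chat_request(user_message, base_url="http://localhost:8080/v1",
                       model="TinyLlama", temperature=0.7):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "temperature": temperature,  # assumed value, purely illustrative
        "messages": [
            {"role": "system", "content": "You are a useful AI assistant."},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": "Bearer sk-no-key-required"},
        method="POST",
    )


def ask(user_message):
    """Send the request; requires a llamafile server to be running locally."""
    with urllib.request.urlopen(build_chat_request(user_message)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request("Distance between earth to moon?")
print(request.full_url)      # http://localhost:8080/v1/chat/completions
print(request.get_method())  # POST
```

With the TinyLlama llamafile running, ask("Distance between earth to moon?") would return the generated reply as a string.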

Llamafiles Limitations

While revolutionary, llamafiles are still under development. Some limitations include:

- Limited model selection: Not all LLMs are available as llamafiles, although the selection of pre-built llamafiles is still growing. Currently, llamafiles are available for Llama 2, LlaVa, Mistral, and WizardCoder.

- Hardware requirements: Running LLMs, even through Llamafiles, still requires substantial computational resources. While they are easier to run than with traditional methods, older or less powerful computers may struggle to run them smoothly.

- Security concerns: Downloading and running executables from untrusted sources carries inherent risks. So there must be a trustworthy platform where we can download these llamafiles.

Llamafiles vs the Rest

Before Llamafiles, there were different ways to run Large Language Models. One was through the llama_cpp_python. This is the Python version of llama.cpp that lets us run quantized Large Language Models on consumer hardware like Laptops and Desktop PCs. But to run it, we must download and install Python and even deep learning libraries like torch, huggingface, transformers, and many more. And after that, it involved writing many lines of code to run the model.

Even then, we may sometimes face issues due to dependency problems (that is, some libraries have lower or higher versions than necessary). There is also the CTransformers library that lets us run quantized LLMs. Even this requires the same process that we have discussed for llama_cpp_python.

And then, there is Ollama. Ollama has been highly successful in the AI community for how easily it loads and runs Large Language Models, especially the quantized ones. Ollama is a kind of TUI (Terminal User Interface) for LLMs. The only difference between Ollama and Llamafile is shareability. That is, if I want to, I can share my model.llamafile with anyone and they can run it without downloading any additional software. But in the case of Ollama, I need to share the model.gguf file, which the other person can run only after they install the Ollama software or through the above Python libraries.

Regarding the resources, all of them require the same amount of resources because all these methods use the llama.cpp underneath to run the quantized models. It’s only about the ease of use where there are differences between these.

Conclusion

Llamafiles mark a crucial step forward in making LLMs readily runnable. Their ease of use and portability open up a world of possibilities for developers, researchers, and casual users. While there are limitations, the potential of llamafiles to democratize LLM access is apparent. Whether you’re an expert developer or a curious novice, Llamafiles open exciting possibilities for exploring the world of LLMs.

In this guide, we have taken a look at how to download Llamafiles and even how to create our very own Llamafiles with our quantized models. We have also taken a look at the OpenAI-compatible server that is created when running Llamafiles.

Key Takeaways

- Llamafiles are single-file executables that make running large language models (LLMs) easier and more readily available.

- They eliminate the need for complex setups and configurations, allowing users to download and run LLMs directly without Python or GPU requirements.

- Llamafiles are currently available for a limited selection of open-source LLMs, including LlaVa, Mistral, and WizardCoder.

- While convenient, Llamafiles still have limitations, like the hardware requirements and security concerns associated with downloading executables from untrusted sources.

- Despite these limitations, Llamafiles represent an important step towards democratizing LLM access for developers, researchers, and even casual users.

Frequently Asked Questions

A. Llamafiles provide several advantages over traditional LLM configuration methods. They make LLMs easier and faster to set up and execute because you don’t need to install Python or have a GPU. This makes LLMs more readily available to a wider audience. Additionally, Llamafiles can run across different operating systems.

A. While Llamafiles provide many benefits, they also have some limitations. The selection of LLMs available in Llamafiles is limited compared to traditional methods. Additionally, running LLMs through Llamafiles still requires a good amount of hardware resources, and older or less powerful computers may not support it. Finally, security concerns are associated with downloading and running executables from untrusted sources.

A. To get started with Llamafiles, you can visit the official Llamafile GitHub Repository. There, you can download the Llamafile for the LLM model you want to use. Once you have downloaded the file, you can run it directly like an executable.

A. No. Currently, Llamafiles only supports specific pre-built models. Creating our very own Llamafiles is planned for future versions.

A. The developers of Llamafiles are working to expand the selection of available LLM models, run them more efficiently, and implement security measures. These advancements aim to make Llamafiles even more available and secure for more people who have little technical background.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

- Source: https://www.analyticsvidhya.com/blog/2024/01/using-llamafiles-to-simplify-llm-execution/
