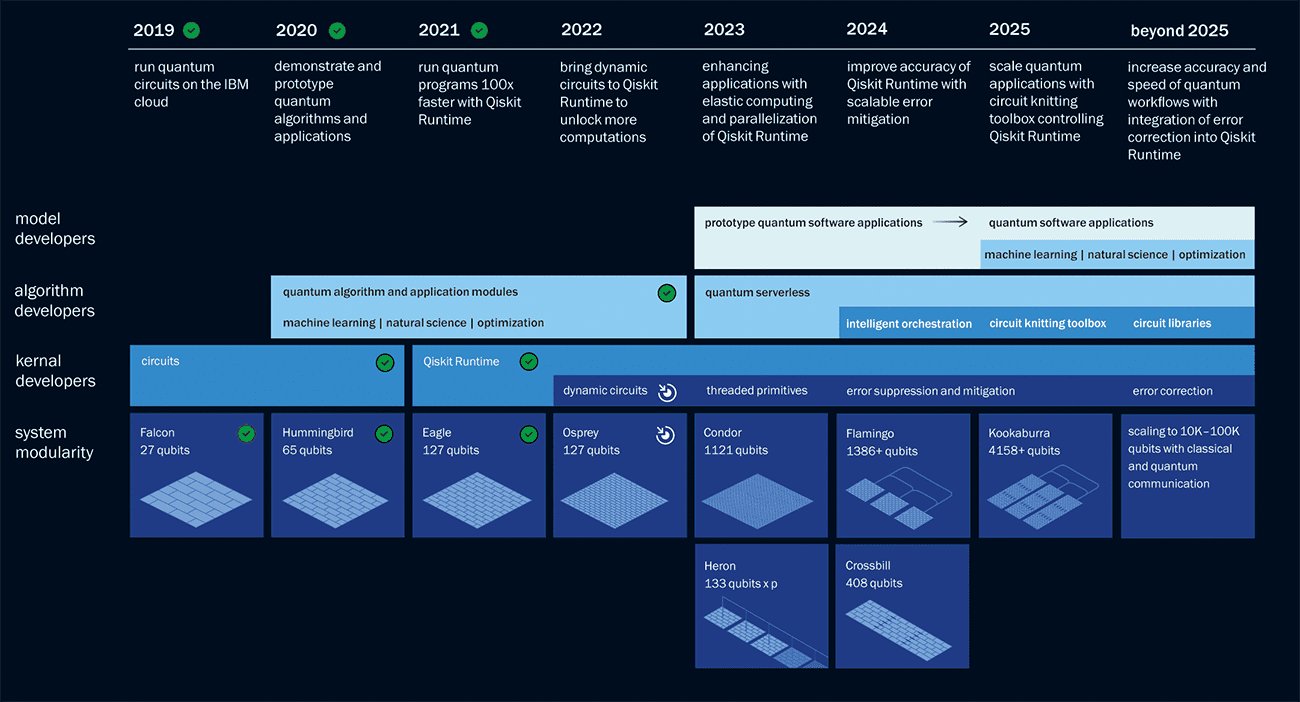

Vice-president of IBM Quantum Jay Gambetta talks to Philip Ball about the company’s many quantum advances over the last 20 years, as well as its recently announced five-year roadmap to “quantum advantage”

Companies and research labs across the globe are working towards getting their nascent quantum technologies out of the lab and into the real world, with the US technology giant IBM being a key player. In May this year, IBM Quantum unveiled its latest roadmap for the future of quantum computing in the coming decade, and the firm has set some ambitious targets. Having announced its Eagle processor with 127 quantum bits (qubits) last year, the company is now developing the 433-qubit Osprey processor for a debut later this year, to be followed in 2023 by the 1121-qubit Condor.

But beyond that, the company says, the game will switch to assembling such processors into modular circuits, in which the chips are wired together via sparser quantum or classical interconnections. That effort will culminate in what they refer to as their 4158-qubit Kookaburra device in 2025. Beyond then, IBM forecasts modular processors with 100,000 or more qubits, capable of computing without the errors that currently make quantum computing a matter of finding workarounds for the noisiness of the qubits. With this approach, the company’s quantum computing team is confident that it can achieve a general “quantum advantage”, where quantum computers will consistently outperform classical computers and conduct complex computations beyond the means of classical devices.

While he was in London on his way to the 28th Solvay conference in Brussels, which tackled quantum information, Physics World caught up with physicist Jay Gambetta, vice-president of IBM Quantum. Having spearheaded many of the company’s advances over the past two decades, Gambetta explained how these goals might be reached and what they will entail for the future of quantum computing.

What is the current state of the art at IBM Quantum? What are some of the key parameters you are focusing on?

The IBM roadmap is about scaling up – not just the number of qubits but their speed, quality and circuit architecture. We now have coherence times [the duration for which the qubits stay coherent and capable of performing a quantum computation] of 300 microseconds in the Eagle processor [compared with about 1 μs in 2010], and the next generation of devices will reach 300 milliseconds. And our qubits [made from superconducting metals] now have almost 99.9% fidelity [they incur only one error every 1000 operations – an error rate of 10⁻³]. I think 99.99% wouldn’t be impossible by the end of next year.

But doing things intelligently is going to become more important than just pushing the raw metrics. The processor architecture is getting increasingly important. I don’t think we’ll get much past 1000 qubits per chip [as on the Condor], so now we’re looking at modularity. This way, we can get to processors of 10,000 qubits by the end of this decade. We’re going to use both classical communication between chips (to control the electronics) and quantum channels that create some entanglement between them (to perform computation). These between-chip channels are going to be slow – maybe 100 times slower than the circuits themselves. And the fidelities of the channels will be hard to push above 95%.
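The link figures quoted here can be set against the roughly 99.9% on-chip fidelity from the previous answer. The sketch below assumes errors are independent, so fidelities simply multiply, and measures time in units of one on-chip gate layer; both are simplifications, and the helper names are hypothetical.

```python
import math

ON_CHIP_FIDELITY = 0.999   # ~99.9% fidelity quoted for current qubits
LINK_FIDELITY = 0.95       # upper range quoted for between-chip quantum channels
LINK_SLOWDOWN = 100        # links assumed ~100x slower than on-chip operations


def gate_equivalents(link_fidelity=LINK_FIDELITY, gate_fidelity=ON_CHIP_FIDELITY):
    """Number of on-chip gates whose combined error matches one link (independent errors)."""
    return math.log(link_fidelity) / math.log(gate_fidelity)


def circuit_fidelity(num_gates, num_links):
    """Crude estimate: product of every gate and link fidelity."""
    return ON_CHIP_FIDELITY ** num_gates * LINK_FIDELITY ** num_links


def circuit_time(num_layers, num_link_layers):
    """Duration in units of one on-chip gate layer."""
    return num_layers + LINK_SLOWDOWN * num_link_layers


if __name__ == "__main__":
    print(f"one link ~ {gate_equivalents():.1f} on-chip gates in fidelity")
    print(f"500 gates + 4 links: fidelity {circuit_fidelity(500, 4):.3f}")
    print(f"50 layers + 2 link layers: time {circuit_time(50, 2)} gate layers")
```

Under these assumptions a single link costs about as much fidelity as 51 on-chip gates and as much time as 100 gate layers, which is consistent with keeping the interconnections sparse.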

For high-performance computing, what really matters is minimizing the runtime – that is, minimizing the time it takes to generate a solution for a problem of interest. The ultimate litmus test for the maturity of quantum computers is whether quantum runtime can be competitive with classical runtime. We have started to show theoretically that if you have a large circuit you want to run, and you divide it up into smaller circuits, then every time you make a cut, you can think of it as incurring a classical cost, so the runtime increases exponentially with the number of cuts. The goal, then, is to keep the base of that exponential as close to 1 as possible.
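The exponential cost of cutting can be illustrated with the standard quasi-probability picture, in which each cut multiplies the sampling cost by a fixed factor. In this sketch that factor is a parameter, `gamma_per_cut`, whose default of 3 is an assumed illustrative value rather than a figure from the interview; the shot count is taken to scale as the square of the accumulated factor, as it does for quasi-probability sampling at fixed precision.

```python
def cutting_shot_multiplier(num_cuts, gamma_per_cut=3.0):
    """Extra shots needed after num_cuts cuts: (gamma_per_cut ** num_cuts) ** 2.

    gamma_per_cut is an assumed illustrative value; the true factor depends on
    what is cut and on whether the pieces may exchange classical information.
    """
    return (gamma_per_cut ** num_cuts) ** 2


if __name__ == "__main__":
    # The closer the per-cut factor is to 1, the gentler the exponential growth.
    for gamma in (3.0, 1.5, 1.1):
        row = ", ".join(f"{cutting_shot_multiplier(k, gamma):.3g}" for k in (1, 5, 10))
        print(f"gamma per cut = {gamma}: shot multiplier for 1, 5, 10 cuts = {row}")
```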

For a given circuit, the runtime scales exponentially, as a parameter we call γ̄ raised to the power nd, where n is the number of qubits and d is the depth [a measure of the longest path between the circuit’s input and output, or equivalently the number of time steps needed for the circuit to run]. So if we can get γ̄ as close to 1 as possible, we get to a point where there’s real quantum advantage: no exponential growth in runtime. We can reduce γ̄ through improvements in coherence and gate fidelity [a measure of the intrinsic error rate]. Eventually we’ll hit a tipping point where, even with the exponential overhead of error mitigation, we can reap runtime benefits over classical computers. If you can get γ̄ down to 1.001, the runtime is faster than if you were to simulate those circuits classically. I’m confident we can do this – with improvements in gate fidelity and suppressed crosstalk between qubits, we’ve already measured a γ̄ of 1.008 on the Falcon r10 [27-qubit] chip.
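To get a feel for the numbers, this sketch evaluates the overhead γ̄^nd for the two values quoted here (1.008 measured, 1.001 targeted) and inverts it to find the largest circuit "volume" nd that fits within a fixed overhead budget. The circuit size (100 qubits, depth 100) and the budget of 10⁶ are arbitrary illustrative choices, and the formula is taken at face value, without the constant prefactors a real runtime model would include.

```python
import math


def overhead(gamma_bar, n, d):
    """Runtime multiplier gamma_bar ** (n * d), taken at face value from the interview."""
    return gamma_bar ** (n * d)


def max_volume(gamma_bar, budget):
    """Largest n * d whose overhead stays within `budget` (a hypothetical allowance)."""
    return math.floor(math.log(budget) / math.log(gamma_bar))


if __name__ == "__main__":
    for gamma_bar in (1.008, 1.001):
        print(
            f"gamma_bar = {gamma_bar}: "
            f"overhead for n = d = 100 is {overhead(gamma_bar, 100, 100):.3g}, "
            f"largest n*d within a 1e6 budget is {max_volume(gamma_bar, 1e6)}"
        )
```

Under these assumptions, lowering γ̄ from 1.008 to 1.001 shrinks the overhead of a 100 × 100 circuit from about 10³⁴ to about 2 × 10⁴ and raises the affordable nd roughly eightfold, which is why small gains in γ̄ matter so much.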

How can you make those improvements for error mitigation?

To improve fidelity, we’ve taken an approach called probabilistic error cancellation [arXiv:2201.09866]. The idea is that you send me workloads and I will send you processed results with noise-free estimates of them. You say I want you to run this circuit; I characterize all the noise I have in my system, and I make many runs and then process all those results together to give you a noise-free estimate of the circuit output. This way, we are starting to show that there is likely to be a continuum from where we are today with error suppression and error mitigation to full error correction.
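Probabilistic error cancellation can be illustrated on a single qubit. This sketch assumes a depolarizing noise model with a known error probability p on an otherwise idle gate, writes the inverse of that noise as a signed ("quasi-probability") mixture of Pauli operations, samples corrections from it and reweights each shot so that the average reproduces the noise-free expectation value. It is a textbook toy with hypothetical helper names, not the method of arXiv:2201.09866, which learns a sparse Pauli–Lindblad noise model across many qubits. The sum of the absolute weights, γ, is loosely the single-gate analogue of the γ̄ overhead.

```python
import random

PAULIS = ("I", "X", "Y", "Z")


def noise_probs(p):
    """Assumed noise model: single-qubit depolarizing channel with total error probability p."""
    return {"I": 1 - p, "X": p / 3, "Y": p / 3, "Z": p / 3}


def inverse_quasi_probs(p):
    """Signed weights q_P such that sum_P q_P * (P rho P) inverts the channel above."""
    f = 1 - 4 * p / 3      # the noise shrinks <X>, <Y> and <Z> by this factor
    b = (1 - 1 / f) / 4    # weight on each of X, Y, Z (negative for p > 0)
    a = 1 - 3 * b          # weight on I, fixed by trace preservation
    return {"I": a, "X": b, "Y": b, "Z": b}


def sampling_overhead(p):
    """gamma = sum |q_P|; shots needed for fixed precision grow roughly as gamma ** 2."""
    return sum(abs(w) for w in inverse_quasi_probs(p).values())


def flips(pauli):
    """X and Y flip a Z-basis outcome; I and Z do not."""
    return pauli in ("X", "Y")


def noisy_shot(p, rng):
    """Prepare |0>, apply a noisy identity gate, measure Z; return +1 or -1."""
    w = noise_probs(p)
    error = rng.choices(PAULIS, weights=[w[P] for P in PAULIS])[0]
    return -1 if flips(error) else 1


def pec_shot(p, rng):
    """Noisy shot plus a correction Pauli drawn with probability |q_P| / gamma, then reweighted."""
    q = inverse_quasi_probs(p)
    correction = rng.choices(PAULIS, weights=[abs(q[P]) for P in PAULIS])[0]
    outcome = noisy_shot(p, rng)
    if flips(correction):
        outcome = -outcome
    sign = 1 if q[correction] >= 0 else -1
    return sampling_overhead(p) * sign * outcome


def estimate(shot, p, shots=100_000, seed=1):
    """Average many shots to estimate <Z>."""
    rng = random.Random(seed)
    return sum(shot(p, rng) for _ in range(shots)) / shots


if __name__ == "__main__":
    p = 0.1
    print("noisy <Z>       :", estimate(noisy_shot, p))
    print("PEC estimate    :", estimate(pec_shot, p))
    print("gamma (per gate):", sampling_overhead(p))
```

With p = 0.1 the noisy estimate settles near 1 − 4p/3 ≈ 0.87, the PEC estimate near the ideal value of 1, and γ = 16/13 ≈ 1.23; the price is a larger spread in individual shots, which is why many more runs are needed.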

So you can get there without building fully error-correcting logical qubits?

What is a logical qubit really? What do people actually mean by that? What really matters is: can you run logical circuits, and how do you run them in a way that the runtime is always getting faster? Rather than thinking about building logical qubits, we’re thinking about how we run circuits and give users estimates of the answer, and then quantifying it by the runtime.

When you do normal error correction, you correct what you thought the answer would have been up to that point. You update a reference frame. But we will achieve error correction via error mitigation. With γ̄ equal to 1, I’ll effectively have error correction, because there’s no overhead to improving the estimates as much as you like.
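The "reference frame" mentioned here most likely refers to Pauli-frame tracking: rather than physically applying each correction, the control software records it and propagates it through subsequent Clifford gates, fixing up measurement results at the end. This is a minimal textbook illustration under that reading, limited to Hadamard and CNOT gates; the class and method names are hypothetical and not part of any IBM software.

```python
class PauliFrame:
    """Software record of pending Pauli corrections (hypothetical helper, not an IBM API)."""

    def __init__(self, num_qubits):
        self.x = [0] * num_qubits  # 1 if an X-type correction is pending on the qubit
        self.z = [0] * num_qubits  # 1 if a Z-type correction is pending on the qubit

    def record(self, pauli, q):
        """Log a correction instead of applying it (Y counts as X and Z, up to phase)."""
        if pauli in ("X", "Y"):
            self.x[q] ^= 1
        if pauli in ("Z", "Y"):
            self.z[q] ^= 1

    def h(self, q):
        """A Hadamard swaps X-type and Z-type corrections."""
        self.x[q], self.z[q] = self.z[q], self.x[q]

    def cnot(self, control, target):
        """X on the control spreads to the target; Z on the target spreads to the control."""
        self.x[target] ^= self.x[control]
        self.z[control] ^= self.z[target]

    def measure_z(self, q, raw_bit):
        """Reinterpret a Z-basis result: a pending X-type correction flips it."""
        return raw_bit ^ self.x[q]


if __name__ == "__main__":
    frame = PauliFrame(2)
    frame.record("X", 0)   # a decoder says qubit 0 needs an X correction
    frame.cnot(0, 1)       # the correction now affects both qubits
    print(frame.measure_z(0, 0), frame.measure_z(1, 0))
```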

This way, we will effectively have logical qubits, but they’ll get inserted continuously. So we are starting to think of it at a higher level. Our view is to create, from a user perspective, a continuum that just gets faster and faster. The ultimate litmus test for the maturity of quantum computers, then, is whether quantum runtime can be competitive with classical runtime.

That’s very different from what other quantum firms are doing, but I will be very surprised if this doesn’t become the general view – I bet you’ll start to see people comparing runtimes, not error correction rates.

If you make modular devices with classical connections, does that mean the future is not really quantum versus classical, but quantum and classical?

Yes. Bringing classical and quantum together will allow you to do more. That’s what I call quantum surplus: doing classical computing in a smart way using quantum resources.

If I could wave a magic wand, I would not call it quantum computing. I would go back and say really what we’re doing is just computing in general, and we’re giving it a boost through a quantum processor. I’ve been using the catchphrase “quantum-centric supercomputing”. It’s really about stepping up computing by adding quantum to it. I really think this will be the architecture.

What are the technical obstacles? Does it matter that these devices need cryogenic cooling, say?

That’s not really a big deal. A bigger deal is that if we continue on our roadmap, I’m worried about the price of the electronics and all the things that go around it. To bring down these costs, we need to develop an ecosystem; and we as a community are still not doing enough to create that environment. I don’t see many people focusing on just the electronics, but I think it will happen.

Is all the science now done, so that it’s now more a matter of engineering?

There will always be science to do, especially as you chart this path from error mitigation to error correction. What type of connectivity do you want to build into the chip? What are the connections? These are all fundamental science questions. I think we can still push error rates down to 10⁻⁵. Personally, I don’t like to label things “science” or “technology”; we’re building an innovation. I think there is definitely a transition to these devices becoming tools, and the question becomes how we use these things for science, rather than about the science of creating the tool.

Are you worried there might be a quantum bubble?

No. I think quantum advantage can be broken into two things. First, how do you actually run circuits faster on quantum hardware? I’m confident I can make predictions about that. And second, how do you actually use these circuits, and relate them to applications? Why does a quantum-based method work better than just a classical method alone? Those are very hard science questions. And they’re questions that high-energy physicists, materials scientists and quantum chemists are all interested in. I think there’s definitely going to be a demand – we already see it. We’re seeing some business enterprises getting interested too, but it’s going to take a while to find real solutions, rather than quantum being a tool for doing science.

I see this as being a smooth transition. One big potential area of application is problems that have data with some type of structure, especially data for which it’s very hard to find the correlations classically. Finance and medicine both face problems like that, and quantum methods such as quantum machine learning are very good at finding correlations. It’s going to be a long road, but it’s worth the investment for them to do it.

What about keeping the computation secure against, say, attacks like Shor’s factoring algorithm, which harnesses quantum methods to crack current public-key cryptography based on factorization?

Everyone wants to be secure against Shor’s algorithm – it’s now being called “quantum-safe”. We’ve got a lot of fundamental research into the algorithms, but how to build it in is going to become an important question. We’re investigating building this into our products all along, rather than as an add-on. And we need to ask how we make sure we have the classical infrastructure that is safe for quantum. How that future plays out is going to be very important over the next few years – how you build quantum-safe hardware from the ground up.

Have you been surprised at the speed at which quantum computing has arrived?

For someone who’s been in it as deep as me since 2000, it’s followed remarkably close to the path that was predicted. I remember going back to an internal IBM roadmap from 2011 and it was pretty spot on. I thought I was making things up then! In general, I feel as though people are overestimating how long it will take. As we get more and more advanced, and people bring quantum information ideas to these devices, in the next few years we’ll be able to run larger circuits. Then it will be about what types of architecture you need to build, how big the clusters are, what types of communication channels you use, and so on. These questions will be driven by the kind of circuits you’re running: how do we start building machines for certain types of circuits? There will be a specialization of circuits.

What will 2030 look like for quantum computing?

My definition of success is when most users won’t even know they’re using a quantum computer, because it’s built into an architecture that works seamlessly with classical computing. The measure of success would then be that it’s invisible to most people using it, but it enhances their life in some way. Maybe your mobile phone will use an app that does its estimation using a quantum computer. In 2030 we’re not going to be at that level but I think we’ll have very large machines by then and they’ll be well beyond what we can do classically.