Key Takeaways

- Thought Propagation (TP) is a novel method that enhances the complex reasoning abilities of Large Language Models (LLMs).

- TP leverages analogous problems and their solutions to improve reasoning, rather than making LLMs reason from scratch.

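- Experiments across various tasks show TP substantially outperforms baseline methods, with improvements ranging from 12% to 15% (a 12% absolute increase in finding optimal solutions for shortest-path reasoning, a 13% improvement in human preference for creative writing, and a 15% increase in task completion rate for LLM-agent planning).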

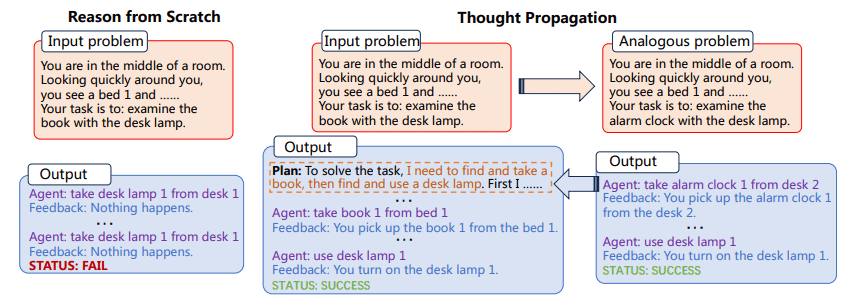

TP first prompts LLMs to propose and solve a set of analogous problems related to the input problem. Then, TP reuses the results of these analogous problems either to directly yield a new solution or to derive a knowledge-intensive plan for execution that amends the initial solution obtained from scratch.

The versatility and computational power of Large Language Models (LLMs) are undeniable, yet they are not without limits. One of the most significant and consistent challenges for LLMs is their general approach to problem-solving: reasoning from first principles for every new task encountered. While this allows for a high degree of adaptability, it also increases the likelihood of errors, particularly in tasks that require multi-step reasoning.

The challenge of "reasoning from scratch" is especially pronounced in complex tasks that demand multiple steps of logic and inference. For example, if an LLM is asked to find the shortest path in a network of interconnected points, it typically does not leverage prior knowledge or analogous problems. Instead, it attempts to solve the problem in isolation, which can lead to suboptimal results or outright errors.

Enter Thought Propagation (TP), a method designed to augment the reasoning capabilities of LLMs. Rather than leaving the model to reason in isolation, TP prompts it to propose and solve problems analogous to the input, then draw on those solutions. This approach improves the accuracy of LLM-generated solutions and, in particular, their ability to handle multi-step, complex reasoning tasks. By leveraging analogy, TP builds on the innate reasoning capabilities of LLMs, bringing us a step closer to more capable artificial systems.

Thought Propagation involves two main steps:

- First, the LLM is prompted to propose and solve a set of analogous problems related to the input problem

- Next, the solutions to these analogous problems are used either to directly yield a new solution or to amend the initial solution the LLM obtained from scratch

The process of identifying analogous problems allows the LLM to reuse problem-solving strategies and solutions, thereby improving its reasoning abilities. TP is compatible with existing prompting methods, providing a generalizable solution that can be incorporated into various tasks without significant task-specific engineering.
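The two steps can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the paper's implementation: `llm` is a hypothetical prompt-to-text function, the `PROPOSE`/`SOLVE`/`AGGREGATE` prompt formats and the names `thought_propagation`, `TPResult`, and `fake_llm` are illustrative, and `fake_llm` returns canned text so the code runs without a model.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a completion.
# It could wrap a plain LLM call or an existing prompting method.
LLM = Callable[[str], str]


@dataclass
class TPResult:
    initial: str                      # solution obtained from scratch
    analogous: list[tuple[str, str]]  # (analogous problem, its solution) pairs
    final: str                        # new or amended solution


def thought_propagation(problem: str, llm: LLM, k: int = 3) -> TPResult:
    # Baseline answer, reasoning from scratch.
    initial = llm(f"SOLVE: {problem}")

    # Step 1a: ask for k analogous problems, one per line.
    proposal = llm(f"PROPOSE {k}: {problem}")
    problems = [line.strip() for line in proposal.splitlines() if line.strip()][:k]

    # Step 1b: solve each analogous problem independently.
    analogous = [(p, llm(f"SOLVE: {p}")) for p in problems]

    # Step 2: reuse the analogous solutions to yield a new solution
    # or amend the initial one.
    context = "\n".join(f"Problem: {p}\nSolution: {s}" for p, s in analogous)
    final = llm(f"AGGREGATE:\nTask: {problem}\nInitial solution: {initial}\n{context}")
    return TPResult(initial, analogous, final)


# Deterministic stand-in for a real model (hypothetical), so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    if prompt.startswith("PROPOSE"):
        return "Shortest path from A to B\nShortest path from B to C"
    if prompt.startswith("SOLVE: "):
        return f"answer to '{prompt.removeprefix('SOLVE: ')}'"
    if prompt.startswith("AGGREGATE"):
        used = prompt.count("\nSolution: ")
        return f"amended answer using {used} analogous solutions"
    return ""


result = thought_propagation("Shortest path from A to C", fake_llm, k=2)
print(result.final)  # amended answer using 2 analogous solutions
```

Keeping the model behind a single callable is a design choice that matches the compatibility point above: the same propose, solve, and aggregate calls work whether `llm` sends a plain prompt or wraps another prompting method.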

Figure 1: The Thought Propagation process (Image from paper)

Moreover, the adaptability of TP should not be underestimated. Because it is compatible with existing prompting methods, TP is not tied to any specific problem-solving domain. This leaves room for task-specific optimization and broadens the range of applications in which LLMs can be used effectively.

Thought Propagation can be integrated into existing LLM workflows. For example, in a shortest-path reasoning task, TP could first solve a set of simpler, analogous problems to explore possible paths, then use these insights to solve the full problem, increasing the likelihood of finding the optimal path.

Example 1

- Task: Shortest-path Reasoning

- Analogous Problems: Shortest path between points A and B, shortest path between points B and C

- Final Solution: Optimal path from point A to point C, informed by the solutions to the analogous problems
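To make Example 1 concrete without an LLM, the sketch below swaps in classical algorithms: a greedy walk stands in for an error-prone "from scratch" answer, Dijkstra's algorithm solves the two analogous problems exactly, and an aggregation step stitches their solutions into a candidate A-to-C path and keeps whichever path is shorter. The graph and every function name are hypothetical; this illustrates the reuse-and-amend idea, not how the paper prompts the model.

```python
import heapq
import math

# Hypothetical weighted, undirected graph: node -> {neighbor: edge weight}.
Graph = dict[str, dict[str, float]]


def path_length(graph: Graph, path: list[str]) -> float:
    """Total weight of a path, or infinity if it is empty or uses a missing edge."""
    if not path:
        return math.inf
    total = 0.0
    for u, v in zip(path, path[1:]):
        if v not in graph.get(u, {}):
            return math.inf
        total += graph[u][v]
    return total


def greedy_path(graph: Graph, src: str, dst: str) -> list[str]:
    """Stand-in for a 'from scratch' answer: always take the cheapest unvisited edge."""
    path, seen = [src], {src}
    while path[-1] != dst:
        options = [(w, v) for v, w in graph[path[-1]].items() if v not in seen]
        if not options:
            return []  # dead end, no valid answer
        _, nxt = min(options)
        path.append(nxt)
        seen.add(nxt)
    return path


def dijkstra(graph: Graph, src: str, dst: str) -> list[str]:
    """Exact shortest path, used here to solve each analogous subproblem."""
    dist, prev = {src: 0.0}, {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in graph[u].items():
            if d + w < dist.get(v, math.inf):
                dist[v], prev[v] = d + w, u
                heapq.heappush(heap, (d + w, v))
    if dst not in dist:
        return []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


def aggregate(graph: Graph, initial: list[str], candidates: list[list[str]]) -> list[str]:
    """Amend the initial answer: keep whichever valid path is shortest."""
    return min([initial, *candidates], key=lambda p: path_length(graph, p))


graph: Graph = {
    "A": {"B": 1.0, "D": 0.5},
    "B": {"A": 1.0, "C": 1.0},
    "C": {"B": 1.0, "D": 5.0},
    "D": {"A": 0.5, "C": 5.0},
}
initial = greedy_path(graph, "A", "C")  # ['A', 'D', 'C'], length 5.5
a_to_b = dijkstra(graph, "A", "B")      # analogous problem 1
b_to_c = dijkstra(graph, "B", "C")      # analogous problem 2
candidate = a_to_b + b_to_c[1:]         # stitched path ['A', 'B', 'C']
best = aggregate(graph, initial, [candidate])
print(best, path_length(graph, best))   # ['A', 'B', 'C'] 2.0
```

Stitching the A-to-B and B-to-C solutions only yields the optimal A-to-C path when B lies on it. Keeping the initial answer inside the `min` means an unhelpful analogy cannot make the result worse, which loosely mirrors the option of amending, rather than replacing, the from-scratch solution.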

Example 2

- Task: Creative Writing

- Analogous Problems: Write a short story about friendship, Write a short story about trust

- Final Solution: Write a complex short story that integrates themes of friendship and trust
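For Example 2, the final step amounts to building a prompt that places the analogous stories in front of the model. A minimal sketch, where the template wording and `aggregate_prompt` are hypothetical (not taken from the paper) and the drafts are placeholders for stories an LLM would have written for the analogous tasks:

```python
# Hypothetical template; the wording is illustrative only.
AGGREGATE_TEMPLATE = (
    "Task: {task}\n"
    "Stories written for related tasks:\n"
    "{drafts}\n"
    "Write a new story for the task, reusing what works in these stories."
)


def aggregate_prompt(task: str, drafts: dict[str, str]) -> str:
    """Build the final prompt from {analogous task: draft story} pairs."""
    listing = "\n".join(f"- {t}: {story}" for t, story in drafts.items())
    return AGGREGATE_TEMPLATE.format(task=task, drafts=listing)


drafts = {
    "Write a short story about friendship": "<draft story 1>",
    "Write a short story about trust": "<draft story 2>",
}
prompt = aggregate_prompt(
    "Write a complex short story that integrates themes of friendship and trust",
    drafts,
)
print(prompt)
```

Listing each analogous task next to its draft lets the model see which theme each story explores, which is exactly what the final task asks it to combine.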

In both examples, the analogous problems are solved first, and the insights gained are then used to tackle the more complex task. Across the tasks evaluated, this approach substantially outperformed the baseline methods.

Thought Propagation's implications go beyond improving existing metrics. The technique has the potential to change how we understand and deploy LLMs, marking a shift from isolated, atomic problem-solving toward a more holistic, interconnected approach. It prompts us to consider how LLMs can benefit not just from their training data but from the process of problem-solving itself. By drawing on solutions to analogous problems at inference time, without any change to the model's weights, LLMs equipped with TP may be better prepared to tackle unforeseen challenges, making them more resilient and adaptable in rapidly evolving environments.

Thought Propagation is a promising addition to the toolbox of prompting methods aimed at enhancing the capabilities of LLMs. By allowing LLMs to leverage analogous problems and their solutions, TP provides a more nuanced and effective reasoning method. Experiments confirm its efficacy, making it a candidate strategy for improving the performance of LLMs across a variety of tasks. TP may ultimately represent a significant step forward in the search for more capable AI systems.

Matthew Mayo (@mattmayo13) holds a Master's degree in computer science and a graduate diploma in data mining. As Editor-in-Chief of KDnuggets, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.

- Source: https://www.kdnuggets.com/thought-propagation-an-analogical-approach-to-complex-reasoning-with-large-language-models?utm_source=rss&utm_medium=rss&utm_campaign=thought-propagation-an-analogical-approach-to-complex-reasoning-with-large-language-models
