Someone wants to learn about Arduino programming. Do you suggest they blink an LED first? Or should they go straight for a 3D laser scanner with galvos, a time-of-flight sensor, and multiple networking options? Most of us need to start with the blinking light and move forward from there. So what if you want to learn about the latest wave of GPT (generative pre-trained transformer) programs? Do you start with a language model that looks at thousands of possible tokens in large contexts? Or should you start with something simple? We think you should start simple, and [Andrej Karpathy] agrees. He has a notebook that builds a tiny GPT that can predict the next bit in a sequence. It isn’t any more practical than a blinking LED, but it is a manageable place to start.

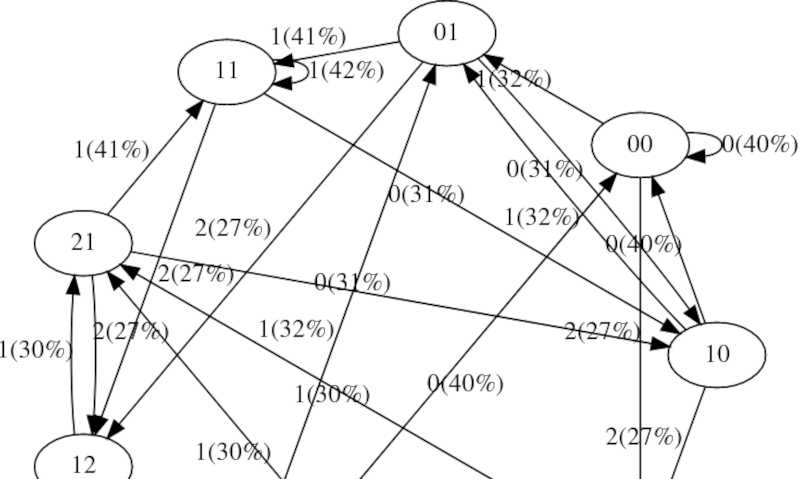

The simple example starts with a vocabulary of two: every token is either a 0 or a 1. It also uses a context size of 3, so the model looks at 3 bits and uses them to predict the 4th. To simplify things further, the examples assume the input is always a fixed-size sequence of tokens, in this case eight tokens. The notebook then builds a little from there.
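To make the setup concrete, here is a small sketch of how a two-token vocabulary and a context of 3 turn a bit string into training examples. It is not code from the notebook: the names `all_contexts` and `make_examples` and the sample sequence are hypothetical, chosen only to show the sliding-window idea. With two tokens and a window of 3, there are only 2³ = 8 distinct inputs the model can ever see.

```python
from itertools import product

VOCAB = [0, 1]       # a vocabulary of two tokens
CONTEXT_LENGTH = 3   # the model sees 3 bits and predicts the next one

def all_contexts(vocab=VOCAB, n=CONTEXT_LENGTH):
    """Every possible input window: 2**3 = 8 of them for this setup."""
    return [tuple(c) for c in product(vocab, repeat=n)]

def make_examples(bits, n=CONTEXT_LENGTH):
    """Slide an n-bit window over the sequence; the bit right after it is the target."""
    return [(tuple(bits[i:i + n]), bits[i + n]) for i in range(len(bits) - n)]

if __name__ == "__main__":
    print(len(all_contexts()))           # 8
    seq = [1, 1, 1, 1, 0, 1, 1, 1]       # hypothetical training sequence
    for ctx, nxt in make_examples(seq):
        print(ctx, "->", nxt)            # first line: (1, 1, 1) -> 1
```

Note that the same context can appear with different targets, for example `(1, 1, 1)` followed by a 1 in one place and by a 0 in another, which is why the model ends up predicting probabilities rather than a single answer.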

The notebook uses PyTorch to create a GPT, but since you don’t need to understand those details, the code is all collapsed. You can, of course, expand it to read the code, but at first you should probably just assume it works and continue with the exercise. You do need to run each code block in order, even the collapsed ones.

The GPT is trained on a small set of data over 50 iterations. There should probably be more training, but this shows how it works, and you can always do more yourself if you are so inclined.
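The notebook trains a real transformer in PyTorch. As a dependency-free illustration of what “50 iterations of training” means, here is a sketch that deliberately swaps in a much simpler model: a lookup table holding one logit per 3-bit context, fit by full-batch gradient descent on binary cross-entropy. This is not the notebook’s GPT; the function name `train`, the learning rate, and the sample sequence are all assumptions made for illustration.

```python
import math
from itertools import product

CONTEXT_LENGTH = 3

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train(bits, iterations=50, lr=1.0):
    """Hypothetical stand-in model: one logit per 3-bit context.

    Returns a dict mapping each context to the learned P(next bit == 1).
    """
    contexts = [tuple(c) for c in product([0, 1], repeat=CONTEXT_LENGTH)]
    logits = {c: 0.0 for c in contexts}  # logit 0.0 means P = 0.5 everywhere
    examples = [(tuple(bits[i:i + CONTEXT_LENGTH]), bits[i + CONTEXT_LENGTH])
                for i in range(len(bits) - CONTEXT_LENGTH)]
    for _ in range(iterations):
        grads = {c: 0.0 for c in contexts}
        for ctx, target in examples:
            # Derivative of binary cross-entropy w.r.t. the logit is (p - target)
            grads[ctx] += sigmoid(logits[ctx]) - target
        for c in contexts:
            # Average over the batch and take one gradient-descent step
            logits[c] -= lr * grads[c] / len(examples)
    return {c: sigmoid(logits[c]) for c in contexts}

if __name__ == "__main__":
    probs = train([1, 1, 1, 1, 0] * 4)  # hypothetical repeating pattern
    for ctx, p in probs.items():
        print(ctx, f"P(next=1) = {p:.3f}")
```

Running more iterations pushes the predictable contexts such as `(1, 1, 0)` closer to certainty, while an ambiguous context like `(1, 1, 1)` (followed equally often by 1 and 0 in this pattern) stays at 0.5 and contexts never seen in training never move from their starting value.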

The real value here is to internalize this example and then do more yourself. Starting from something manageable can help solidify your understanding. If you want to go deeper into this kind of transformer, you might go back to the original paper that started it all, “Attention Is All You Need” (Vaswani et al., 2017).

All this hype over AI GPT-related things is really just… well… hype. But there is something there. We’ve talked about what it might mean. The statistical nature of these things, by the way, is exactly the way other software can figure out if your term paper was written by an AI.

- Source: https://hackaday.com/2023/04/10/the-hello-world-of-gpt/