What is creativity? The most widely accepted definition is the following:

“Creativity is the capability of creating novel things”

It is considered one of the most important and irreplaceable traits of humankind. But if it is such a special characteristic, it should be impossible for a neural network to imitate it, shouldn't it? Well, not exactly. Today we are witnessing some extraordinary milestones in building creative AI with generative models, most notably Generative Adversarial Networks (GANs). Yann LeCun, one of the fathers of deep learning, has described them as the most interesting idea in machine learning in the last ten years [8].

Generative Adversarial Networks

Generative Adversarial Networks (GANs) [3] were introduced by Ian Goodfellow and colleagues in 2014 and were immediately used to tackle spectacular, previously unexplored tasks.

The first and most widely used application is the generation of new images, as I showed in my previous article, “How does an AI Imagine the Universe?” [5], in which a GAN generated images of planets and celestial bodies.

Those images depict objects that do not exist: they were generated entirely by a neural network. The same has been done with animals, people, and objects of every shape and type.


Recently, these networks have proved capable of tasks that are extremely useful, such as increasing the resolution of a photo, or sometimes quite funny, such as getting Steve Ballmer and Robert Downey Jr. to sing Bruno Mars’ Uptown Funk as a duet!

Although at first glance this may seem fascinating and completely harmless, there are serious implications and dangers lurking around the corner. These models are also used every day to deceive people or to ruin their reputations, for example through so-called deepfakes.

With this technology it is now possible to transpose the face of a source actor onto a target actor, or to make a target look, speak, or move exactly like the source actor. A video deepfake can make people believe that a political leader has made a particularly dangerous statement that fuels hatred, place a person in a compromising context he or she has never been in, or serve as bogus evidence in a judicial process.

Like any tool, this technology is neither good nor bad in itself: it all depends on how it is used.

But before delving into the heart of this article, a little explanation of the inner workings of Generative Adversarial Networks is in order.

GANs are composed of two distinct networks, usually both convolutional, that compete against each other: a generator and a discriminator. The generator takes random noise as input and tries to produce credible images in an attempt to deceive the discriminator. The discriminator, on the other side, tries to tell whether a given image is generated or real, that is, taken from the training data. When the generator has become good enough to fool the discriminator, it can be used to create new credible examples beyond those present in the training data.
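To make the adversarial game a bit more concrete, here is a minimal sketch of the two objectives written as binary cross-entropy, using the non-saturating generator loss proposed in the original GAN paper [3]. It assumes the discriminator's output probabilities are already available; the networks themselves and the optimisation steps are omitted.

```python
import math

EPS = 1e-12  # small constant to avoid log(0)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities D(x) assigned to real images (target 1).
    d_fake: probabilities D(G(z)) assigned to generated images (target 0).
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
print(generator_loss([0.5, 0.5]))
```

The two losses pull in opposite directions: the discriminator is rewarded for pushing `d_fake` towards 0, while the generator is rewarded for pushing the same values towards 1.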

Vision Transformers

One last small digression before getting to the heart of the article. For those who have never heard of them, Vision Transformers [4] are a new architecture that is revolutionizing the field of computer vision. They are based on the self-attention mechanism, applied to a sequence of vectors obtained by splitting the input image into patches and linearly projecting each patch into an embedding space. Architectures based on Vision Transformers are today among the most promising approaches in computer vision and are achieving impressive results.
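The patch-splitting and projection step can be sketched in a few lines. This is a minimal illustration, assuming images stored as nested Python lists indexed `[row][column][channel]` and a projection matrix passed in by the caller; in a real Vision Transformer that matrix is learned and a position embedding is added to each vector, both of which are left out here.

```python
def image_to_patches(image, patch_size):
    """Split an H x W x C image into non-overlapping, flattened patches.

    Patches are returned in row-major order; each one is a flat list of
    patch_size * patch_size * C values.
    """
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [value
                     for row in image[top:top + patch_size]
                     for pixel in row[left:left + patch_size]
                     for value in pixel]
            patches.append(patch)
    return patches


def linear_projection(patches, weights):
    """Project each flattened patch with a (patch_dim x embed_dim) matrix."""
    embed_dim = len(weights[0])
    return [[sum(p * w_row[j] for p, w_row in zip(patch, weights))
             for j in range(embed_dim)]
            for patch in patches]


# A 4x4 single-channel image split into four 2x2 patches.
image = [[[float(r * 4 + c)] for c in range(4)] for r in range(4)]
tokens = linear_projection(image_to_patches(image, 2), [[1.0]] * 4)
print(tokens)
```

Each resulting vector is one "token" for the Transformer, exactly as a word embedding would be in a language model.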

For an in-depth explanation of Vision Transformers, I suggest you visit my previous article.

But if Vision Transformers are so great, wouldn’t it be worth using them to make GANs?

TransGAN: Two pure Transformers can make one strong GAN, and that can scale up!

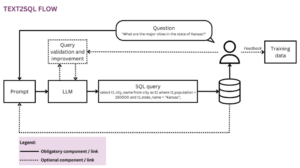

This study sets out to answer one question: is it possible to create a GAN without using convolutional networks? GANs normally rely on convolutional networks for both the generator and the discriminator. In this architecture, instead, only Transformers are used. This is fairly simple for the discriminator, since it is an ordinary classifier that has to say whether the image is fake or not, but what about the generator? Here the situation is more complicated: self-attention compares every token with every other token, so its cost grows quadratically with the number of tokens, and directly generating an image at the desired resolution, treating each pixel as a token, would quickly become too expensive.
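A quick back-of-the-envelope calculation shows why resolution matters so much. The sketch below simply counts one attention score per query-key pair when every pixel is a token, ignoring constant factors such as the embedding dimension and the number of heads.

```python
def attention_pairs(height, width):
    """Query-key pairs scored by full self-attention with one token per pixel."""
    tokens = height * width
    return tokens * tokens


for side in (8, 16, 32):
    print(f"{side}x{side}: {attention_pairs(side, side):,} pairs")
```

Going from 8×8 to 32×32 multiplies the number of pixels by 16, but the number of attention pairs by 256.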

The generator is therefore built from a series of stages, each made up of a group of Transformer Encoders. Each stage works at a higher resolution than the previous one but with fewer channels, so as to keep the complexity under control. In other words, the generator starts by working on a small image with many channels and progressively moves on to larger images with fewer channels.

If, for example, we want to generate a 32×32 image with 3 channels, the generator first turns a random noise vector into an 8×8 feature map, as in a classic GAN. This feature map is processed by a series of Transformer Encoders and then upscaled to 16×16, while its number of channels is reduced. The process is repeated until the target resolution of 32×32 is reached. Finally, the vectors produced by the last set of encoders are mapped back to image space to compose the output image.
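The shape bookkeeping of this progressive generator can be sketched as follows. It assumes that each upscaling step doubles the resolution and divides the channels by four (which is what a 2× pixel-shuffle upsampler does); the starting width of 1024 channels is a hypothetical value chosen for illustration, not the configuration used in the paper, and the final projection to 3 RGB channels is not shown.

```python
def generator_schedule(start_res, start_channels, target_res, upscale=2):
    """Return (height, width, channels) for each generator stage.

    Assumes every upscaling step multiplies the resolution by `upscale`
    and divides the channel count by upscale ** 2.
    """
    res, channels = start_res, start_channels
    stages = [(res, res, channels)]
    while res < target_res:
        res *= upscale
        channels //= upscale ** 2
        stages.append((res, res, channels))
    return stages


for h, w, c in generator_schedule(8, 1024, 32):  # 1024 is a hypothetical width
    print(f"{h}x{w} feature map: {h * w} tokens of dimension {c}")
```

Under this assumption, the total number of values (tokens × channels) stays constant across stages: the sequence gets longer while each token gets thinner.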

With TransGAN [1] it is therefore possible to generate images in a computationally sustainable way and without convolutional networks, but one small detail still needs to be investigated: how is the upscaling performed?

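The strategy adopted to increase the resolution while reducing the number of channels is shown in the figure and is known as pixel shuffle. The “column” of channels of each pixel is split into groups, which are rearranged into a block of neighbouring pixels (a 2×2 block when doubling the resolution), each with a fraction of the original channels. Combining all these blocks yields a single higher-resolution image.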
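TransGAN performs this upscaling with a pixel shuffle operation. Here is a minimal pure-Python sketch on nested lists indexed `[row][column][channel]`; the channel ordering mirrors the one used by `torch.nn.PixelShuffle`, but with channels last, and it is only meant to show the rearrangement, not to be efficient.

```python
def pixel_shuffle(feature_map, r=2):
    """Rearrange an H x W x (C * r * r) map into an (H * r) x (W * r) x C map.

    Output channel c at sub-pixel offset (i, j) reads input channel
    c * r * r + i * r + j, as in torch.nn.PixelShuffle.
    """
    h, w = len(feature_map), len(feature_map[0])
    c_in = len(feature_map[0][0])
    if c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r * r")
    c_out = c_in // (r * r)
    out = [[None] * (w * r) for _ in range(h * r)]
    for y in range(h):
        for x in range(w):
            column = feature_map[y][x]  # all channels of one low-res pixel
            for i in range(r):
                for j in range(r):
                    # Each pixel of the r x r block receives c_out channels.
                    out[y * r + i][x * r + j] = [
                        column[c * r * r + i * r + j] for c in range(c_out)
                    ]
    return out


# One pixel with 4 channels becomes a 2x2 block of single-channel pixels.
print(pixel_shuffle([[[0, 1, 2, 3]]]))
```

No value is created or discarded: the operation only moves channel information into the spatial dimensions, which is why it is a cheap way to trade channels for resolution.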

To further improve performance and achieve even higher-resolution outputs, the last Transformer Encoders were replaced by Grid Transformer Blocks.

In what is called grid self-attention, instead of computing the interactions between a given token and all other tokens, the full-size feature map is partitioned into many non-overlapping grids, and token interactions are computed only within each local grid.
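The idea can be illustrated by applying plain scaled dot-product self-attention independently inside each non-overlapping window. This sketch assumes a single head with identity query, key, and value projections, so it shows only the partitioning principle, not TransGAN's actual Grid Transformer Block.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned weights)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens])
        out.append([sum(wt * v[j] for wt, v in zip(weights, tokens)) for j in range(d)])
    return out


def grid_self_attention(feature_map, grid):
    """Run self-attention separately inside each grid x grid window of an H x W map."""
    h, w = len(feature_map), len(feature_map[0])
    out = [[None] * w for _ in range(h)]
    for top in range(0, h, grid):
        for left in range(0, w, grid):
            coords = [(y, x)
                      for y in range(top, min(top + grid, h))
                      for x in range(left, min(left + grid, w))]
            attended = self_attention([feature_map[y][x] for y, x in coords])
            for (y, x), vec in zip(coords, attended):
                out[y][x] = vec
    return out


fm = [[[float(y * 4 + x)] for x in range(4)] for y in range(4)]
print(grid_self_attention(fm, grid=2)[0][0])
```

Tokens in different windows never see each other, so the cost per window stays fixed as the image grows. For a 32×32 map split into hypothetical 16×16 grids, for example, the number of query-key pairs drops from 1,048,576 to 4 × 256² = 262,144.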

TransGAN set a new state of the art on STL-10 and achieved results comparable to those of convolution-based architectures on other datasets such as CelebA and CIFAR-10.

The results are excellent in many contexts, stimulating interest in new GAN variants based on Vision Transformers!

Paint Transformer

Another interesting and creative work based on Vision Transformers is the Paint Transformer [2]. This model is capable of turning a normal picture into a painting!

The task is framed as predicting a series of strokes that recreate a given image in a non-photorealistic style.

The process is progressive: at each step the model predicts multiple strokes in parallel to minimise the difference between the current canvas and the target image. Two modules are used to achieve this: the Stroke Predictor and the Stroke Renderer.

The Stroke Renderer is responsible for taking a series of strokes as input and drawing them on a canvas. The Stroke Predictor is the heart of the architecture and has the task of learning to predict the strokes needed to reproduce the image.
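To get a feel for what a stroke renderer does, here is a deliberately simplified sketch. It assumes each stroke is a coloured, rotated rectangle described by eight numbers `(x, y, h, w, theta, r, g, b)`: a normalised centre, normalised height and width, a rotation in radians, and an RGB colour. The renderer in the paper is more sophisticated; this toy version is not differentiable and only fills solid rectangles.

```python
import math


def render_strokes(canvas, strokes):
    """Paint strokes, in order, onto an H x W canvas of (r, g, b) tuples.

    Each stroke is (x, y, h, w, theta, r, g, b); later strokes cover earlier ones.
    """
    height, width = len(canvas), len(canvas[0])
    out = [list(row) for row in canvas]
    for x, y, h, w, theta, r, g, b in strokes:
        cx, cy = x * width, y * height
        half_w, half_h = w * width / 2, h * height / 2
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        for py in range(height):
            for px in range(width):
                # Express the pixel centre in the stroke's rotated frame.
                dx, dy = px + 0.5 - cx, py + 0.5 - cy
                u = dx * cos_t + dy * sin_t
                v = -dx * sin_t + dy * cos_t
                if abs(u) <= half_w and abs(v) <= half_h:
                    out[py][px] = (r, g, b)
    return out


blank = [[(0, 0, 0)] * 8 for _ in range(8)]
red_square = (0.5, 0.5, 0.5, 0.5, 0.0, 1, 0, 0)
painted = render_strokes(blank, [red_square])
print(sum(pixel == (1, 0, 0) for row in painted for pixel in row))
```

Because strokes are drawn in order, the predictor only has to output parameters; the renderer takes care of turning them into pixels.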

The entire training process is self-supervised, an innovative and more sustainable training approach [7]. First, some random background strokes are generated and drawn by a Stroke Renderer on an empty canvas, producing the intermediate canvas. Then, random foreground strokes are generated and drawn on the intermediate canvas by another Stroke Renderer, producing the target canvas. The predictor receives both the intermediate canvas and the target canvas and tries to predict the foreground strokes that turn the former into the latter. The predicted strokes are then drawn on the intermediate canvas, and the loss to be minimised is computed by comparing the predicted strokes with the sampled foreground strokes and the resulting canvas with the target canvas.

The Stroke Predictor contains two CNNs that extract the initial features from the two input canvases. These features pass first through a Transformer Encoder and then through a Transformer Decoder, which outputs the predicted strokes.
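The self-supervised data generation described above can be sketched as follows. To keep it short, this assumes grayscale canvases and a toy renderer that fills axis-aligned boxes; it also shows only a pixel-level L1 loss, whereas the loss described above additionally compares the stroke parameters themselves. The function names are hypothetical.

```python
import random


def paint(canvas, strokes):
    """Toy renderer: each stroke (x0, y0, x1, y1, value) fills an axis-aligned box."""
    out = [row[:] for row in canvas]
    for x0, y0, x1, y1, value in strokes:
        for y in range(y0, y1):
            for x in range(x0, x1):
                out[y][x] = value
    return out


def random_strokes(n, size, rng):
    """Sample n random boxes with random gray levels inside a size x size canvas."""
    strokes = []
    for _ in range(n):
        x0, y0 = rng.randrange(size - 1), rng.randrange(size - 1)
        x1, y1 = rng.randrange(x0 + 1, size + 1), rng.randrange(y0 + 1, size + 1)
        strokes.append((x0, y0, x1, y1, rng.random()))
    return strokes


def make_training_pair(size, n_background, n_foreground, rng):
    """Build (intermediate canvas, target canvas, ground-truth foreground strokes)."""
    empty = [[0.0] * size for _ in range(size)]
    intermediate = paint(empty, random_strokes(n_background, size, rng))
    foreground = random_strokes(n_foreground, size, rng)
    target = paint(intermediate, foreground)
    return intermediate, target, foreground


def pixel_loss(canvas_a, canvas_b):
    """Mean absolute (L1) difference between two canvases."""
    diffs = [abs(a - b)
             for row_a, row_b in zip(canvas_a, canvas_b)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


rng = random.Random(0)
intermediate, target, foreground = make_training_pair(16, 5, 3, rng)
# A perfect predictor would output exactly the sampled foreground strokes.
print(pixel_loss(paint(intermediate, foreground), target))
```

No human labels are needed: since the foreground strokes are sampled by the training procedure itself, the correct answer is always known.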

Thanks to this architecture, it is now possible to turn any photo into a painting with varied and exceptional results!

Conclusions

Some said that AI would be able to do everything except creative work. Today this statement no longer holds. Generative models are blurring the line between what is real and what is not, producing fake images and videos that are hard to distinguish from genuine ones; they animate photos, give them colours, pose as painters, and power many other intriguing applications. Modern deep learning techniques are showing the wonders that can be achieved with AI, and we have only scratched the surface of this fascinating field.

References and Insights

[1] Yifan Jiang et al., “TransGAN: Two Pure Transformers Can Make One Strong GAN, and That Can Scale Up”

[2] Songhua Liu et al., “Paint Transformer: Feed Forward Neural Painting with Stroke Prediction”

[3] Ian J. Goodfellow et al., “Generative Adversarial Networks”

[4] Alexey Dosovitskiy et al., “An Image is Worth 16×16 Words: Transformers for Image Recognition at Scale”

[5] Davide Coccomini, “How does an AI Imagine the Universe?”

[6] Davide Coccomini, “On Transformers, Timesformers and Attention”

[7] Davide Coccomini, “Self-Supervised Learning in Vision Transformers”

[8] Kyle Wiggers, “Generative adversarial networks: What GANs are and how they’ve evolved”

[9] Davide Coccomini et al., “Combining EfficientNet and Vision Transformers for Video Deepfake Detection”

This article was originally published on Towards Data Science and re-published to TOPBOTS with permission from the author.


Source: https://www.topbots.com/the-creative-side-of-vision-transformers/
