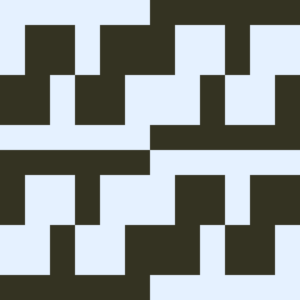

Pipeline parallelism splits a model “vertically” by layer. It’s also possible to “horizontally” split certain operations within a layer, which is usually called Tensor Parallel training. For many modern models (such as the Transformer), the computation bottleneck is multiplying an activation batch matrix with a large weight matrix. Matrix multiplication can be thought of as dot products between pairs of rows and columns; it’s possible to compute independent dot products on different GPUs, or to compute parts of each dot product on different GPUs and sum up the results. With either strategy, we can slice the weight matrix into even-sized “shards”, host each shard on a different GPU, and use that shard to compute the relevant part of the overall matrix product before later communicating to combine the results.
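The two sharding strategies described above can be illustrated with a small, single-process sketch. It is not how any particular framework implements tensor parallelism: the helper names (`matmul`, `column_parallel`, `row_parallel`) are hypothetical, the "GPUs" are just loop iterations, and the shard count is assumed to divide the matrix dimension evenly.

```python
def matmul(a, b):
    """Plain matrix product of two list-of-lists matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def column_parallel(x, w, num_shards):
    """Each shard holds a slice of W's columns: independent dot products per shard."""
    step = len(w[0]) // num_shards  # assumes the column count divides evenly
    partials = []
    for s in range(num_shards):
        w_shard = [row[s * step:(s + 1) * step] for row in w]
        partials.append(matmul(x, w_shard))  # would run on device s
    # Combine by concatenating output columns (an all-gather on real hardware).
    return [sum((p[i] for p in partials), []) for i in range(len(x))]


def row_parallel(x, w, num_shards):
    """Each shard holds a slice of W's rows: part of every dot product per shard."""
    step = len(w) // num_shards  # assumes the row count divides evenly
    partials = []
    for s in range(num_shards):
        x_shard = [row[s * step:(s + 1) * step] for row in x]
        w_shard = w[s * step:(s + 1) * step]
        partials.append(matmul(x_shard, w_shard))  # would run on device s
    # Combine by summing partial products elementwise (an all-reduce on real hardware).
    return [[sum(p[i][j] for p in partials) for j in range(len(w[0]))]
            for i in range(len(x))]


x = [[1, 2, 3, 4], [5, 6, 7, 8]]                               # activation batch, 2x4
w = [[1, 0, 2, 1], [0, 1, 1, 2], [3, 1, 0, 0], [1, 2, 1, 1]]   # weight matrix, 4x4
reference = matmul(x, w)
assert column_parallel(x, w, 2) == reference
assert row_parallel(x, w, 2) == reference
```

The two variants differ only in the communication step. Column sharding needs a concatenation of outputs, while row sharding needs a sum of partial results.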

One example is Megatron-LM, which parallelizes matrix multiplications within the Transformer’s self-attention and MLP layers. PTD-P uses tensor, data, and pipeline parallelism; its pipeline schedule assigns multiple non-consecutive layers to each device, reducing bubble overhead at the cost of more network communication.
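To make "multiple non-consecutive layers per device" concrete, here is a minimal sketch of one way such an assignment could be generated. It assumes layers are grouped into equal chunks that are dealt out to devices round-robin; the function name `interleaved_assignment` and its parameters are hypothetical and are not taken from the Megatron-LM codebase.

```python
def interleaved_assignment(num_layers, num_devices, chunks_per_device):
    """Map each device to the layers it hosts, dealing chunks out round-robin."""
    num_chunks = num_devices * chunks_per_device
    if num_layers % num_chunks != 0:
        raise ValueError("num_layers must be divisible by num_devices * chunks_per_device")
    layers_per_chunk = num_layers // num_chunks
    assignment = {device: [] for device in range(num_devices)}
    for layer in range(num_layers):
        chunk = layer // layers_per_chunk          # which chunk this layer belongs to
        assignment[chunk % num_devices].append(layer)
    return assignment


# 16 layers on 4 devices, 2 chunks per device.
plan = interleaved_assignment(16, 4, 2)
for device, layers in plan.items():
    print(device, layers)
# 0 [0, 1, 8, 9]
# 1 [2, 3, 10, 11]
# 2 [4, 5, 12, 13]
# 3 [6, 7, 14, 15]
```

With `chunks_per_device=1` the same function yields the ordinary contiguous split. Raising the chunk count gives each device more pieces of the model, and activations then cross device boundaries more often, which is the extra network communication mentioned above.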

Sometimes the input to the network can be parallelized across a dimension with a high degree of parallel computation relative to cross-communication. Sequence parallelism is one such idea: an input sequence is split along the time dimension into multiple sub-examples, and peak memory consumption falls proportionally because the computation proceeds on smaller examples.
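The memory effect can be sketched with a toy example. It assumes a position-wise layer, meaning each time step is processed independently; layers that mix information across time steps, such as attention, would need communication between chunks. The names `feed_forward`, `run_full`, and `run_split` are hypothetical, and the chunks are processed one after another here rather than on separate devices.

```python
def feed_forward(tokens, hidden_mult=4):
    """Toy position-wise layer: expand each token, then reduce it back.

    Returns the outputs and the number of intermediate activations held at once.
    """
    expanded = [[t * k for k in range(1, hidden_mult + 1)] for t in tokens]
    outputs = [sum(row) for row in expanded]
    return outputs, len(expanded) * hidden_mult


def run_full(tokens):
    """Process the whole sequence as a single example."""
    return feed_forward(tokens)


def run_split(tokens, num_chunks):
    """Process the sequence as num_chunks sub-examples along the time dimension."""
    step = len(tokens) // num_chunks  # assumes the length divides evenly
    outputs, peak = [], 0
    for c in range(num_chunks):
        out, live = feed_forward(tokens[c * step:(c + 1) * step])
        outputs.extend(out)
        peak = max(peak, live)  # only one chunk's activations are live at a time
    return outputs, peak


tokens = list(range(8))
full_out, full_peak = run_full(tokens)
split_out, split_peak = run_split(tokens, 4)
assert split_out == full_out
print(full_peak, split_peak)  # 32 8
```

Splitting into four sub-examples leaves the output unchanged and cuts the peak count of live intermediate activations by a factor of four, matching the proportional decrease described above.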

- Source: https://openai.com/research/techniques-for-training-large-neural-networks
