OpenAI, Google, Microsoft, and Anthropic, four of the biggest AI firms, have founded a new industry association to ensure the “safe and responsible development” of so-called “frontier AI” models.

Developers of widely used AI technologies, including OpenAI, Microsoft, Google, and Anthropic, have formed the Frontier Model Forum in response to mounting demands for government regulation. To provide technical assessments and benchmarks, as well as to promote best practices and standards, this alliance draws on the expertise of its member companies.

Although it has only four members at the moment, the Frontier Model Forum welcomes new participants. To qualify, a company must work on frontier AI models and demonstrate a “strong commitment to frontier model safety.”

What does the Frontier Model Forum offer?

At the core of the Forum’s mission is frontier AI: cutting-edge AI and machine learning models considered dangerous enough to pose “serious risks to public safety.” According to Google, these are the core objectives of the Frontier Model Forum:

- Advancing AI safety research to encourage the careful development of cutting-edge models, reduce potential dangers, and pave the way for independent, standardized assessments of capabilities and safety.

- Identifying best practices for the responsible development and deployment of frontier models, and helping the public understand the nature, capabilities, limits, and effects of the technology.

- Working together with policymakers, academics, civil society, and businesses to share knowledge about trust and safety risks.

- Supporting efforts to develop applications that can help address some of society’s most pressing problems, such as climate change, disease diagnosis and prevention, and cybersecurity.

The Forum’s major objectives will be to advance research into AI safety, improve communication between companies and governments, and determine best practices for developing and deploying AI models. The AI safety research will entail testing and comparing different AI models and releasing the benchmarks in a public library so that every major player in the industry can understand the risks and take precautions.

The Frontier Model Forum will form an advisory board with members from a wide range of disciplines and perspectives to help set its strategy and priorities. In addition, the founding companies will establish institutional frameworks that facilitate consensus-building among all relevant parties on issues related to AI development.

The Forum will also use the data and resources that academics, NGOs, and other interested parties have contributed. It will follow and support the standards set by preexisting AI projects like the Partnership on AI and MLCommons to ensure their successful implementation.

In the coming months, the Frontier Model Forum will form this Advisory Board, composed of individuals with a wide range of backgrounds and viewpoints, to help steer the organization’s strategy and priorities.

The founding firms will also establish key institutional arrangements, such as a charter, governance, and funding, with a working group and executive board leading these efforts. In the coming weeks, the Forum will consult with civil society and governments on its structure and on effective methods of collaboration.

The Frontier Model Forum is eager to lend its expertise to existing national and international initiatives such as the G7 Hiroshima process, the OECD’s work on artificial intelligence risks, standards, and social impact, and the US-EU Trade and Technology Council.

Each of the Forum’s initiatives, or “workstreams,” will aim to build on the progress already made by industry, the nonprofit sector, and academia. The Forum will explore ways to engage with and support existing multi-stakeholder efforts like the Partnership on AI and MLCommons, both of which are making significant contributions throughout the AI community.


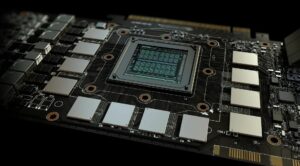

Featured image credit: Unsplash

- Source: https://dataconomy.com/2023/07/27/openai-microsoft-google-and-anthropic-team-up-for-frontier-ai/
