Introduction

The amalgamation of artificial intelligence (AI) and artistry unveils new avenues in creative digital art, prominently through diffusion models. These models stand out in the creative AI art generation, offering a distinct approach from conventional neural networks. This article takes you on an explorative journey into the depths of diffusion models, elucidating their unique mechanism in crafting visually stunning and creatively rich artworks. Understand the nuances of diffusion models and gain insight into their role in redefining artistic expression through the lens of advanced AI technologies.

Learning Objectives

- Understand the fundamental concepts of diffusion models in AI.

- Explore the distinction between diffusion models and traditional neural networks in art generation.

- Analyze the process of creating art using diffusion models.

- Evaluate the creative and aesthetic implications of AI in digital art.

- Discuss the ethical considerations in AI-generated artwork.

This article was published as a part of the Data Science Blogathon.

Table of contents

Understanding Diffusion Models

Diffusion models revolutionize generative AI, presenting a unique image creation method distinct from conventional techniques like Generative Adversarial Networks (GANs). Starting with random noise, these models progressively refine it, resembling an artist fine-tuning a painting, resulting in intricate and coherent images.

This incremental refinement process mirrors the methodical nature of diffusion. Here each iteration subtly alters the noise, edging it closer to the final artistic vision. The output is not merely a product of randomness but an evolved piece of art, distinct in its progression and finish.

Coding for diffusion models demands a profound grasp of neural networks and machine learning frameworks such as TensorFlow or PyTorch. The resulting code is intricate, requiring extensive training on expansive datasets to achieve the nuanced effects observed in AI-generated art.

Application of Stable Diffusion in Art

The advent of AI art generators like stable diffusion models requires sophisticated coding within platforms such as TensorFlow or PyTorch. These models stand out for their ability to methodically transform randomness into structure, much like an artist who hones a preliminary sketch into a vivid masterpiece.

Stable diffusion models reshape the AI art scene by sculpting orderly images from randomness, eschewing the competitive dynamics characteristic of GANs. They excel in interpreting conceptual prompts into visual art, fostering a synergistic dance between AI capabilities and human ingenuity. By harnessing PyTorch, we observe how these models iteratively refine chaos into clarity, mirroring the artist’s journey from a nascent idea to a polished creation.

Experimenting with AI-Generated Art

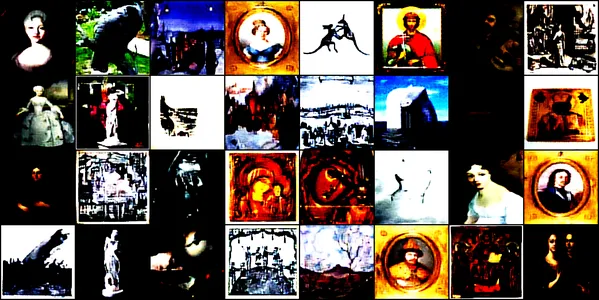

This demonstration delves into the fascinating world of AI-generated art using a convolutional neural network called the ConvDiffusionModel. This model is trained on diverse art images, encompassing drawings, paintings, sculptures, and engravings, as sourced from this Kaggle dataset. Our goal is to explore the model’s capability to capture and reproduce the complex aesthetics of these artworks.

Model Architecture and Training

Architectural Design

The ConvDiffusionModel, at its core, is a marvel of neural engineering, featuring a sophisticated encoder-decoder architecture tailored to the demands of art generation. The model’s structure is a complex neural network, integrating refined encoder-decoder mechanisms specifically honed for art generation. With additional convolutional layers and skip connections that emulate artistic intuition, the model can dissect and reassemble art with an astute understanding of composition and style.

- Encoder: The encoder is the model’s analytical eye, scrutinizing every input image’s minute details. As images pass through the encoder’s convolutional layers, they are progressively compressed into a latent space—a compact, encoded representation of the original artwork. Our encoder not only scrutinizes input images but now does so with an augmented depth of perception, courtesy of additional layers and batch normalization techniques. This extended examination allows for a richer, condensed representation within the latent space, mirroring an artist’s deep contemplation of a subject.

- Decoder: In contrast, the decoder serves as the model’s creative hand, taking the abstract sketches from the encoder and breathing life into them. It reconstructs the artwork from the latent space, layer by layer, detail by detail, until a complete image emerges. Our decoder benefits from skip connections and can reconstruct artwork with greater precision. It revisits the abstracted essence of the input and progressively embellishes it, achieving a rendition that’s more faithful to the source material. The enhanced layers work in concert to ensure that the final image is a vivid, intricate piece reflective of the input’s artistry.

Training Process

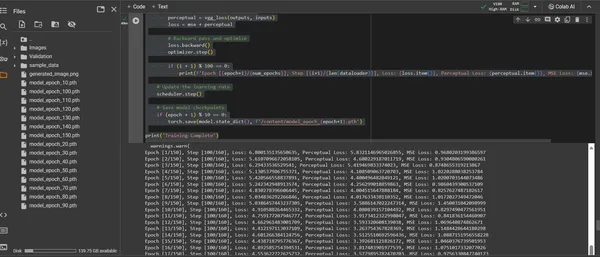

The training of the ConvDiffusionModel is a journey through an artistic landscape spanning 150 epochs. Each epoch represents a complete pass through the entire dataset, with the model striving to refine its understanding and improve the fidelity of its generated images.

- Hybrid Loss Function: At the heart of the training lies the mean squared error (MSE) loss function. This function quantifies the difference between the original masterpiece and the model’s recreation, providing a clear metric to minimize. We will introduce a perceptual loss component derived from a pre-trained VGG network that complements the mean squared error (MSE) metric. This dual-loss strategy propels the model to honor the artistic integrity of the originals while perfecting the technical reproduction of their details.

- Optimizer: With its learning rate dynamically adjusted by a scheduler, the Adam optimizer guides the model’s learning with increased sagacity. This adaptive approach ensures that the model’s progress in learning to replicate and innovate art is both steady and robust.

- Iteration and Refinement: The training iterations are a dance between preserving artistic essence and pursuing technical replication. With every cycle, the model edges closer to a synthesis of fidelity and creativity.

- Visualization of Progress: Images are saved at regular intervals during training to visualize the model’s progress. These snapshots offer a window into the model’s learning curve, showcasing how its generated art evolves, becoming clearer, more detailed, and more artistically coherent with each epoch.

The above is demonstrated via the following piece of code:

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import datasets, transforms

from torch.utils.data import DataLoader

from torchvision.utils import save_image

from torchvision.models import vgg16

from PIL import Image

# Defining a function to check for valid images

def is_valid_image(image_path):

try:

with Image.open(image_path) as img:

img.verify()

return True

except (IOError, SyntaxError) as e:

# Printing out the names of all corrupt files

print(f'Bad file:', image_path)

return False

# Defining the neural network

class ConvDiffusionModel(nn.Module):

def __init__(self):

super(ConvDiffusionModel, self).__init__()

# Encoder

self.enc1 = nn.Sequential(nn.Conv2d(3, 64, kernel_size=3,

stride=1, padding=1),

nn.ReLU(),

nn.BatchNorm2d(64),

nn.MaxPool2d(kernel_size=2,

stride=2))

self.enc2 = nn.Sequential(nn.Conv2d(64, 128,

kernel_size=3, padding=1),

nn.ReLU(),

nn.BatchNorm2d(128),

nn.MaxPool2d(kernel_size=2,

stride=2))

self.enc3 = nn.Sequential(nn.Conv2d(128, 256, kernel_size=3,

padding=1),

nn.ReLU(),

nn.BatchNorm2d(256),

nn.MaxPool2d(kernel_size=2,

stride=2))

# Decoder

self.dec1 = nn.Sequential(nn.ConvTranspose2d(256, 128,

kernel_size=3, stride=2, padding=1, output_padding=1),

nn.ReLU(),

nn.BatchNorm2d(128))

self.dec2 = nn.Sequential(nn.ConvTranspose2d(128, 64,

kernel_size=3, stride=2, padding=1, output_padding=1),

nn.ReLU(),

nn.BatchNorm2d(64))

self.dec3 = nn.Sequential(nn.ConvTranspose2d(64, 3,

kernel_size=3, stride=2, padding=1, output_padding=1),

nn.Sigmoid())

def forward(self, x):

# Encoder

enc1 = self.enc1(x)

enc2 = self.enc2(enc1)

enc3 = self.enc3(enc2)

# Decoder with skip connections

dec1 = self.dec1(enc3) + enc2

dec2 = self.dec2(dec1) + enc1

dec3 = self.dec3(dec2)

return dec3

# Using a pre-trained VGG16 model to compute perceptual loss

class VGGLoss(nn.Module):

def __init__(self):

super(VGGLoss, self).__init__()

self.vgg = vgg16(pretrained=True).features[:16].cuda()

.eval() # Only the first 16 layers

for param in self.vgg.parameters():

param.requires_grad = False

def forward(self, input, target):

input_vgg = self.vgg(input)

target_vgg = self.vgg(target)

loss = torch.nn.functional.mse_loss(input_vgg,

target_vgg)

return loss

# Checking if CUDA is available and set device to GPU if it is.

device = torch.device("cuda" if torch.cuda.is_available()

else "cpu")

# Initializing the model and perceptual loss

model = ConvDiffusionModel().to(device)

vgg_loss = VGGLoss().to(device)

mse_loss = nn.MSELoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

scheduler = optim.lr_scheduler.StepLR(optimizer, step_size=30,

gamma=0.1)

# Dataset and DataLoader setup

transform = transforms.Compose([

transforms.Resize((128, 128)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]),

])

dataset = datasets.ImageFolder(root='/content/Images',

transform=transform, is_valid_file=is_valid_image)

dataloader = DataLoader(dataset, batch_size=32,

shuffle=True)

# Training loop

num_epochs = 150

for epoch in range(num_epochs):

for i, (inputs, _) in enumerate(dataloader):

inputs = inputs.to(device)

# Zero the parameter gradients

optimizer.zero_grad()

# Forward pass

outputs = model(inputs)

# Calculate losses

mse = mse_loss(outputs, inputs)

perceptual = vgg_loss(outputs, inputs)

loss = mse + perceptual

# Backward pass and optimize

loss.backward()

optimizer.step()

if (i + 1) % 100 == 0:

print(f'Epoch [{epoch+1}/{num_epochs}],

Step [{i+1}/{len(dataloader)}], Loss: {loss.item()},

Perceptual Loss: {perceptual.item()}, MSE Loss:

{mse.item()}')

# Saving the generated image for visualization

save_image(outputs, f'output_epoch_{epoch+1}

_step_{i+1}.png')

# Updating the learning rate

scheduler.step()

# Saving model checkpoints

if (epoch + 1) % 10 == 0:

torch.save(model.state_dict(),

f'/content/model_epoch_{epoch+1}.pth')

print('Training Complete')

Visualizing the Generated Artwork

Manifesting AI-Crafted Artistry

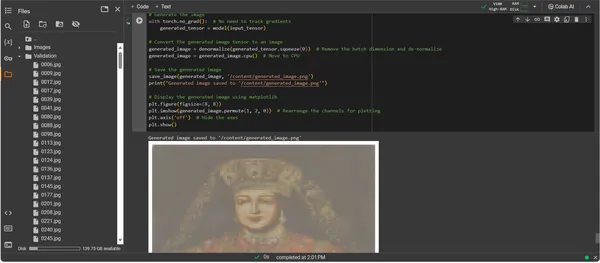

With the ConvDiffusionModel now fully trained, the focus shifts from the abstract to the concrete—from the potential to actualising AI-crafted art. The subsequent code snippet materializes the model’s learned artistic capabilities, transforming input data into a digital canvas of expression.

import os

import matplotlib.pyplot as plt

# Loading the trained model

model = ConvDiffusionModel().to(device)

model.load_state_dict(torch.load('/content/model_epoch_150.pth'))

model.eval() # Set the model to evaluation mode

# Transforming for the input image

transform = transforms.Compose([

transforms.Resize((128, 128)),

transforms.ToTensor(),

transforms.Normalize(mean=[0.485, 0.456, 0.406],

std=[0.229, 0.224, 0.225]),

])

# Function to de-normalize the image for viewing

def denormalize(tensor):

mean = torch.tensor([0.485, 0.456, 0.406]).

to(device).view(-1, 1, 1)

std = torch.tensor([0.229, 0.224, 0.225]).

to(device).view(-1, 1, 1)

tensor = tensor * std + mean # De-normalize

tensor = tensor.clamp(0, 1) # Clamp to the valid image range

return tensor

# Loading and transforming the image

input_image_path = '/content/Validation/0006.jpg'

input_image = Image.open(input_image_path).convert('RGB')

input_tensor = transform(input_image).unsqueeze(0).to(device)

# Adding a batch dimension

# Generating the image

with torch.no_grad():

generated_tensor = model(input_tensor)

# Converting the generated image tensor to an image

generated_image = denormalize(generated_tensor.squeeze(0))

# Removing the batch dimension and de-normalizing

generated_image = generated_image.cpu() # Move to CPU

# Saving the generated image

save_image(generated_image, '/content/generated_image.png')

print("Generated image saved to '/content/generated_image.png'")

# Displaying the generated image using matplotlib

plt.figure(figsize=(8, 8))

plt.imshow(generated_image.permute(1, 2, 0))

# Rearrange the channels for plotting

plt.axis('off') # Hide the axes

plt.show()

Artwork Generation Code Walkthrough

- Model Resurrection: The first step in the artwork generation is to revive our trained ConvDiffusionModel. The model’s learned weights are loaded and brought into evaluation mode, setting the stage for creation without further altering its parameters.

- Image Transformation: To ensure consistency with the training regime, input images are processed through the same sequence of transformations. This includes resizing to match the model’s input dimensions, tensor conversion for PyTorch compatibility, and normalization based on the training data’s statistical profile.

- Denormalization Utility: A custom function reverses the preprocessing effects, re-scaling the tensor to the original image’s colour range. This step is essential for rendering the generated output into a visually accurate representation.

- Input Prepping: An image is loaded and subjected to the aforementioned transformations. It’s crucial to note that this image serves as the muse from which the AI will draw inspiration—the silent whisper ignites the model’s synthetic imagination.

- Artwork Synthesis: In a delicate dance of forward propagation, the model interprets the input tensor, allowing its layers to collaborate in producing a new artistic vision. Perform this process without tracking gradients, as we’re now in the realm of application, not training.

- Image Conversion: The tensor output of the model, now holding the digitally born artwork, is denormalized, translating the model’s creation back into the familiar space of color and light that our eyes can appreciate.

- Artwork Revelation: The transformed tensor is laid out onto a digital canvas, culminating in a saved image file. This file is a window into the AI’s creative soul, a static echo of the dynamic process that gave it life.

- Artwork Retrieval: The script concludes by saving the generated image to a designated path and announcing its completion. The saved image, a synthesis of learned artistic principles and emergent creativity, is ready for display and contemplation.

Analyzing the Output

The ConvDiffusionModel’s output presents a figure with a clear nod to historical art. Draped in elaborate attire, the AI-rendered image echoes the grandeur of classical portraits yet with a distinct, modern touch. The subject’s attire is rich in texture, blending the model’s learned patterns with a novel interpretation. Delicate facial features and a subtle interplay of light and shadow showcase the AI’s nuanced understanding of traditional art techniques. This artwork is a testament to the model’s sophisticated training, reflecting an elegant synthesis of historical artistry through the prism of advanced machine learning. In essence, it is a digital homage to the past, crafted with the algorithms of the present.

Challenges and Ethical Considerations

Implementing diffusion models for art generation brings with it several challenges and ethical considerations that you should consider:

- Data Provenance: The training datasets must be curated responsibly. Verifying that the data used to train diffusion models does not contain copyrighted or protected works without proper authorization is essential.

- Bias and Representation: AI models can perpetuate biases in their training data. Ensuring diverse and inclusive datasets is important to avoid reinforcing stereotypes in AI-generated art.

- Control Over Output: Since diffusion models can generate a wide range of outputs, setting boundaries to prevent the creation of inappropriate or offensive content is necessary.

- Legal Framework: The lack of a robust legal framework to address the nuances of AI in the creative process presents a challenge. Legislation needs to evolve to protect the rights of all parties involved.

Conclusion

The rise of diffusion models in AI and art marks a transformative era, merging computational precision with aesthetic exploration. Their journey in the art world highlights significant innovation potential but comes with complexities. Balancing originality, influence, ethical creation, and respect for existing works is integral to the artistic process.

Key Takeaways

- Diffusion models are at the forefront of a transformative shift in art creation. They offer new digital tools that expand the canvas of artistic expression beyond traditional boundaries.

- In the AI-enhanced art, prioritizing the ethical gathering of training data and respecting the intellectual property of creators is imperative to maintain integrity in digital artistry.

- The convergence of artistic vision and technological innovation opens doors to a symbiotic relationship between artists and AI developers. Foster a collaborative environment that can give rise to groundbreaking art.

- Ensuring that AI-generated art represents a broad spectrum of perspectives is vital. Incorporate a varied range of data that reflects the richness of different cultures and viewpoints, thus promoting inclusivity.

- The burgeoning interest in AI-crafted art necessitates the establishment of robust legal frameworks. These frameworks should clarify copyright issues, recognize contributions, and govern the commercial use of AI-generated artwork.

The dawn of this artistic evolution offers a path brimming with creative potential yet requires mindful guardianship. It is incumbent upon us to cultivate a landscape where the fusion of AI and art thrives, guided by responsible and culturally sensitive practices.

Frequently Asked Questions

A. Diffusion models are generative ML algorithms that create images by starting with a pattern of random noise and gradually shaping it into a coherent picture. This process is akin to an artist starting with a blank canvas and slowly adding layers of detail.

A. GANs, diffusion models do not require a separate network to judge the output. They work by adding and removing noise iteratively, often resulting in more detailed and nuanced images.

A. Yes, diffusion models can generate original art pieces by learning from a dataset of images. However, the originality is influenced by the diversity and scope of the training data. There is an ongoing debate about the ethics of using existing artworks to train these models.

A. Ethical concerns encompass avoiding AI-generated art copyright infringement. Respecting human artists’ originality, preventing bias perpetuation, and ensuring transparency in AI’s creative process.

A. The future of AI-generated art looks promising, with diffusion models offering new tools for artists and creators. We can expect to see more sophisticated and intricate artworks as technology advances. However, the creative community must navigate ethical considerations and work towards clear guidelines and best practices.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.

Related

- SEO Powered Content & PR Distribution. Get Amplified Today.

- PlatoData.Network Vertical Generative Ai. Empower Yourself. Access Here.

- PlatoAiStream. Web3 Intelligence. Knowledge Amplified. Access Here.

- PlatoESG. Carbon, CleanTech, Energy, Environment, Solar, Waste Management. Access Here.

- PlatoHealth. Biotech and Clinical Trials Intelligence. Access Here.

- Source: https://www.analyticsvidhya.com/blog/2023/12/implementing-diffusion-models-for-creative-ai-art-generation/

- :is

- :not

- :where

- 001

- 1

- 10

- 100

- 11

- 12

- 15%

- 150

- 16

- 19

- 224

- 225

- 8

- 9

- a

- ability

- About

- above

- ABSTRACT

- accurate

- Achieve

- achieving

- Adam

- adaptive

- adding

- Additional

- address

- Adjusted

- advanced

- advances

- advent

- adversarial

- AI

- ai art

- akin

- algorithms

- All

- Allowing

- allows

- an

- Analytical

- analytics

- Analytics Vidhya

- and

- Announcing

- Application

- appreciate

- approach

- architecture

- ARE

- Art

- article

- artist

- artistic

- artistically

- artistry

- Artists

- artwork

- artworks

- AS

- At

- augmented

- authorization

- available

- avenues

- avoid

- avoiding

- AXES

- back

- Bad

- balancing

- based

- BE

- becoming

- benefits

- BEST

- best practices

- between

- Beyond

- bias

- biases

- blank

- blending

- blogathon

- born

- both

- boundaries

- breathing

- brimming

- Brings

- broad

- brought

- burgeoning

- but

- by

- calculate

- called

- CAN

- canvas

- capabilities

- capability

- capture

- challenge

- challenges

- channels

- Chaos

- characteristic

- check

- checking

- clamp

- clarity

- class

- clear

- clearer

- closer

- code

- Coding

- COHERENT

- collaborate

- collaborative

- color

- comes

- commercial

- community

- compact

- compatibility

- competitive

- complete

- completion

- complex

- complexities

- component

- composition

- computational

- Compute

- concepts

- conceptual

- Concerns

- concert

- concludes

- Connections

- Consider

- considerations

- contain

- content

- contrast

- contributions

- conventional

- Convergence

- Conversion

- converting

- convolutional neural network

- copyright

- copyright infringement

- Core

- corrupt

- CPU

- crafted

- create

- Creating

- creation

- Creative

- Creatively

- creativity

- creators

- crucial

- culminating

- Cultivate

- culturally

- curated

- curve

- custom

- cycle

- dance

- data

- datasets

- debate

- deep

- defining

- demands

- demonstrated

- depth

- Depths

- Derived

- designated

- detail

- detailed

- details

- developers

- device

- differ

- difference

- different

- Diffusion

- digital

- Digital Art

- digitally

- Dimension

- dimensions

- discretion

- Display

- displaying

- distinct

- distinction

- diverse

- Diversity

- do

- does

- doors

- draw

- Drawings

- during

- dynamic

- dynamically

- dynamics

- e

- each

- echo

- echoes

- effects

- Elaborate

- else

- emerges

- encoded

- encompass

- encompassing

- Engineering

- enhanced

- ensure

- ensures

- ensuring

- Entire

- Environment

- epoch

- epochs

- Era

- error

- essence

- essential

- establishment

- Ether (ETH)

- ethical

- ethics

- evaluation

- Every

- evolution

- evolve

- evolved

- evolves

- examination

- Excel

- Except

- existing

- Expand

- expansive

- expect

- exploration

- explore

- expression

- extended

- extensive

- eye

- Eyes

- facial

- faithful

- false

- familiar

- fascinating

- Features

- Featuring

- fidelity

- Figure

- File

- Files

- final

- finish

- First

- Focus

- following

- For

- forefront

- Forward

- Foster

- fostering

- Framework

- frameworks

- from

- fully

- function

- functional

- fundamental

- further

- fusion

- future

- Gain

- GANs

- gathering

- gave

- generate

- generated

- generating

- generation

- generative

- generative adversarial networks

- Generative AI

- generators

- Give

- goal

- GPU

- gradients

- gradually

- grandeur

- grasp

- greater

- groundbreaking

- guided

- guidelines

- Guides

- hand

- Harnessing

- Heart

- here

- Hide

- highlights

- historical

- holding

- homage

- honor

- How

- However

- HTTPS

- human

- i

- idea

- if

- ignites

- image

- images

- imagination

- imperative

- implementing

- implications

- import

- important

- improve

- in

- includes

- Inclusive

- Inclusivity

- incorporate

- increased

- incremental

- Incumbent

- influence

- influenced

- infringement

- ingenuity

- innovate

- Innovation

- input

- inputs

- insight

- integral

- Integrating

- integrity

- intellectual

- intellectual property

- interest

- interpretation

- into

- intricate

- introduce

- intuition

- involved

- issues

- IT

- iteration

- iterations

- ITS

- journey

- jpg

- judge

- Lack

- landscape

- layer

- layers

- learned

- learning

- Legal

- legal framework

- Legislation

- Lens

- lies

- Life

- light

- like

- loading

- LOOKS

- loss

- losses

- machine

- machine learning

- maintain

- marvel

- masterpiece

- Match

- material

- matplotlib

- mean

- mechanism

- mechanisms

- Media

- merely

- merging

- method

- methodical

- metric

- minimize

- minute

- mirroring

- ML

- ML algorithms

- Mode

- model

- models

- Modern

- module

- more

- move

- much

- MUSE

- must

- names

- nascent

- Nature

- Navigate

- necessary

- needs

- network

- networks

- Neural

- Neural engineering

- neural network

- neural networks

- New

- Noise

- note

- novel

- now

- nuances

- observe

- observed

- of

- off

- offensive

- offer

- offering

- Offers

- often

- on

- ongoing

- only

- opens

- Optimize

- or

- original

- originality

- Originals

- OS

- Other

- our

- out

- output

- outputs

- over

- owned

- painting

- paintings

- parameter

- parameters

- part

- parties

- pass

- past

- path

- Pattern

- patterns

- perception

- perfecting

- perform

- perspectives

- picture

- piece

- pieces

- Platforms

- plato

- Plato Data Intelligence

- PlatoData

- portraits

- potential

- practices

- Precision

- preliminary

- present

- presents

- preserving

- prevent

- preventing

- principles

- printing

- prioritizing

- process

- processed

- producing

- Product

- Profile

- profound

- Progress

- progression

- progressively

- promising

- promoting

- prompts

- propagation

- proper

- property

- protect

- protected

- provenance

- providing

- published

- pursuing

- pytorch

- quantifies

- random

- randomness

- range

- Rate

- ready

- realm

- recognize

- Redefining

- refine

- refined

- reflecting

- reflects

- regime

- regular

- relationship

- removing

- rendering

- replication

- representation

- represents

- reproduction

- require

- requires

- resembling

- reshape

- respect

- respecting

- responsible

- responsibly

- resulting

- return

- revelation

- Revive

- revolutionize

- RGB

- Rich

- rights

- Rise

- robust

- Role

- same

- saved

- saving

- scene

- Science

- scope

- script

- see

- SELF

- sensitive

- separate

- Sequence

- serves

- set

- setting

- setup

- several

- Shadow

- shaping

- shift

- Shifts

- should

- showcase

- showcasing

- shown

- significant

- since

- Slowly

- Snippet

- So

- sophisticated

- Soul

- Source

- sourced

- Space

- spanning

- specifically

- Spectrum

- Squared

- stable

- Stage

- stand

- Starting

- statistical

- steady

- Step

- Strategy

- striving

- structure

- Stunning

- style

- subject

- subsequent

- such

- Symbiotic

- synergistic

- synthesis

- synthetic

- tailored

- takes

- taking

- Target

- Technical

- techniques

- technological

- Technologies

- Technology

- tensorflow

- testament

- that

- The

- The Future

- The Source

- their

- Them

- There.

- These

- they

- this

- thrives

- Through

- Thus

- to

- tools

- torch

- Torchvision

- touch

- towards

- Tracking

- traditional

- Train

- trained

- Training

- Transform

- Transformation

- transformations

- transformative

- transformed

- transforming

- transforms

- Transparency

- true

- try

- understand

- understanding

- unique

- until

- Unveils

- updating

- upon

- us

- use

- used

- using

- utility

- valid

- verifying

- via

- viewing

- viewpoints

- vision

- visual

- visual art

- visualization

- visualize

- visually

- vital

- was

- we

- webp

- What

- What is

- which

- while

- Whisper

- WHO

- wide

- Wide range

- will

- window

- with

- within

- without

- Work

- works

- world

- X

- yes

- yet

- you

- zephyrnet

- zero