Artificial intelligence has progressed so rapidly that even some of the scientists responsible for many key developments are troubled by the pace of change. Earlier this year, more than 300 professionals working in AI and other concerned public figures issued a blunt warning about the danger the technology poses, comparing the risk to that of pandemics or nuclear war.

Lurking just below the surface of these concerns is the question of machine consciousness. Even if there is “nobody home” inside today’s AIs, some researchers wonder if they may one day exhibit a glimmer of consciousness—or more. If that happens, it will raise a slew of moral and ethical concerns, says Jonathan Birch, a professor of philosophy at the London School of Economics and Political Science.

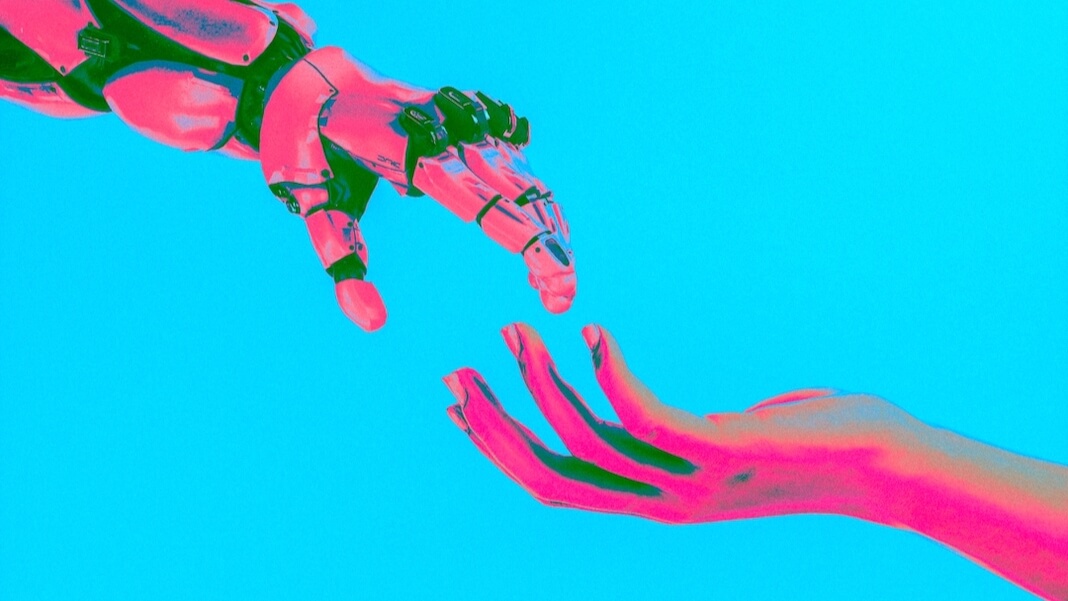

As AI technology leaps forward, ethical questions sparked by human-AI interactions have taken on new urgency. “We don’t know whether to bring them into our moral circle, or exclude them,” said Birch. “We don’t know what the consequences will be. And I take that seriously as a genuine risk that we should start talking about. Not really because I think ChatGPT is in that category, but because I don’t know what’s going to happen in the next 10 or 20 years.”

In the meantime, he says, we might do well to study other non-human minds—like those of animals. Birch leads the university’s Foundations of Animal Sentience project, a European Union-funded effort that “aims to try to make some progress on the big questions of animal sentience,” as Birch put it. “How do we develop better methods for studying the conscious experiences of animals scientifically? And how can we put the emerging science of animal sentience to work, to design better policies, laws, and ways of caring for animals?”

Our interview was conducted over Zoom and by email, and has been edited for length and clarity.

(This article was originally published on Undark. Read the original article.)

Undark: There’s been ongoing debate over whether AI can be conscious, or sentient. And there seems to be a parallel question of whether AI can seem to be sentient. Why is that distinction so important?

Jonathan Birch: I think it’s a huge problem, and something that should make us quite afraid, actually. Even now, AI systems are quite capable of convincing their users of their sentience. We saw that last year with the case of Blake Lemoine, the Google engineer who became convinced that the system he was working on was sentient—and that’s just when the output is purely text, and when the user is a highly skilled AI expert.

So just imagine a situation where AI is able to control a human face and a human voice and the user is inexperienced. I think AI is already in the position where it can convince large numbers of people that it is a sentient being quite easily. And it’s a big problem, because I think we will start to see people campaigning for AI welfare, AI rights, and things like that.

And we won’t know what to do about this. Because what we’d like is a really strong knockdown argument that proves that the AI systems they’re talking about are not conscious. And we don’t have that. Our theoretical understanding of consciousness is not mature enough to allow us to confidently declare its absence.

UD: A robot or an AI system could be programmed to say something like, “Stop that, you’re hurting me.” But a simple declaration of that sort isn’t enough to serve as a litmus test for sentience, right?

JB: You can have very simple systems [like those] developed at Imperial College London to help doctors with their training that mimic human pain expressions. And there’s absolutely no reason whatsoever to think these systems are sentient. They’re not really feeling pain; all they’re doing is mapping inputs to outputs in a very simple way. But the pain expressions they produce are quite lifelike.

I think we’re in a somewhat similar position with chatbots like ChatGPT: they are trained on over a trillion words of training data to mimic the response patterns of a human, to respond in ways that a human would.

So, of course, if you give it a prompt that a human would respond to by making an expression of pain, it will be able to skillfully mimic that response.

But I think when we know that’s the situation—when we know that we’re dealing with skillful mimicry—there’s no strong reason for thinking there’s any actual pain experience behind that.

UD: This entity that the medical students are training on, I’m guessing that’s something like a robot?

JB: That’s right, yes. So they have a dummy-like thing, with a human face, and the doctor is able to press the arm and get an expression mimicking the expressions humans would give for varying degrees of pressure. It’s to help doctors learn how to carry out techniques on patients appropriately without causing too much pain.

And we’re very easily taken in as soon as something has a human face and makes expressions like a human would, even if there’s no real intelligence behind it at all.

So if you imagine that being paired up with the sort of AI we see in ChatGPT, you have a kind of mimicry that is genuinely very convincing, and that will convince a lot of people.

UD: Sentience seems like something we know from the inside, so to speak. We understand our own sentience—but how would you test for sentience in others, whether an AI or any other entity beyond oneself?

JB: I think we’re in a very strong position with other humans, who can talk to us, because there we have an incredibly rich body of evidence. And the best explanation for that is that other humans have conscious experiences, just like we do. And so we can use this kind of inference that philosophers sometimes call “inference to the best explanation.”

I think we can approach the topic of other animals in exactly the same way—that other animals don’t talk to us, but they do display behaviors that are very naturally explained by attributing states like pain. For example, if you see a dog licking its wounds after an injury, nursing that area, learning to avoid the places where it’s at risk of injury, you’d naturally explain this pattern of behavior by positing a pain state.

And I think when we’re dealing with other animals that have nervous systems quite similar to our own, and that have evolved just like we have, I think that sort of inference is entirely reasonable.

UD: What about an AI system?

JB: In the AI case, we have a huge problem. We first of all have the problem that the substrate is different. We don’t really know whether conscious experience is sensitive to the substrate—does it have to have a biological substrate, which is to say a nervous system, a brain? Or is it something that can be achieved in a totally different material—a silicon-based substrate?

But there’s also the problem that I’ve called the “gaming problem”: when the system has access to trillions of words of training data, and has been trained with the goal of mimicking human behavior, the sorts of behavior patterns it produces could be explained by it genuinely having the conscious experience. Or, alternatively, they could just be explained by it being set the goal of behaving as a human would in that situation.

So I really think we’re in trouble in the AI case, because we’re unlikely to find ourselves in a position where it’s clearly the best explanation for what we’re seeing—that the AI is conscious. There will always be plausible alternative explanations. And that’s a very difficult bind to get out of.

UD: What do you imagine might be our best bet for distinguishing between something that’s actually conscious versus an entity that just has the appearance of sentience?

JB: I think the first stage is to recognize it as a very deep and difficult problem. The second stage is to try and learn as much as we can from the case of other animals. I think when we study animals that are quite close to us, in evolutionary terms, like dogs and other mammals, we’re always left unsure whether conscious experience might depend on very specific brain mechanisms that are distinctive to the mammalian brain.

To get past that, we need to look at as wide a range of animals as we can. And we need to think in particular about invertebrates, like octopuses and insects, where this is potentially another independently evolved instance of conscious experience. Just as the eye of an octopus has evolved completely separately from our own eyes—it has this fascinating blend of similarities and differences—I think its conscious experiences will be like that too: independently evolved, similar in some ways, very, very different in other ways.

And through studying the experiences of invertebrates like octopuses, we can start to get some grip on what the really deep features are that a brain has to have in order to support conscious experiences, things that go deeper than just having these specific brain structures that are there in mammals. What kinds of computation are needed? What kinds of processing?

Then—and I see this as a strategy for the long term—we might be able to go back to the AI case and say, well, does it have those special kinds of computation that we find in conscious animals like mammals and octopuses?

UD: Do you believe we will one day create sentient AI?

JB: I am at about 50:50 on this. There is a chance that sentience depends on special features of a biological brain, and it’s not clear how to test whether it does. So I think there will always be substantial uncertainty in AI. I am more confident about this: If consciousness can in principle be achieved in computer software, then AI researchers will find a way of doing it.

Image Credit: Cash Macanaya / Unsplash

- Source: https://singularityhub.com/2023/07/17/studying-animal-sentience-could-help-solve-the-ethical-puzzle-of-sentient-ai/
