Last week, Google launched its new AI, or rather its new large language model, dubbed Gemini. Gemini 1.0 comes in three versions: Gemini Nano is meant for tasks that run directly on a device, Gemini Pro is meant to be the best option across a wider range of tasks, and Gemini Ultra, Google's largest language model, is built to handle the most complex tasks you can give it.

Google was keen to point out at launch that Gemini Ultra outperformed the latest version of OpenAI's GPT-4 on 30 of the 32 tests most commonly used to measure the capabilities of language models. The tests cover everything from reading comprehension and math problems to writing Python code and analyzing images. On some tests the gap between the two models was only a few tenths of a percentage point; on others it was as much as ten percentage points.

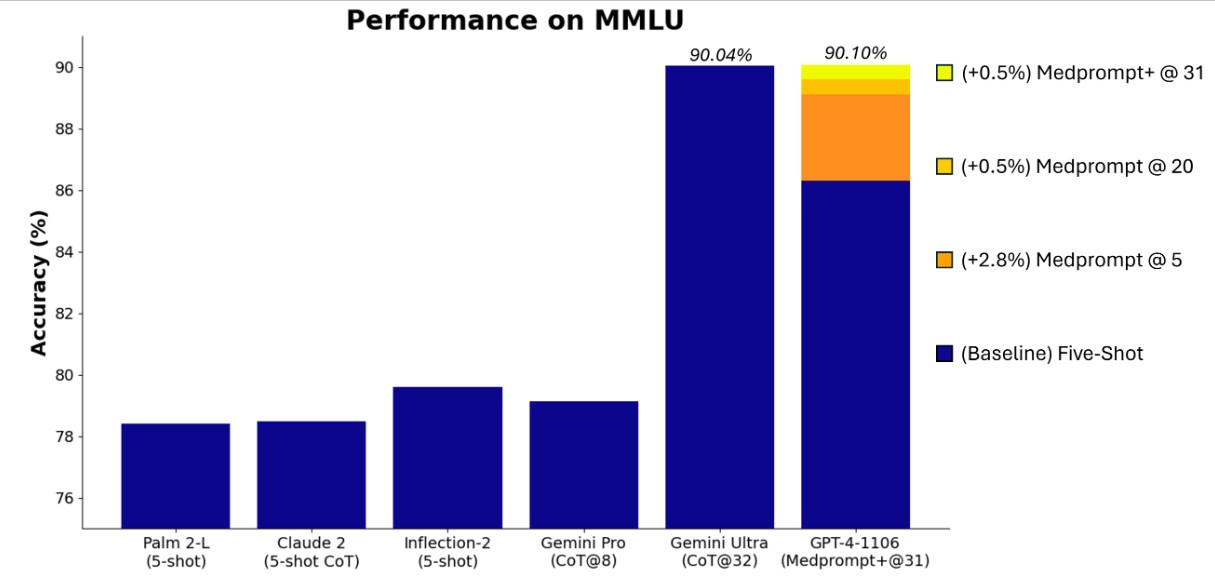

Perhaps Gemini Ultra's most impressive achievement, however, is that it is the first language model to beat human experts on the massive multitask language understanding (MMLU) benchmark, which poses problem-solving tasks in 57 different fields, ranging from math and physics to medicine, law, and ethics. Gemini Ultra scored 90.0 percent, while the human-expert baseline it was compared against "only" reached 89.8 percent.

Gemini is being rolled out gradually. Last week, Gemini Pro became available to the public when Google's chatbot Bard started using a specially tuned version of the model, and Gemini Nano is now built into a number of features on Google's Pixel 8 Pro smartphone. Gemini Ultra is not ready for the public yet. Google says it is still undergoing safety testing and is being shared only with a handful of developers and partners, as well as experts in AI safety and responsibility. The plan is to make Gemini Ultra available to the public through Bard Advanced, which is due to launch early next year.

Microsoft has now countered Google's claim that Gemini Ultra beats GPT-4 by running GPT-4 through the same tests again, this time with slightly modified prompts. In November, Microsoft researchers published research on something they call Medprompt, a combination of strategies for prompting the language model to get better results. You may have noticed that the answers you get from ChatGPT, or the images you get from Bing's Image Creator, change slightly when you reword your request. Medprompt builds on that same idea, but in a far more advanced way.
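Microsoft's Medprompt work combines several techniques, among them dynamically selected few-shot examples, chain-of-thought reasoning generated by the model itself, and "choice shuffling", where a multiple-choice question is asked several times with the answer options in a different order and the majority answer wins. As a rough illustration of that last idea only, here is a minimal Python sketch. It assumes a hypothetical `model` callable that takes a question and a list of options and returns the index of the option it picks; it is not Microsoft's code and leaves out the other parts of Medprompt.

```python
import random
from collections import Counter
from typing import Callable, Sequence

# Hypothetical model interface (an assumption for illustration): given a
# question and an ordered list of answer options, return the index it picks.
Model = Callable[[str, Sequence[str]], int]


def choice_shuffle_ensemble(model: Model, question: str, options: Sequence[str],
                            runs: int = 5, seed: int = 0) -> str:
    """Ask the same multiple-choice question several times, shuffling the
    option order each time, and return the answer that gets the most votes."""
    rng = random.Random(seed)
    votes: Counter = Counter()
    for _ in range(runs):
        shuffled = list(options)
        rng.shuffle(shuffled)
        # Map the model's chosen index back to the actual answer text
        picked = shuffled[model(question, shuffled)]
        votes[picked] += 1
    # most_common(1) returns [(answer, count)]; on a tie, the answer
    # counted first wins
    return votes.most_common(1)[0][0]


# Usage with a toy stand-in "model" that always finds the right answer
toy_model = lambda q, opts: opts.index("4")
print(choice_shuffle_ensemble(toy_model, "What is 2 + 2?", ["3", "4", "5", "22"]))
```

Shuffling the options is meant to cancel out any bias a model may have toward a particular answer position, so that the vote reflects the content of the answers rather than their order.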


Using Medprompt, Microsoft got GPT-4 to outperform Gemini Ultra on a number of the 30 tests Google had highlighted, including MMLU, where GPT-4 with Medprompt scored 90.10 percent. Which language model will dominate in the future remains to be seen; the battle for the AI throne is far from over.
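The margins in these MMLU results are small and easy to misread, since they are differences in percentage points rather than percent. A short Python sketch, using only the scores reported in this article, computes both the absolute gap and the relative gap:

```python
# MMLU scores in percent, as reported in the article
SCORES = {
    "Gemini Ultra": 90.0,
    "human experts": 89.8,
    "GPT-4 with Medprompt": 90.10,
}


def gap_points(a: str, b: str) -> float:
    """Absolute difference between two scores, in percentage points."""
    return round(SCORES[a] - SCORES[b], 2)


def gap_relative(a: str, b: str) -> float:
    """Difference expressed as a percentage of b's score (relative gap)."""
    return round((SCORES[a] - SCORES[b]) / SCORES[b] * 100, 2)


print(gap_points("Gemini Ultra", "human experts"))          # 0.2
print(gap_points("GPT-4 with Medprompt", "Gemini Ultra"))   # 0.1
print(gap_relative("GPT-4 with Medprompt", "Gemini Ultra")) # 0.11
```

In other words, GPT-4 with Medprompt led Gemini Ultra by a tenth of a percentage point, or roughly 0.11 percent in relative terms.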

This article was translated from Swedish to English and originally appeared on pcforalla.se.

Source: https://www.pcworld.com/article/2170884/ai-battle-continues-google-launches-gemini-to-beat-openai-and-microsofts-gpt-4.html