We are deeply committed to pursuing research that’s responsible and community-engaged in all areas, including artificial intelligence (AI). We achieve this through transparency, external validation, and support for academic institutions through collaboration and sponsorship. This approach allows us to accelerate the greatest advances in our three focus areas: generative AI, data center scaling, and online safety. Today, we’re sharing insights and results from two of our generative AI research projects. ControlNet is an open-source neural network that adds conditional control to image generation models for more precise image outputs. StarCoder is a state-of-the-art open-source large language model (LLM) for code generation.

Both projects are academic and industry collaborations. Both also aim to provide radically more powerful tools for our creators: 3D artists and programmers. Most importantly, and in keeping with our mission of investing in the long view through transformative research, these projects show signs of advancing the fundamental scientific understanding and control of AI for many applications. We believe this work may have a significant impact on the future of Roblox and the field as a whole, and we are proud to share it openly.

ControlNet

Recent AI breakthroughs — specifically data-driven machine learning (ML) methods using deep neural networks — have driven new advances in creation tools. These advances include our Code Assist and Material Generator features that are publicly available in our free tool, Roblox Studio. Modern generative AI systems contain data structures called models that are refined through billions of training operations. The most powerful models today are multimodal, meaning they are trained on a mixture of media such as text, images, and audio. This allows them to find the common underlying meanings across media rather than overfitting to specific elements of a data set, such as color palettes or spelling.

These new AI systems have significant expressive power, but that power is directed largely through “prompt engineering.” Prompt engineering simply means changing the input text, much like refining a search engine query that didn’t return what you expected. While this may be an engaging way to play with a new technology such as an undirected chatbot, it is not an efficient or effective way to create content. Creators instead need power tools that they can direct through active control rather than guesswork.

The ControlNet project is a step toward solving some of these challenges. It offers an efficient way to harness the power of large pre-trained AI models such as Stable Diffusion, without relying on prompt engineering. ControlNet increases control by allowing the artist to provide additional input conditions beyond just text prompts. Roblox researcher and Stanford University professor Maneesh Agrawala and Stanford researcher Lvmin Zhang frame the goals for our joint ControlNet project as:

- Develop a better user interface for generative AI tools. Move beyond obscure prompt manipulation and build around more natural ways of communicating an idea or creative concept.

- Provide more precise spatial control, to go beyond making “an image like” or “an image in the style of…” to enable realizing exactly the image that the creator has in their mind.

- Transform generative AI training to a more compute-efficient process that executes more quickly, requires less memory, and consumes less electrical energy.

- Extend image generative AI into a reusable building block. It then can be integrated with standardized image processing and 3D rendering pipelines.

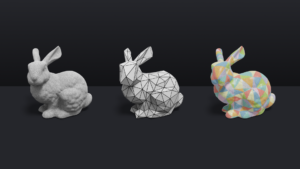

By allowing creators to provide an additional image for spatial control, ControlNet grants greater control over the final generated image. For example, a prompt of “male deer with antlers” on an existing text-to-image generator produced a wide variety of images, as shown below:

The images generated with previous AI solutions are attractive but essentially arbitrary: there is no control. Those earlier image-generating systems offer no way to steer the output except by revising the text prompt.

With ControlNet, the creator now has much more power. One way of using ControlNet is to provide both a prompt and a source image that determines the general shape to follow. In this case, the resulting images would still offer variety but, crucially, retain the specified shape:

The creator could also have specified a set of edges, an image with no prompt at all, or many other ways of providing expressive input to the system.

To create a ControlNet, we clone the weights within a large diffusion model’s network into two versions. One is the trainable network (this provides the control; it is “the ControlNet”) and the other is the locked network. The locked network preserves the capability learned from billions of images and could be any previous image generator. We then train the trainable network on task-specific data sets to learn the conditional control from the additional image. The trainable and locked copies are connected by a unique type of convolution layer we call zero convolution. Its weights start at zero and progressively grow to optimized parameters during training, so the trainable copy initially has no influence and the system learns the optimal level of control to exert on the locked network.

Since the original weights are preserved via the locked network, the model works well with training data sets of various sizes. The zero convolution layer also makes the process much faster: it is closer to fine-tuning a diffusion model than to training new layers from scratch.
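The locked and trainable copies described above can be illustrated with a minimal sketch. It is not the ControlNet implementation: the toy `Linear` class stands in for both a network block and a 1x1 convolution, and every name here (`Linear`, `zero_layer`, `controlled_block`) is hypothetical. It only shows why zero-initialized connecting layers leave the locked network's output unchanged before training begins.

```python
import copy


class Linear:
    """Toy dense layer standing in for a network block or a 1x1 convolution."""

    def __init__(self, weights, bias):
        self.weights = weights  # one row of input weights per output value
        self.bias = bias

    def __call__(self, x):
        return [
            sum(w * v for w, v in zip(row, x)) + b
            for row, b in zip(self.weights, self.bias)
        ]


def zero_layer(size):
    """A 'zero convolution' stand-in: every weight and bias starts at zero."""
    return Linear([[0.0] * size for _ in range(size)], [0.0] * size)


def controlled_block(x, condition, locked, trainable, zero_in, zero_out):
    """Add the trainable copy's output to the locked block through zero layers."""
    base = locked(x)  # frozen path: the original model's behavior
    # The condition enters the trainable copy through the first zero layer.
    injected = [a + b for a, b in zip(x, zero_in(condition))]
    # The trainable copy's result is scaled by the second zero layer.
    control = zero_out(trainable(injected))
    return [a + b for a, b in zip(base, control)]


# Assumed toy weights; a real model would hold millions of parameters.
locked = Linear([[1.0, 2.0], [0.5, -1.0]], [0.1, 0.0])
trainable = copy.deepcopy(locked)  # cloned weights; only this copy would be trained
zero_in, zero_out = zero_layer(2), zero_layer(2)

x, condition = [1.0, 2.0], [3.0, -4.0]
untrained_output = controlled_block(x, condition, locked, trainable, zero_in, zero_out)
```

With the zero layers untouched, `untrained_output` equals `locked(x)`, so training starts from the original model's behavior. Once training moves the zero-layer weights away from zero, the control path begins to contribute.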

We’ve performed extensive validation of this technique for image generation. ControlNet doesn’t just improve the quality of the output image. It also makes training a network for a specific task more efficient and thus practical to deploy at scale for our millions of creators. In experiments, ControlNet provides up to a 10x efficiency gain compared with alternatives that require a model to be fully retrained. This efficiency is critical, as the process of creating new models is time-consuming and resource-intensive relative to traditional software development. Making training more efficient conserves electricity, reduces costs, and increases the rate at which new functionality can be added.

ControlNet’s unique structure means it works well with training data sets of various sizes. It has been shown to work with many types of control modalities, including photos, hand-drawn scribbles, and OpenPose pose detection, and we believe it can be applied to many different types of media for generative AI content. This research is open and publicly available for the community to experiment with and build upon, and we’ll continue presenting more information as we make more discoveries with it.

StarCoder

Generative AI can be applied to produce images, audio, text, program source code, or any other form of rich media. Across different media, however, the applications with the greatest successes tend to be those for which the output is judged subjectively. For example, an image succeeds when it appeals to a human viewer. Certain errors in the image, such as strange features on the edges or even an extra finger on a hand, may not be noticed if the overall image is compelling. Likewise, a poem or short story may have grammatical errors or some logical leaps, but if the gist is compelling, we tend to forgive these.

Another way of considering subjective criteria is that the result space is continuous. One result may be better than another, but there’s no specific threshold at which the result is completely acceptable or unacceptable. For other domains and forms of media, the output is judged objectively. For example, the source code produced by a generative AI programming assistant is either correct or not. If the code cannot pass a test, it fails, even if it is similar to the code for a valid solution. This is a discrete result space. It is harder to succeed in a discrete space, both because the criteria are stricter and because one cannot progressively approach a good solution: the code is broken right up until it suddenly works.
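A small sketch can make the contrast between continuous and discrete result spaces concrete. It is illustrative only: the `area` function, its two test cases, and the use of `difflib` text similarity as a stand-in for "closeness to a valid solution" are all assumptions made for this example. A candidate that differs from a correct reference by a single character scores as nearly identical text, yet it fails the tests outright.

```python
import difflib

# Hypothetical reference solution and a near-miss produced by a code generator.
reference = "def area(w, h):\n    return w * h\n"
candidate = "def area(w, h):\n    return w + h\n"


def similarity(a, b):
    """Continuous score between 0 and 1: how alike two source strings look."""
    return difflib.SequenceMatcher(None, a, b).ratio()


def passes_tests(source):
    """Discrete verdict: the code either passes every test or it fails."""
    namespace = {}
    try:
        exec(source, namespace)  # define area() from the given source text
        area = namespace["area"]
        return area(3, 4) == 12 and area(5, 0) == 0
    except Exception:
        return False  # code that crashes counts as a failure


text_score = similarity(reference, candidate)
reference_ok = passes_tests(reference)
candidate_ok = passes_tests(candidate)
```

Here `text_score` is close to 1 while `candidate_ok` is `False`: by the objective criterion, near-identical text earns no partial credit.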

LLMs used for text output work well for subjective, continuous applications such as chatbots. They also seem to work well for prose generation in many human languages, such as English and French. However, existing LLMs don’t seem to work as well for programming languages as they do for those human languages. Code is a form of mathematics, a very different and objective way of expressing meaning compared with natural language. It is a discrete result space instead of a continuous result space. To achieve the highest quality of programming language code generation for Roblox creators, we need methods of applying LLMs that can work well in this discrete, objective space. We also need robust methods for expressing code functionality independent of a particular language syntax, such as Lua, JavaScript, or Python.

StarCoder, a new state-of-the-art open-source LLM for code generation, is a major advance on this technical challenge and a truly open LLM for everyone. StarCoder is one result of the BigCode research consortium, which involves more than 600 members across academic and industry research labs. Roblox researcher and Northeastern University professor Arjun Guha helped lead this team to develop StarCoder. These first published results focus exclusively on the code aspect, which is the area in which the field most needs new growth given the relative success of subjective methods.

To deliver generative AI through LLMs that support the larger AI ecosystem and the Roblox community, we need models that have been trained exclusively on appropriately licensed and responsibly gathered data sets. These should also bear unrestrictive licenses so that anyone can use them, build on them, and contribute back to the ecosystem. Today, the most powerful LLMs are proprietary, or licensed for limited forms of commercial use, which prohibits or limits researchers’ ability to experiment with the model itself. In contrast, StarCoder is a truly open model, created through a coalition of industry and academic researchers and licensed without restriction for commercial application at any scale. StarCoder is trained exclusively on responsibly gathered, appropriately licensed content. The model was initially trained on public code and an opt-out process is available for those who prefer not to have their code used for training.

Today, StarCoder works on 86 different programming languages, including Python, C++, and Java. As of the paper’s publication, it was outperforming every open code LLM that supports multiple languages and was even competitive with many of the closed, proprietary models.

The StarCoder LLM is a contribution to the ecosystem, but our research goal goes much deeper. The greatest impact of this research is in advancing semantic modeling of both objective and subjective multimodal models, including code, text, images, speech, and video, and in increasing training efficiency through domain-transfer techniques. We also expect to gain deep insights into the maintainability and controllability of generative AI for objective tasks such as source code generation. There is a big difference between an intriguing demonstration of emerging technology and a secure, reliable, and efficient product that brings value to its user community. For our ML models, we optimize performance for memory footprint, power conservation, and execution time. We’ve also developed a robust infrastructure, surrounded the AI core with software to connect it to the rest of the system, and developed a seamless system for frequent updates as new features are added.

Bringing Roblox’s scientists and engineers together with some of the sharpest minds in the scientific community is a key component in our pursuit of breakthrough technology. We are proud to share these early results and invite the research community to engage with us and build on these advances.

- Source: https://blog.roblox.com/2023/09/controlnet-starcoder-roblox-research-advancements-generative-ai/
