Researchers at Durham University, the University of Surrey and Royal Holloway, University of London have developed a novel AI system that can listen in on your keyboard to collect potentially sensitive data. The algorithm, presented in a new paper, was tested on a MacBook Pro keyboard and identified which keys were pressed with 93% to 95% accuracy, based solely on audio recordings.

The research also highlights a growing risk. Microphones are now built into phones, laptops and many other devices, and any of them could be used to compromise data security through acoustic side-channel attacks. Earlier papers have explored recovering laptop keystrokes from audio, but this AI-based approach reports a level of accuracy not seen in previous work.

According to the researchers, their AI model also outperforms hardware-based methods, which are limited by distance and bandwidth. Because microphones are embedded in so many consumer devices, the sound of typing is more exposed and easier to capture than ever before.

So how does the algorithm work? The researchers first recorded audio of typing on a MacBook Pro, pressing each key 25 times. These repeated samples let the AI system learn the minute differences between the sounds produced by each key.
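Before a model can learn from those 25 presses per key, a long recording has to be cut into individual keystrokes. The article does not describe how the researchers did this, so the Python sketch below assumes one simple approach: flag any fixed-size frame whose energy exceeds a threshold as the start of a key press. The function names and parameters (`frame_len`, `threshold`, `seg_frames`) are hypothetical, chosen for illustration only.

```python
import math
from typing import List, Tuple


def frame_energy(samples: List[float], frame_len: int) -> List[float]:
    """Sum of squared amplitudes over consecutive, non-overlapping frames."""
    return [
        sum(x * x for x in samples[i:i + frame_len])
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def isolate_keystrokes(
    samples: List[float], frame_len: int, threshold: float, seg_frames: int
) -> List[Tuple[int, int]]:
    """Return (start, end) sample indices for each detected keystroke.

    A keystroke starts at the first frame whose energy exceeds `threshold`;
    the segment then spans `seg_frames` frames so the whole press is kept.
    """
    energies = frame_energy(samples, frame_len)
    segments = []
    i = 0
    while i < len(energies):
        if energies[i] > threshold:
            start = i * frame_len
            end = min(start + seg_frames * frame_len, len(samples))
            segments.append((start, end))
            i += seg_frames  # skip ahead so one press is not detected twice
        else:
            i += 1
    return segments


# Synthetic example: two short bursts ("key presses") separated by silence.
silence = [0.0] * 400
burst = [0.5 * math.sin(2 * math.pi * 0.1 * n) for n in range(100)]
recording = silence + burst + silence + burst + silence
segments = isolate_keystrokes(recording, frame_len=50, threshold=1.0, seg_frames=4)
print(segments)  # [(400, 600), (900, 1100)]
```

A fixed threshold is the simplest possible choice; in a noisy room it would likely need tuning, or a more adaptive detector.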

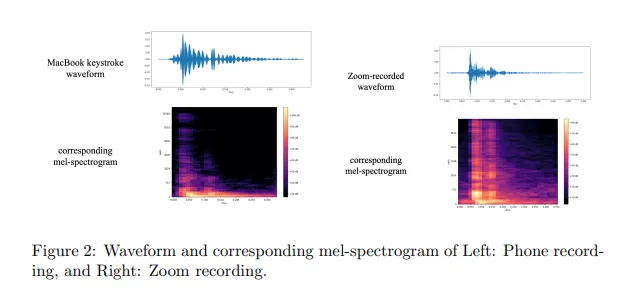

The audio recordings were then transformed into spectrograms, which are visual representations of sound frequencies over time. The AI model was trained on these spectrograms, learning to associate different patterns with different keystrokes.
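The spectrogram step can be illustrated with a short-time Fourier transform (STFT): slice the audio into overlapping frames, apply a window, and measure how strong each frequency is in each frame. This sketch assumes plain magnitude spectra with a Hann window and uses a naive DFT for readability. The researchers' actual front end (window size, hop length, any mel scaling) may differ, and the parameter values here are illustrative assumptions.

```python
import cmath
import math
from typing import List


def hann(n: int) -> List[float]:
    """Symmetric Hann window of length n (n > 1)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def spectrogram(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Magnitude spectrogram: result[t][k] is the strength of frequency bin k in frame t.

    Each frame is windowed, then a naive DFT computes bins 0..frame_len // 2
    (the non-negative frequencies of a real-valued signal).
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        seg = [s * w for s, w in zip(samples[start:start + frame_len], window)]
        mags = []
        for k in range(frame_len // 2 + 1):
            acc = sum(
                seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            mags.append(abs(acc))
        frames.append(mags)
    return frames


# A pure tone at 8/64 cycles per sample should peak in bin 8 of every frame.
tone = [math.sin(2 * math.pi * 8 * n / 64) for n in range(256)]
spec = spectrogram(tone, frame_len=64, hop=32)
peak_bins = [max(range(len(f)), key=f.__getitem__) for f in spec]
print(len(spec), peak_bins)  # 7 [8, 8, 8, 8, 8, 8, 8]
```

In practice a library FFT would replace the naive DFT loop, which is quadratic in the frame length; the output layout (time frames by frequency bins) is what a model would be trained on.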

By repeating this training process across many audio segments, the algorithm learns the subtle differences between the acoustic fingerprints of individual keys. Once trained on a specific keyboard, the model can analyze new recordings and predict keystrokes with high accuracy.
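To show the train-then-predict idea in miniature, the sketch below swaps in a much simpler technique than the researchers' deep-learning model: a nearest-centroid classifier. Training averages each key's flattened spectrogram features into one "fingerprint"; prediction labels a new keystroke with the key whose fingerprint is closest. The helper names and the toy 2x2 feature values are hypothetical.

```python
import math
from typing import Dict, List

Features = List[float]


def flatten(spec: List[List[float]]) -> Features:
    """Turn a spectrogram (frames x bins) into one feature vector."""
    return [value for frame in spec for value in frame]


def train_nearest_centroid(examples: Dict[str, List[Features]]) -> Dict[str, Features]:
    """Average each key's training vectors into a single fingerprint."""
    model = {}
    for key, vectors in examples.items():
        n = len(vectors)
        model[key] = [sum(column) / n for column in zip(*vectors)]
    return model


def predict(model: Dict[str, Features], features: Features) -> str:
    """Label a new keystroke with the key whose fingerprint is closest (Euclidean)."""
    return min(model, key=lambda key: math.dist(model[key], features))


# Toy 2x2 "spectrograms" for two keys (real ones would come from recordings).
examples = {
    "a": [flatten([[1.0, 0.0], [0.9, 0.1]]), flatten([[1.1, 0.1], [1.0, 0.0]])],
    "b": [flatten([[0.0, 1.0], [0.1, 0.9]]), flatten([[0.1, 1.1], [0.0, 1.0]])],
}
model = train_nearest_centroid(examples)
print(predict(model, flatten([[0.95, 0.05], [0.9, 0.0]])))  # a
```

A deep network can learn far richer patterns than a single average per key, which is part of why the reported accuracy is so high; the centroid version only illustrates why a model trained on one keyboard needs matching training data.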

The researchers found that when trained on a MacBook Pro keyboard, the algorithm achieved between 93% and 95% accuracy. Performance dropped only slightly when the model was tested on keystrokes captured in Zoom call recordings.

The AI system needs to be calibrated to specific keyboard models and audio environments. However, the approach could be widely applicable if attackers can obtain the proper training data. With a customized model, bad actors could potentially intercept passwords, messages, emails, and more.

Protect Yourself

While the privacy threat is concerning, the study also shows how capable AI algorithms have become at extracting information from new kinds of data. Acoustic emanations have long been explored in side-channel attacks, perhaps most commonly via laser microphones, but modern machine learning now allows far more sophisticated analysis of these leaked signals.

There are some ways to protect your data against this type of attack—and they don’t involve typing quietly.

Touch typists seem to confuse the model, causing its accuracy to drop to 40%, probably because they strike keys at different points, which changes the acoustics. Changing your typing style, playing sounds through a speaker, and using a touchscreen keyboard are also mentioned as countermeasures. You might also enjoy diving into the rabbit hole of keyboard modding: changing your keyboard's acoustics would render a trained model useless, since the attacker would have to train it again.
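As an illustration of the masking idea behind playing sounds through a speaker, the sketch below mixes random noise into a recording at a chosen signal-to-noise ratio (SNR). It assumes Gaussian white noise and a hypothetical `add_masking_noise` helper; the article does not specify which sounds would work best as a countermeasure.

```python
import math
import random
from typing import List, Tuple


def mean_power(x: List[float]) -> float:
    """Average power (mean squared amplitude) of a signal."""
    return sum(v * v for v in x) / len(x)


def add_masking_noise(
    signal: List[float], snr_db: float, seed: int = 0
) -> Tuple[List[float], List[float]]:
    """Mix Gaussian white noise into `signal` at the requested SNR in decibels.

    Returns (mixed, noise). Lower snr_db means louder noise relative to typing.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # SNR_dB = 10 * log10(P_signal / P_noise), so P_noise = P_signal / 10**(SNR_dB / 10).
    target_power = mean_power(signal) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_power / mean_power(noise))
    noise = [scale * v for v in noise]
    mixed = [s + v for s, v in zip(signal, noise)]
    return mixed, noise


# Stand-in for recorded typing audio (hypothetical test signal).
typing_audio = [0.5 * math.sin(2 * math.pi * 0.1 * n) for n in range(1000)]
mixed, noise = add_masking_noise(typing_audio, snr_db=0.0)
achieved = 10 * math.log10(mean_power(typing_audio) / mean_power(noise))
print(achieved)
```

At 0 dB the noise carries as much energy as the typing itself; whether that is enough to defeat a trained model is not something this sketch can show.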

Going forward, the researchers call for further work on detecting and guarding against these emerging threats. As AI unlocks new ways to exploit ubiquitous data sources, protecting data security and privacy will require equal ingenuity in identifying and mitigating unintended vulnerabilities.

- Source: https://decrypt.co/151623/ai-keyboard-eavesdropping-listen-hear-typing